Language models have changed the way computers process human language. They've made it easier for machines to follow what we say, generate text that sounds natural, and help people translate languages or summarize long passages. Two kinds of models stand out in this space: generative LLMs (Large Language Models) and BERT (Bidirectional Encoder Representations from Transformers).

While they often get mentioned in the same breath, they work differently and serve different purposes. Looking at how they are used in real-world tasks reveals not only what they can do but also where each model fits best.

Before looking at what these models can do, it helps to understand what makes them tick. BERT came out of Google in 2018 and changed how machines read language. Instead of scanning a sentence from left to right, it looks at all the words at once—both before and after each word. This gives it a better grasp of meaning, especially in sentences where context matters. BERT isn’t built to write full paragraphs. It’s more like a sharp reader—great at picking up tone, tagging sentences, or pulling out key details from short text.

LLMs, or Large Language Models, work differently. They're built on the same transformer idea but stretched much further. Models like GPT-3 and GPT-4 are trained on a massive amount of text, including books, websites, and conversations. Their training goal is to predict the next word (more precisely, the next token) given everything that came before it, which ends up teaching them how to write the way people do. Unlike BERT, these models don't just understand. They can talk back, write essays, and hold a conversation. They're trained to respond in full sentences, not just label or classify.
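One way to picture the difference in how the two families read a sentence is to look at which words each position is allowed to see. The sketch below is only an illustration, not either model's actual implementation: `bidirectional_mask`, `causal_mask`, and `visible_words` are hypothetical helper names, and a 1 in the grid means "position i may look at position j."

```python
def bidirectional_mask(n):
    """BERT-style: every position may attend to every other position."""
    return [[1] * n for _ in range(n)]


def causal_mask(n):
    """GPT-style: position i may attend only to positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def visible_words(words, mask, i):
    """Return the words that position i is allowed to see under the mask."""
    return [w for j, w in enumerate(words) if mask[i][j]]


words = ["the", "bank", "of", "the", "river"]
n = len(words)

# For "bank" (index 1), a bidirectional reader also sees "river",
# while a left-to-right model sees only "the bank".
print(visible_words(words, bidirectional_mask(n), 1))
print(visible_words(words, causal_mask(n), 1))
```

Seeing "river" is what lets a bidirectional reader settle on the right sense of "bank." Seeing only the words so far is what makes next-word prediction, and therefore text generation, possible.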

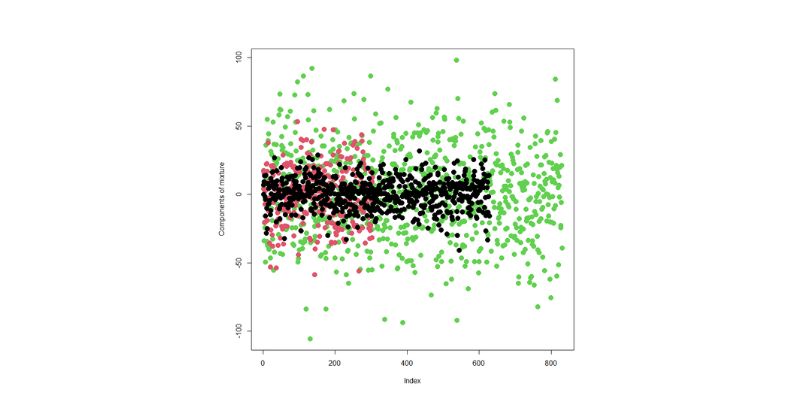

The tasks for which these models are used can be broadly divided into understanding and generation. BERT excels at understanding. Tasks such as sentiment analysis, entity recognition, and sentence classification are where BERT typically performs best. For example, if a company wants to scan thousands of customer reviews and identify which ones are complaints, BERT can be trained to do that very effectively. It identifies patterns, pinpoints keywords, and learns from labeled data. It's fast and efficient for these kinds of jobs.

LLMs, on the other hand, shine in more open-ended applications. Writing an article, creating a summary from a long report, answering questions in full paragraphs, or chatting with users in a natural way—all these require generating coherent, fluid text. These models understand context over longer stretches of text and can respond in a way that closely mimics human writing. While BERT might struggle to generate a paragraph that makes sense from start to finish, LLMs are designed for that kind of task.

There are overlaps, too. Both BERT and LLMs can handle question answering, but the type of question matters. For short, fact-based answers, like "What year was the Eiffel Tower built?", a fine-tuned BERT can quickly highlight the exact answer span inside a passage it is given. For more elaborate questions, like "What are the main themes in a novel?", LLMs are better equipped because they can reason and generate longer explanations.
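For the fact-based case, a BERT-style question-answering model typically scores every token in a passage as a possible start and a possible end of the answer, then picks the best-scoring span. Here is a minimal sketch of that selection step. The scores are made up for illustration rather than produced by a real model, and `best_span` is a hypothetical helper name.

```python
def best_span(start_scores, end_scores, max_len=5):
    """Pick (start, end) maximizing start_scores[s] + end_scores[e], with s <= e."""
    best, best_score = (0, 0), float("-inf")
    for s, s_score in enumerate(start_scores):
        # Only consider ends at or after the start, within max_len tokens.
        for e in range(s, min(s + max_len, len(end_scores))):
            score = s_score + end_scores[e]
            if score > best_score:
                best, best_score = (s, e), score
    return best


tokens = ["The", "tower", "was", "completed", "in", "1889", "."]
# Made-up scores standing in for a fine-tuned model's output.
start_scores = [0.1, 0.2, 0.0, 0.3, 0.5, 4.0, 0.0]
end_scores = [0.0, 0.1, 0.0, 0.2, 0.1, 4.2, 0.3]

s, e = best_span(start_scores, end_scores)
answer = " ".join(tokens[s : e + 1])
print(answer)
```

The model never writes anything new here. It only points at words already in the passage, which is why this approach works for short factual answers but not for open-ended explanations.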

Another major point of comparison is how these models are fine-tuned and adapted for real-world use. BERT is usually fine-tuned on specific tasks with labeled data. This means it learns from examples where the right answer is already known. For example, a model might be trained to recognize spam by feeding it a set of emails marked “spam” and “not spam.” The training process adjusts BERT’s parameters so it becomes better at that task over time.

LLMs can be fine-tuned as well, though they often perform well even without task-specific training. Some are used “out of the box,” meaning they rely entirely on what they learned during their original training. Others are fine-tuned, or guided with few-shot prompting, where a handful of worked examples placed directly in the prompt is enough to steer the model without changing its parameters. This flexibility makes LLMs easier to apply in varied contexts, though they also require more computational power and care to avoid generating inaccurate or misleading information.
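To make the few-shot idea concrete, here is a minimal sketch of how such a prompt might be put together. The `Review:` / `Label:` layout and the `build_few_shot_prompt` helper are assumptions made for illustration. Real prompts vary widely, and the resulting string would still need to be sent to whichever LLM you use.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble instruction, labeled examples, and the new input into one prompt."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Label: {label}")
        lines.append("")
    # The final entry is left unlabeled for the model to complete.
    lines.append(f"Review: {query}")
    lines.append("Label:")
    return "\n".join(lines)


examples = [
    ("The package arrived broken.", "complaint"),
    ("Fast shipping, great quality.", "praise"),
]
prompt = build_few_shot_prompt(
    "Classify each review as complaint or praise.",
    examples,
    "I was charged twice for one order.",
)
print(prompt)
```

No model weights change in this setup. The examples travel with every request, so swapping them out is all it takes to adjust the behavior.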

One real-world trend is the use of LLMs as the base model, with task-specific instructions layered on top. For example, instead of training a whole new model for customer service, a company might prompt an LLM with guidelines and tone preferences. This approach saves time and makes the system easier to update as needs change.
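As a rough sketch of what "guidelines layered on top" can look like, the example below keeps the tone and rules as plain data and turns them into a system message. The role/content message layout mirrors a convention many chat-style LLM APIs use, but treat it as an assumption here. `GUIDELINES` and `build_messages` are hypothetical names.

```python
GUIDELINES = {
    "tone": "friendly and concise",
    "rules": [
        "Apologize once, then focus on the fix.",
        "Never promise a refund without an order number.",
    ],
}


def build_messages(guidelines, user_text):
    """Turn guidelines into a system message followed by the user's message."""
    rules = "\n".join(f"- {rule}" for rule in guidelines["rules"])
    system = (
        "You are a customer service assistant.\n"
        f"Tone: {guidelines['tone']}\n"
        f"Rules:\n{rules}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_text},
    ]


messages = build_messages(GUIDELINES, "My order never arrived.")
print(messages[0]["content"])
```

Because the guidelines live in data rather than in model weights, updating the policy means editing `GUIDELINES`, not retraining anything.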

While both models are powerful, they aren’t perfect. BERT, despite its skill in comprehension, is limited in range. The original BERT accepts at most 512 tokens per input, so longer documents have to be truncated or split into pieces, and it can’t produce fluent, natural responses. LLMs are more flexible but require more resources and can sometimes generate false or biased content, especially if not carefully managed.
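A common workaround for BERT's input limit (512 tokens in the original release) is to cut a long document into overlapping windows and run the model on each one. The sketch below shows only the windowing step, under simplifying assumptions: `sliding_windows` is a hypothetical helper, the tokens are placeholders, and real tokenizers also reserve a few slots for special tokens, which this ignores.

```python
def sliding_windows(tokens, max_len=512, stride=384):
    """Split tokens into windows of at most max_len tokens.

    Consecutive windows start `stride` tokens apart, so they overlap
    by max_len - stride tokens.
    """
    if not 0 < stride <= max_len:
        raise ValueError("stride must be between 1 and max_len")
    windows = []
    start = 0
    while True:
        windows.append(tokens[start : start + max_len])
        if start + max_len >= len(tokens):
            break  # this window already reaches the end of the text
        start += stride
    return windows


tokens = [f"tok{i}" for i in range(1000)]  # placeholder tokens
windows = sliding_windows(tokens)
print([len(w) for w in windows])
```

The overlap is there so that a sentence cut off at the edge of one window appears whole in the next. The per-window predictions still have to be merged afterwards, which is extra work an LLM with a longer context window may not need.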

In many production environments, the choice between LLMs and BERT depends on trade-offs. If the goal is fast, accurate analysis of short texts—like sorting emails or tagging posts—BERT might be the better fit. If the goal is to provide user-facing content, such as writing suggestions or conversational agents, LLMs are the tool of choice.

The line between these models continues to blur as research evolves. There are now hybrid models and fine-tuned versions that combine the strengths of both. Developers and researchers are finding smarter ways to use them together, assigning each the tasks it handles best. These models are not replacing one another but rather creating a more layered and flexible approach to language tasks.
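A simple version of "assigning each the tasks it handles best" might look like the sketch below: a cheap classifier labels every incoming message, and only the ones that need a written reply are passed to a generative model. Both model calls are stubbed out with hypothetical placeholder functions. A real system would swap in a fine-tuned BERT-style classifier and an actual LLM call.

```python
def classify_with_encoder(text):
    """Hypothetical stand-in for a fine-tuned BERT-style classifier."""
    return "complaint" if "refund" in text.lower() else "other"


def generate_with_llm(prompt):
    """Hypothetical stand-in for a call to a generative LLM."""
    return f"[draft reply for: {prompt}]"


def handle_ticket(text):
    """Label every ticket with the encoder; only complaints go on to the LLM."""
    label = classify_with_encoder(text)
    reply = None
    if label == "complaint":
        reply = generate_with_llm(f"Write a polite response to: {text}")
    return {"label": label, "reply": reply}


print(handle_ticket("I want a refund for my order."))
print(handle_ticket("What are your opening hours?"))
```

The design choice here is about cost: the fast model touches every message, while the slower, more expensive one runs only where generation is actually needed.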

The rise of LLMs and BERT has made language processing far more accessible and adaptable. They handle different kinds of work, with BERT focusing more on understanding and classification and LLMs built for generation and dialogue. Each model brings unique strengths, and their use depends on the demands of the task. As both continue to develop, their role in everyday applications will only grow—quietly working in the background of search engines, writing tools, customer service platforms, and more. The real progress isn't about one model winning over the other but about learning where and how each one fits.
