Amazon Web Services (AWS) has introduced new GenAI tools to accelerate AI project development. These tools offer robust model training and image generation capabilities, streamlining complex workflows through integrated cloud technologies and automation. With minimal effort, developers can build advanced AI pipelines using upgraded Amazon SageMaker and Amazon Bedrock features.

These enhancements provide scalable, high-performance options for enterprise and research applications. AWS's reliable infrastructure ensures faster, more consistent results, while cloud-native customization enables more flexible model training. Altogether, these updates aim to deliver seamless use case implementation as AWS continues to lead in generative AI innovation.

Amazon Bedrock now supports modern image-generation models that produce high-quality graphics in seconds. Providers such as Stability AI offer their foundation models to developers through Bedrock. These models generate realistic, highly detailed visuals from simple text prompts. Enterprise-grade integration brings AWS security and scalability to these workloads. Teams can adjust generation settings in real time without deep machine learning expertise, and built-in APIs let them test and iterate on images quickly. The service supports several output formats for business and scientific needs.

Fewer operational issues during deployment speed up delivery. The Bedrock interface simplifies prompt design and gives users control over results. With minimal preparation, users can create thousands of original images. Use cases span retail, gaming, and marketing, among other sectors. AWS data privacy protections support security and compliance requirements. Cost controls and usage tracking provide transparency. Seamless integration with existing AWS workflows raises productivity. For teams that want fast, scalable image production, Bedrock is a strong fit. It represents a significant advancement in cloud-hosted visual AI applications.
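To make the text-to-image flow described above more concrete, here is a minimal Python sketch of a Bedrock request. It assumes the Stability AI SDXL request and response layout (`text_prompts`, `artifacts`) and the model ID `stability.stable-diffusion-xl-v1`; both are assumptions that depend on which models are enabled in a given account and Region. The helper names are hypothetical, and the parameter values are illustrative only.

```python
import base64
import json
from typing import Union


def build_image_request(prompt: str, seed: int = 0, steps: int = 30,
                        cfg_scale: float = 7.0) -> str:
    """Serialize a text-to-image request body (assumed SDXL-style layout)."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return json.dumps({
        "text_prompts": [{"text": prompt}],
        "seed": seed,
        "steps": steps,
        "cfg_scale": cfg_scale,
    })


def decode_first_image(response_body: Union[str, bytes]) -> bytes:
    """Return the first base64-encoded image in a response body as raw bytes."""
    payload = json.loads(response_body)
    return base64.b64decode(payload["artifacts"][0]["base64"])


def generate_image(prompt: str,
                   model_id: str = "stability.stable-diffusion-xl-v1") -> bytes:
    """Call Bedrock. Needs boto3, AWS credentials, and access to the model."""
    import boto3  # imported lazily so the helpers above work without it

    client = boto3.client("bedrock-runtime")
    response = client.invoke_model(
        modelId=model_id,  # assumed model ID; check what your account offers
        body=build_image_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    return decode_first_image(response["body"].read())
```

Keeping the payload builder and the decoder separate from the network call makes it easy to iterate on prompts and settings, and to test the request shape without incurring usage costs.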

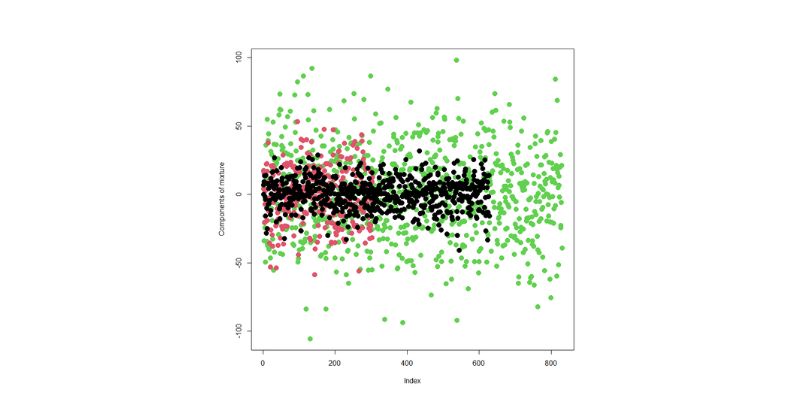

Amazon SageMaker now offers enhanced capabilities for fast and accurate model training. It supports more efficient, advanced generative AI models, with distributed training and auto-scaling reducing infrastructure needs and setup time. Model parallelism lets SageMaker train large models that would not fit in the memory of a single device. Developers have full control over model behavior and testing, with built-in real-time metrics for accuracy, latency, and loss. Smarter search techniques reduce the computation needed for hyperparameter tuning. SageMaker also now supports seamless import of open-source libraries, including Hugging Face.

Teams can fine-tune models for particular tasks, such as image captioning, translation, and summarization. For large datasets, SageMaker integrates with Amazon S3. Models can be deployed directly to endpoints for live applications. Security and compliance tools are included by default. Error tracking and log entries make debugging easier. These improvements make SageMaker more adaptable to a range of AI initiatives. Together, the GenAI updates from AWS streamline both training and deployment.
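As a rough illustration of the training setup described above, the following Python sketch assembles a request for the SageMaker `create_training_job` API, reading data from Amazon S3. The function names, instance type, volume size, and single `train` channel are assumptions chosen for the example, not recommended settings.

```python
def build_training_job_request(job_name, image_uri, role_arn, train_s3_uri,
                               output_s3_uri, hyperparameters,
                               instance_type="ml.g5.2xlarge", instance_count=1,
                               max_runtime_seconds=3600):
    """Assemble keyword arguments for the SageMaker create_training_job call."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,
            "TrainingInputMode": "File",
        },
        "RoleArn": role_arn,
        # The API expects every hyperparameter value as a string.
        "HyperParameters": {k: str(v) for k, v in hyperparameters.items()},
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                "S3DataDistributionType": "FullyReplicated",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "ResourceConfig": {
            "InstanceType": instance_type,    # illustrative choice
            "InstanceCount": instance_count,  # more than 1 distributes the job
            "VolumeSizeInGB": 50,             # illustrative choice
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": max_runtime_seconds},
    }


def start_training_job(request):
    """Submit the job. Needs boto3, AWS credentials, and a valid IAM role."""
    import boto3  # imported lazily so the builder works without it

    return boto3.client("sagemaker").create_training_job(**request)
```

Building the request as plain data first means it can be reviewed, versioned, or validated before any compute is started.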

AWS supports large-scale GenAI initiatives through enhanced cloud-based collaboration tools. Teams can develop models together in shared environments in Amazon SageMaker Studio. Role-based access controls keep collaboration secure across departments and projects. To accelerate development tasks, Amazon CodeWhisperer integrates with IDEs. During training, version control supports model checkpointing and recovery. Scalable compute resources let workloads adjust to demand in real time, and fewer delays make projects easier to manage. Multi-Region support improves compliance and resilience for teams worldwide. For datasets and output files, Amazon EFS and Amazon FSx simplify file sharing.

Collaborative notebooks in AWS support visual debugging, live annotations, and real-time documentation. Multiple users can run tests simultaneously without conflicts. The AWS Management Console offers real-time monitoring of resource usage. Budgets and quotas can be managed per team or per project. These features provide a consistent platform for international AI collaboration. Developers save time while keeping full control. On challenging generative AI projects, AWS makes scaling and collaboration easier.

AWS GenAI tools now streamline real-time inference and model deployment. Amazon SageMaker Inference delivers low-latency predictions and supports multi-model endpoints, which let developers deploy multiple models on a single endpoint. This approach saves compute while keeping latency low. Load balancing maintains high availability during peak use. Real-time inference powers language translation, image labeling, and chatbots, among other uses. Elastic container support lets capacity scale automatically. Security measures include IAM roles for API access, VPC isolation, and encryption.
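The multi-model endpoint idea above can be sketched in Python as follows. With such an endpoint, the caller names the model artifact to use through the `TargetModel` parameter of `invoke_endpoint`. The endpoint name, artifact names, and JSON payload shape in this example are hypothetical and depend on how the endpoint and its containers were set up.

```python
import json


def build_invoke_args(endpoint_name, target_model, payload):
    """Build keyword arguments for a multi-model invoke_endpoint call."""
    return {
        "EndpointName": endpoint_name,
        # Path of the model artifact, relative to the endpoint's S3 prefix.
        "TargetModel": target_model,
        "ContentType": "application/json",
        "Body": json.dumps(payload).encode("utf-8"),
    }


def invoke(endpoint_name, target_model, payload):
    """Send the request. Needs boto3, AWS credentials, and a live endpoint."""
    import boto3  # imported lazily so the builder works without it

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        **build_invoke_args(endpoint_name, target_model, payload)
    )
    # Assumes the model container answers with JSON.
    return json.loads(response["Body"].read())
```

Two calls that differ only in `target_model`, for example `"translator.tar.gz"` and `"labeler.tar.gz"`, would then be served by the same endpoint and the same pool of instances.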

AWS Lambda can trigger event-based inference logic. Amazon CloudWatch integration enables live monitoring of performance and error rates. Model containers can be optimized for GPU acceleration. Model updates can be deployed without downtime. Models are also monitored continuously for performance degradation or drift. These features improve the user experience and cut time to market. AWS's strong backend helps real-time apps remain responsive and efficient, which makes it well suited to production-grade GenAI services at scale.
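One way to picture the event-based inference mentioned above is a Lambda function that reacts to new objects in an S3 bucket and forwards them to a SageMaker endpoint. The Python sketch below assumes an S3 event notification as the trigger, a hypothetical endpoint name, and a hypothetical `{"s3_uri": ...}` payload that the model container would need to understand. The extra `runtime` argument is not part of the Lambda handler signature; it exists only so the handler can be exercised locally with a stand-in client.

```python
import json
from urllib.parse import unquote_plus

ENDPOINT_NAME = "genai-labeling-endpoint"  # hypothetical endpoint name


def extract_s3_objects(event):
    """Return (bucket, key) pairs from an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


def handler(event, context, runtime=None):
    """Lambda entry point: run inference for each newly created object."""
    if runtime is None:
        import boto3  # available by default in the Lambda Python runtime

        runtime = boto3.client("sagemaker-runtime")

    results = []
    for bucket, key in extract_s3_objects(event):
        response = runtime.invoke_endpoint(
            EndpointName=ENDPOINT_NAME,
            ContentType="application/json",
            # Assumed payload shape; the container must know how to read it.
            Body=json.dumps({"s3_uri": f"s3://{bucket}/{key}"}),
        )
        results.append(json.loads(response["Body"].read()))
    return {"processed": len(results), "results": results}
```

Errors raised inside the handler surface in CloudWatch Logs and in the function's error metrics, which is where the monitoring described above would pick them up.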

AWS provides configurable SDKs and APIs for building custom GenAI workflows. Developers can use AWS SDKs for Python, Java, and other languages. These SDKs allow image generation and model training pipelines to run automatically. Event-driven workflows can be built with AWS Step Functions or AWS Lambda. APIs give fine-grained control over data inputs and outputs. Workflows can be designed with error handling and conditional logic. AWS Glue and Amazon Athena allow data preparation to be included as well.
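The error handling and conditional logic mentioned above can be expressed in a Step Functions state machine definition. The Python sketch below builds one as a plain dictionary in Amazon States Language: a training task with `Retry` and `Catch`, followed by a `Choice` state that deploys only when accuracy clears a threshold. The state names, the `$.accuracy` field, the 0.9 threshold, and the three Lambda ARNs are hypothetical.

```python
import json


def build_state_machine(train_fn_arn, deploy_fn_arn, notify_fn_arn,
                        min_accuracy=0.9):
    """Return an Amazon States Language definition as a Python dict."""
    return {
        "Comment": "Train, then deploy only if accuracy is high enough",
        "StartAt": "TrainModel",
        "States": {
            "TrainModel": {
                "Type": "Task",
                "Resource": train_fn_arn,
                # Error handling: retry twice with backoff, then give up.
                "Retry": [{
                    "ErrorEquals": ["States.TaskFailed"],
                    "IntervalSeconds": 30,
                    "MaxAttempts": 2,
                    "BackoffRate": 2.0,
                }],
                "Catch": [{"ErrorEquals": ["States.ALL"],
                           "Next": "NotifyFailure"}],
                "Next": "CheckAccuracy",
            },
            "CheckAccuracy": {
                # Conditional logic on the training task's output.
                "Type": "Choice",
                "Choices": [{
                    "Variable": "$.accuracy",
                    "NumericGreaterThanEquals": min_accuracy,
                    "Next": "DeployModel",
                }],
                "Default": "NotifyFailure",
            },
            "DeployModel": {"Type": "Task", "Resource": deploy_fn_arn,
                            "End": True},
            "NotifyFailure": {"Type": "Task", "Resource": notify_fn_arn,
                              "End": True},
        },
    }


def undefined_targets(definition):
    """Return transition targets that do not name a defined state."""
    states = definition["States"]
    targets = {definition["StartAt"]}
    for state in states.values():
        for field in ("Next", "Default"):
            if field in state:
                targets.add(state[field])
        for rule in state.get("Choices", []) + state.get("Catch", []):
            targets.add(rule["Next"])
    return targets - set(states)
```

The definition would be serialized with `json.dumps` and passed as the `definition` argument when creating the state machine; running `undefined_targets` first catches misspelled state names before anything is deployed.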

From one interface, users can coordinate multi-step actions. Blueprints and templates help novices get started quickly. Advanced users benefit from customizing AI tools at the SDK level. AWS Secrets Manager securely manages API keys and tokens. AWS CloudTrail logs simplify auditing and monitoring. Alerts can be created for workflow failures. These tools support both real-time and batch tasks. Developers can tailor GenAI services to meet specific enterprise requirements. The rich AWS API ecosystem makes AI integration scalable and versatile.
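As a small illustration of the Secrets Manager point above, the Python sketch below reads an API key at run time instead of hard-coding it. It assumes the secret was stored as a JSON string; the function name, the secret name in the usage note, and the optional `client` argument (there so the function can be tried with a stand-in) are hypothetical.

```python
import json


def load_api_credentials(secret_id, client=None):
    """Fetch a secret and parse it, assuming it was stored as a JSON string."""
    if client is None:
        import boto3  # needs AWS credentials with permission to read the secret

        client = boto3.client("secretsmanager")
    response = client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])
```

A workflow step could call `load_api_credentials("genai/partner-api")` and read the key from the returned dictionary, so that no token appears in source code or in workflow definitions.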

AWS has reshaped its generative AI offering with new tools for model training and image generation. Updates to Amazon Bedrock and SageMaker provide users with speed, control, and scalability. These solutions streamline AI development across several sectors. The workflow stays smooth and secure from training to deployment. Advanced capabilities, including cloud collaboration and real-time inference, support faster delivery. Companies can now innovate without the burden of managing complex infrastructure.
