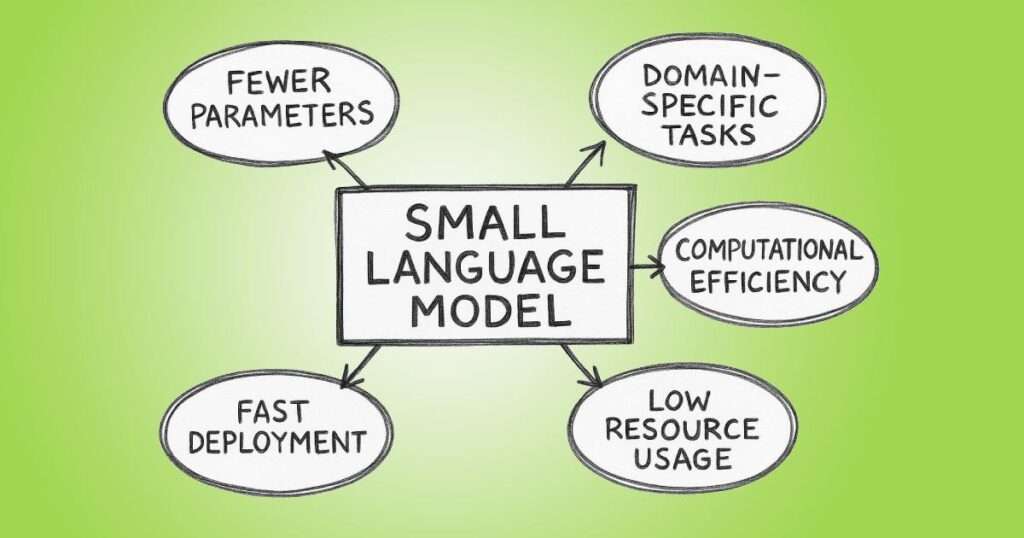

If you've worked with large language models before, you're probably familiar with the trade-off: the faster the model, the weaker the results, unless you're willing to throw enormous resources at it. That's why SmolLM, Hugging Face's family of small language models (135M, 360M, and 1.7B parameters), gets people's attention right out of the gate. It doesn't pretend to compete with the largest models out there, but it does promise useful output quickly and on modest hardware. So, what's different here? Why are developers and engineers getting excited? It's not just about speed; it's about what gets done while being fast.

Let’s start with the obvious: speed. SmolLM runs quickly, even on machines without top-tier hardware. That’s not just a nice-to-have; it changes the way you can use it in real-time applications. Imagine building a product where every millisecond counts. With larger models, you’re usually stuck waiting or trimming down your prompts just to keep the thing moving. SmolLM flips that. You can feed it a reasonably sized prompt and still get a response without grinding everything to a halt.

But speed alone wouldn't be enough. What's impressive is how much language understanding it retains despite being lightweight. Smaller models often drop nuance or miss context, but SmolLM holds on to more than you'd expect. You get responses that are clean, concise, and surprisingly coherent for something that doesn’t chew through your RAM.

Another plus? Local deployment. You’re not tethered to a cloud service, which means more control, lower latency, and no surprise bills for API calls. Whether you're working on a privacy-focused application or just want something that runs offline, SmolLM can keep up.

A big complaint among developers working with LLMs is how bloated things have become. Between loading times, prompt optimization tricks, and keeping track of context limits, you're doing more meta-work than actual work. SmolLM doesn’t drag you through that. You spin it up, and it’s ready to go. That’s refreshing.

The model works with tooling most developers already use: it loads through the Hugging Face Transformers library, and converted builds run in local runtimes such as llama.cpp. Integrating it into existing tools doesn't turn into a weekend project, and you're not stuck dealing with some obscure framework or re-learning how to write prompts. That saves time, not just during setup but every time you touch the code later on.

You also get faster iterations. Since it responds quicker and consumes fewer resources, testing ideas becomes more fluid. Want to build a chatbot and test ten different tones of voice? You can actually do that without overheating your machine or waiting around. That kind of feedback loop makes development smoother. You experiment more because you can afford to, not because you're trying to squeeze out the last ounce of productivity before your GPU catches fire.
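A tone comparison like the one described above can be a short loop. The following is a minimal sketch: `generate` stands for whatever function sends a prompt to your local model and returns text, and the tone list, prompt template, and helper names are all illustrative assumptions.

```python
import time

TONES = ["friendly", "formal", "playful"]


def build_prompt(tone: str, message: str) -> str:
    """Wrap the user message in a simple instruction that sets the tone."""
    return f"Reply to the user in a {tone} tone.\nUser: {message}\nAssistant:"


def compare_tones(generate, message, tones=TONES):
    """Run one prompt per tone and record the reply and wall-clock latency."""
    results = []
    for tone in tones:
        start = time.perf_counter()
        reply = generate(build_prompt(tone, message))
        elapsed = time.perf_counter() - start
        results.append({"tone": tone, "reply": reply, "seconds": elapsed})
    return results


if __name__ == "__main__":
    # Stand-in generator so the sketch runs without a model installed.
    def fake_generate(prompt: str) -> str:
        return f"(model output for: {prompt.splitlines()[0]})"

    for row in compare_tones(fake_generate, "Where is my order?"):
        print(f'{row["tone"]:>9}  {row["seconds"] * 1000:.2f} ms  {row["reply"]}')
```

Swapping `fake_generate` for a real model call gives you replies and per-tone latency side by side, which is usually enough to decide which variants deserve a closer look.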

Behind the scenes, SmolLM uses a compact transformer architecture with far fewer parameters than mainstream models, which cuts down on the heavy lifting at inference time. That's a big part of how it stays nimble. It's not just a small model; it's a smartly designed one. That difference matters.

Quantization helps too. Quantized builds store the weights at lower precision, which reduces the size of the model without wrecking its accuracy. Normally, when you hear "quantized," you expect the output to get choppy or lose detail. But SmolLM's output holds up reasonably well under moderate compression, especially for everyday tasks such as summarization, question answering, or basic conversation generation. More aggressive quantization levels can cost noticeable quality on a model this small, so it's worth comparing a couple of levels on your own prompts.
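To make the idea concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It illustrates the general technique only and is not the scheme any particular SmolLM build uses; real formats typically quantize in small blocks and often go below 8 bits. The function names and sample weights are made up for the example.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in quantized]


if __name__ == "__main__":
    weights = [0.82, -1.27, 0.03, 0.40, -0.55]  # illustrative values
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_error = max(abs(a - b) for a, b in zip(weights, restored))

    print("stored integers:", q)
    print("scale:", scale)
    print("largest round-trip error:", max_error)
    # float32 uses 4 bytes per weight; int8 uses 1 (plus one scale per tensor).
    print("bytes as float32:", 4 * len(weights), "| bytes as int8:", len(weights))
```

Each restored weight lands within half a quantization step of the original, while storage drops from four bytes per weight to one. That is the trade the paragraph above describes: a small, bounded loss of precision in exchange for a much smaller file.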

Then there's token handling. Because the model is small, each token costs far less computation than it would on a larger model, which plays a significant role in speed, especially with long or complex inputs. You still need to keep prompts within the model's context window, but you spend less time trimming them just to keep latency tolerable.

Lastly, it doesn't rely on external APIs or cloud-based runtime layers to maintain efficiency. That means once you’ve got it set up, it's self-contained. It won’t surprise you with updates that break compatibility or require some random dependency to be reinstalled.

If you're ready to give it a shot, setting up SmolLM is surprisingly direct. Here’s a simplified overview of how to get started:

You'll first need to download the model weights. These are published on the Hugging Face Hub. If you plan to run a quantized build, make sure you grab the right quantization level for your device.

Depending on your setup, you can use a runtime like llama.cpp, which is built on the ggml tensor library, to run the model locally. It expects weights in the GGUF format, is optimized for CPU inference, and can offload work to a GPU where supported.

Most backends will provide a simple CLI or Python wrapper to load the model. Point it to the downloaded weights, and it should spin up fairly quickly.

Once loaded, try a basic prompt to see how it responds. You can test things like summarizing a paragraph or generating a quick response. If you're happy with the speed and quality, you're good to go.
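The load-and-prompt steps above can be sketched with the llama-cpp-python bindings. This is a minimal sketch, assuming that package is installed and that you already have a GGUF build of the model on disk. The path is taken from the command line, and the helper names `load_model` and `summarize` are illustrative, not part of any library.

```python
import sys


def load_model(model_path: str, n_ctx: int = 2048):
    """Load a GGUF model file from disk."""
    # Imported lazily so the helper below works without the package installed.
    from llama_cpp import Llama  # pip install llama-cpp-python

    return Llama(model_path=model_path, n_ctx=n_ctx, verbose=False)


def summarize(llm, paragraph: str, max_tokens: int = 64) -> str:
    """Ask the model for a one-sentence summary and return the plain text."""
    prompt = (
        "Summarize the following paragraph in one sentence.\n\n"
        f"{paragraph}\n\nSummary:"
    )
    # llm can be a llama_cpp.Llama instance or any callable with the same shape.
    result = llm(prompt, max_tokens=max_tokens, temperature=0.2)
    return result["choices"][0]["text"].strip()


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python quick_test.py /path/to/your-model.gguf
    llm = load_model(sys.argv[1])
    text = (
        "Small language models trade some accuracy for speed. "
        "They run on modest hardware and respond quickly, "
        "which makes them practical for local applications."
    )
    print(summarize(llm, text))
```

Keeping the prompt logic in its own function means you can check it against a stand-in callable first, then point it at the real model once the weights are downloaded.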

From here, you can plug it into a chatbot, use it for preprocessing tasks, or wrap it inside an API for more structured use. Since it's light, it won't hold your stack back.
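As one way to wrap the model inside an API, here is a minimal sketch that uses only Python's standard library. The `/generate` route, the JSON field names, and the `generate` callable (any function that maps a prompt string to a completion string) are assumptions made for illustration; a production service would likely use a proper web framework and add authentication and timeouts.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle_generate(body: bytes, generate):
    """Validate a JSON request body and call the model. Returns (status, payload)."""
    try:
        data = json.loads(body or b"{}")
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400, {"error": "body must be valid JSON"}
    prompt = data.get("prompt") if isinstance(data, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        return 400, {"error": "'prompt' must be a non-empty string"}
    return 200, {"completion": generate(prompt)}


def make_handler(generate):
    """Build a request handler class bound to the given generate function."""

    class Handler(BaseHTTPRequestHandler):
        def do_POST(self):
            if self.path != "/generate":
                status, payload = 404, {"error": "not found"}
            else:
                length = int(self.headers.get("Content-Length", 0))
                status, payload = handle_generate(self.rfile.read(length), generate)
            out = json.dumps(payload).encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(out)))
            self.end_headers()
            self.wfile.write(out)

    return Handler


def serve(generate, host: str = "127.0.0.1", port: int = 8000):
    """Start a blocking local server; stop it with Ctrl+C."""
    HTTPServer((host, port), make_handler(generate)).serve_forever()
```

Calling `serve(my_generate)` starts a local endpoint that accepts `POST /generate` with a body like `{"prompt": "..."}`. Request validation lives in `handle_generate`, separate from the HTTP plumbing, so it can be tested without opening a socket.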

SmolLM isn’t trying to be the biggest model on the block. And that’s exactly why it works. It runs fast, delivers meaningful output, and doesn’t get in your way. You don’t need specialized hardware or a giant cloud bill to use it. And while it won’t replace the heavyweight models for everything, it covers more ground than you might think—for far less effort.

For anyone who’s tired of fighting with bloated models or just wants something that responds fast and plays well with local tools, SmolLM is worth a serious look. It’s not flashy, but it gets the job done—quietly, quickly, and without much fuss.
