Advertisement

Over the past year, the pace of progress in language models has been relentless. Much of the spotlight has focused on closed commercial systems. But while those models dominate headlines, a different kind of progress is happening in the open-source world. MosaicML’s MPT-7B and MPT-30B are a direct response to the need for large, capable models that anyone can inspect, deploy, or fine-tune on their hardware. These two models show how far open-source LLM technology has come, and they're a strong sign of where it's going next.

MPT, short for "Mosaic Pretrained Transformer," follows the architectural foundations laid by earlier transformer-based models but adds its updates. MPT-7B is a 7-billion parameter model trained to handle a wide range of general language tasks, while MPT-30B scales that up significantly with 30 billion parameters, enabling more advanced comprehension and generation capabilities.

Unlike many open-source models that are only pre-trained, both versions of MPT were designed with fine-tuning in mind. This gives developers and researchers more freedom. Whether you need a chat interface, code generation, or summarization, you can adjust the model to suit the task. MosaicML made this easier by releasing variations like MPT-7B-Instruct and MPT-7B-StoryWriter, which show how the base model can be shaped for different purposes.

One of the most important differences between MPT and other models is the attention to how the models were trained. MosaicML focused on efficiency. MPT models were trained using FlashAttention, a technique that reduces memory overhead while maintaining high throughput. This allows for longer context windows—up to 65,000 tokens in some cases—without a dramatic increase in hardware requirements. That extended context makes these models particularly useful for long-form documents or large datasets, something earlier models struggled to handle well.

At their core, both MPT-7B and MPT-30B follow a decoder-only transformer architecture similar to what is used in GPT models. However, MosaicML didn't just copy existing designs. The training dataset for MPT was broad and included a mix of Common Crawl, Wikipedia, books, and code, giving the models a better balance between general language understanding and domain-specific use.

The pretraining process was also optimized to be more efficient. MPT models were trained on the MosaicML Cloud, allowing for rapid iteration and greater control over the computing environment. FlashAttention is one key factor behind this efficiency. Instead of processing all token pairs in full, it uses a kernel-level approach to compute attention more quickly and with reduced memory usage. The result is a model that can manage longer inputs and still run on more accessible hardware.

This attention to practicality extends to the deployment side as well. MPT models are fully open-source under a permissive license (Apache 2.0), and they come with documentation, inference scripts, and training recipes. That level of openness is rare in a landscape where many high-performing models come with restrictive terms or are locked behind APIs.

In terms of real-world performance, MPT-7B performs competitively with other 7B models like LLaMA and Falcon. MPT-30B, though newer and less tested, is showing strong early results across a range of tasks. Its ability to handle complex instructions, maintain context over long spans, and adapt to fine-tuning makes it suitable for enterprise use, research, and creative applications. The larger model shows especially strong performance in zero-shot and few-shot tasks—areas where smaller models often falter.

Since their release, MPT-7B and MPT-30B have been adopted across several domains. Startups use MPT-7B-Instruct to power chatbots, customer service tools, and virtual assistants. Educational groups are testing the model in learning tools that summarize material or generate practice questions. Developers working on code generation tools benefit from fine-tuning models on repositories in languages such as Python, JavaScript, and SQL.

Open access to the models has driven more than adoption—it’s sparked a growing ecosystem of tools and experiments. Users are building fine-tuned versions, benchmarking them across academic tasks, and integrating them with platforms like LangChain and Hugging Face. This strengthens the models, as feedback loops help uncover edge cases, weaknesses, and areas where fine-tuning works best.

Another major appeal of MPT models is flexibility across hardware. While some models need high-end GPU clusters, MPT-7B is optimized for single-node inference. This means users with consumer-grade setups can still experiment, deploy, or build applications. It expands access and lets smaller teams or individuals take part in LLM development.

Security and safety matter, too. MosaicML has provided tools to manage output, reduce toxic content, and adjust model behavior through reinforcement learning or rule-based filters. These don’t solve everything but give developers a solid foundation instead of starting from scratch.

MPT-7B and MPT-30B are not the final word in open-source LLMs, but they raise the bar. They prove that it’s possible to combine scale, flexibility, and accessibility without needing massive budgets or proprietary walls. Their existence also pressures other model developers—especially those in the open-source space—to be more thoughtful about documentation, training transparency, and licensing.

As attention shifts toward models that can handle multimodal inputs, reason over long-term contexts, or operate with fewer resources, the lessons from MPT are likely to influence future designs. The continued development of training tools like Composer and streaming datasets built by MosaicML suggests that the ecosystem is growing in ways that encourage sustainable progress, not just bigger models.

Open-source LLM technology is becoming less of a rough alternative and more of a serious contender. What MPT-7B and MPT-30B have shown is that you don’t need to sacrifice performance to gain openness. And with communities of developers already building on them, these models might help shift the AI conversation from competition to collaboration.

MPT-7B and MPT-30B mark a shift in open-source AI by combining strong performance with real usability. These models are adaptable, transparent, and free from heavy restrictions, making them accessible to researchers, developers, and smaller teams. Their design supports meaningful work without large budgets, opening AI development to a broader audience. As demand for large language models grows, MPT helps ensure open access remains part of the future.

Advertisement

Explore the different Python exit commands including quit(), exit(), sys.exit(), and os._exit(), and learn when to use each method to terminate your program effectively

How does Docmatix reshape document understanding for machines? See why this real-world dataset with diverse layouts, OCR, and multilingual data is now essential for building DocVQA systems

How AI is changing the future of work, who controls its growth, and the hidden role venture capital plays in shaping its impact across industries

Snowflake's acquisition of Neeva boosts enterprise AI with secure generative AI platforms and advanced data interaction tools

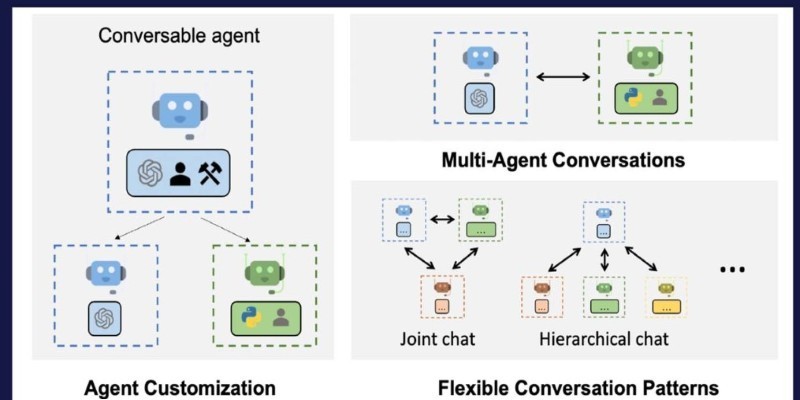

How Building Multi-Agent Framework with AutoGen enables efficient collaboration between AI agents, making complex tasks more manageable and modular

How LLMs and BERT handle language tasks like sentiment analysis, content generation, and question answering. Learn where each model fits in modern language model applications

Ready to make computers see like humans? Learn how to get started with OpenCV—install it, process images, apply filters, and build a real foundation in computer vision with just Python

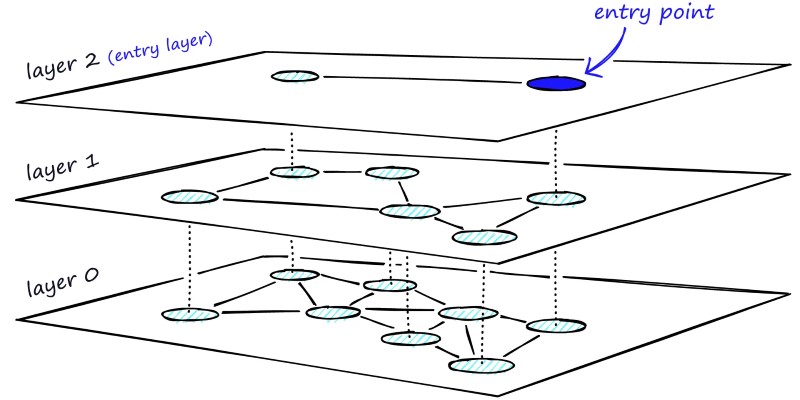

Learn how HNSW enables fast and accurate approximate nearest neighbor search using a layered graph structure. Ideal for recommendation systems, vector search, and high-dimensional datasets

AI saved Google from facing an antitrust breakup, but the trade-offs raise questions. Explore how AI reshaped Google’s future—and its regulatory escape

How using open-source AI models can give your startup more control, lower costs, and a faster path to innovation—without relying on expensive black-box systems

How IBM and L’Oréal are leveraging generative AI for cosmetics to develop safer, sustainable, and personalized beauty solutions that meet modern consumer needs

How does an AI assistant move from novelty to necessity? OpenAI’s latest ChatGPT update integrates directly with Microsoft 365 and Google Workspace—reshaping how real work happens across teams