Getting models trained at scale doesn't have to be slow or complicated. NVIDIA DGX Cloud gives you access to H100 GPUs without needing your own hardware setup. No server racks, no hardware upgrades, and no procurement delays. Just fast, stable infrastructure through a browser or terminal. Whether you're training a large model, refining a smaller one, or running experiments, the process becomes quicker and more focused, with less downtime and shorter queue times. If you're building modern AI systems, being able to train models easily on H100 GPUs in NVIDIA DGX Cloud makes a real difference in speed and efficiency.

NVIDIA DGX Cloud brings serious hardware within reach. H100 GPUs are built for large-scale AI work. They offer improvements in throughput, energy efficiency, and memory handling over previous GPU generations. With hardware support for Transformer workloads (the Transformer Engine) and faster interconnects, they're tuned for today's deep learning workloads: LLMs, diffusion models, and vision-language tasks all benefit from this setup.

Each DGX Cloud node typically includes eight H100s, pre-configured for deep learning workloads. Everything’s optimized out of the box, and you don’t need to mess with dependencies or drivers. The NVIDIA software stack handles it—containers, frameworks, and management tools, such as Base Command, are all included.

This matters because models have grown. Many go well beyond 10 billion parameters, and training them on older or shared hardware can feel like dragging a boulder uphill. DGX Cloud gives you consistent access to resources, which means fewer interruptions and more useful time spent developing.
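To make that scale concrete, here is a rough back-of-the-envelope sketch of the memory needed just to hold the training state of a 10-billion-parameter model. It assumes the commonly cited figure of about 16 bytes per parameter for mixed-precision training with Adam (2 for 16-bit weights, 2 for 16-bit gradients, 12 for FP32 master weights and the two optimizer moments) and the 80 GB H100 variant, and it ignores activations and framework overhead. The function and constant names are illustrative, not part of any NVIDIA tool.

```python
import math

# Assumed rule of thumb: 16-bit weights (2) + 16-bit gradients (2)
# + FP32 master weights and two Adam moments (12) = 16 bytes per parameter.
BYTES_PER_PARAM = 2 + 2 + 12
GPU_MEMORY_GB = 80   # assumed: 80 GB H100 variant
GPUS_PER_NODE = 8    # from the text: eight H100s per node


def training_state_gb(num_params: int) -> float:
    """Memory for weights, gradients, and optimizer states, in GB (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM / 1e9


def min_gpus_needed(num_params: int) -> int:
    """Smallest GPU count whose combined memory holds the training state."""
    return max(1, math.ceil(training_state_gb(num_params) / GPU_MEMORY_GB))


params = 10_000_000_000
print(f"training state: {training_state_gb(params):.0f} GB")
print(f"GPUs needed (state only): {min_gpus_needed(params)}")
print(f"fits in one node: {min_gpus_needed(params) <= GPUS_PER_NODE}")
```

Under these assumptions the training state alone exceeds a single GPU's memory, which is why multi-GPU nodes matter even before activations are counted.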

The hardware is matched with clean software integration. You can run workloads directly on the platform without switching tools or converting formats. PyTorch, TensorFlow, JAX, and Hugging Face Transformers all run well on this stack. The system’s reliability also reduces failed runs, and that stability becomes more valuable the more complex your model gets.

What makes it easy to train models with H100 GPUs on NVIDIA DGX Cloud isn't just the hardware; it's how much time and effort the platform saves. It reduces setup, testing, and scaling delays. You can launch your workspace quickly and keep your models training without interruption.

For most teams, it's not just one model being trained. It's a mix of architectures, parameter counts, and dataset combinations. You might be comparing finetunes, trying new tokenizers, or running ablations. DGX Cloud supports that kind of work by offering predictable performance and simple environment cloning.
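A mix of ablations like this is often organized as a grid of configurations. The sketch below shows one simple way to enumerate such a grid in plain Python; the option names (`learning_rate`, `tokenizer`, `use_dropout`) are hypothetical, and how each configuration would be submitted as a job on DGX Cloud is outside its scope.

```python
from itertools import product


def build_grid(options: dict) -> list:
    """Return one config dict per combination of the option values."""
    keys = sorted(options)  # fixed key order keeps run numbering reproducible
    combos = product(*(options[key] for key in keys))
    return [dict(zip(keys, values)) for values in combos]


# Hypothetical ablation axes, for illustration only.
grid = build_grid({
    "learning_rate": [1e-4, 3e-4],
    "tokenizer": ["bpe", "unigram"],
    "use_dropout": [True, False],
})

for run_id, config in enumerate(grid):
    print(run_id, config)
```

Because every run is described by a plain dictionary, the same list can be logged next to checkpoints so that results stay traceable to their settings.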

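The H100s support FP8 and mixed-precision training, which allows for faster computation and reduced memory use, usually with little or no loss in model quality when the precision recipe is configured carefully. For transformer-heavy architectures, this can in favorable cases come close to doubling effective throughput relative to 16-bit precision on the same hardware. That means you can test more ideas in less time and still keep training costs down.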
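Lower precision preserves quality only if small values are not lost, and scaling is one common way to protect them. The sketch below illustrates the general idea with IEEE half precision (FP16), which Python's `struct` module can emulate through the `"e"` format. It is an illustration of why scaling helps, not a description of how FP8 is implemented on the H100; the `LOSS_SCALE` value is a hypothetical choice, and in practice training libraries manage scaling for you.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to IEEE half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


LOSS_SCALE = 1024.0   # hypothetical scale factor
tiny_grad = 1e-8      # a gradient too small for FP16 to represent

# Stored directly in FP16, the value underflows to zero.
unscaled = to_fp16(tiny_grad)

# Scaled up first, it survives the cast; unscaling happens in full precision.
scaled = to_fp16(tiny_grad * LOSS_SCALE)
recovered = scaled / LOSS_SCALE

print("without scaling:", unscaled)
print("with scaling:   ", recovered)
```

The recovered value is not exact, but it stays close to the original instead of vanishing, which is the property mixed-precision recipes rely on.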

Scalability is built in. Whether you're testing a few models or scaling up for a full release, you can size the allocation to the job. If you need more compute, you scale out. If you're done, you scale down. There's no need to plan months in advance or fight over GPU reservations.
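When a data-parallel job scales out or down, one practical detail is keeping the global batch size fixed so results stay comparable. A minimal sketch, assuming plain data parallelism with gradient accumulation; the batch sizes and the function name are illustrative, not taken from any DGX Cloud tooling.

```python
def accumulation_steps(global_batch: int, per_gpu_batch: int, num_gpus: int) -> int:
    """Gradient-accumulation steps needed to reach the target global batch."""
    per_step = per_gpu_batch * num_gpus  # samples processed per forward/backward pass
    if global_batch % per_step != 0:
        raise ValueError(
            f"global batch {global_batch} is not a multiple of {per_step}"
        )
    return global_batch // per_step


# Hypothetical target: 1024 samples per optimizer step, 16 samples per GPU.
for gpus in (8, 16, 32):
    steps = accumulation_steps(global_batch=1024, per_gpu_batch=16, num_gpus=gpus)
    print(f"{gpus} GPUs -> {steps} accumulation steps")
```

Doubling the GPU count halves the accumulation steps, so the optimizer sees the same effective batch while each step finishes sooner.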

The platform also supports shared workspaces. Team members can run experiments from different locations without syncing files manually. Logs, checkpoints, and settings stay available across sessions. This speeds up review, troubleshooting, and iteration.
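For checkpoints to stay usable across sessions and teammates, training scripts usually write them in a way that never leaves a half-written file behind and makes the latest one easy to find. The sketch below shows that pattern with the standard library only. The directory layout and file naming are hypothetical, JSON stands in for a real framework checkpoint format, and the rename is only atomic when the temporary and final files share a filesystem, which may behave differently on some network storage.

```python
import json
import os
import tempfile
from pathlib import Path


def save_checkpoint(directory: Path, step: int, state: dict) -> Path:
    """Write a checkpoint via a temporary file, then rename it into place."""
    directory.mkdir(parents=True, exist_ok=True)
    final_path = directory / f"step_{step:08d}.json"  # zero-padded so names sort by step
    tmp_path = final_path.with_suffix(".tmp")
    tmp_path.write_text(json.dumps({"step": step, "state": state}))
    os.replace(tmp_path, final_path)  # readers never see a partial file
    return final_path


def load_latest(directory: Path):
    """Return the newest checkpoint's contents, or None if there is none."""
    if not directory.is_dir():
        return None
    candidates = sorted(directory.glob("step_*.json"))
    if not candidates:
        return None
    return json.loads(candidates[-1].read_text())


# Demo in a throwaway directory standing in for a shared workspace path.
with tempfile.TemporaryDirectory() as workspace:
    ckpt_dir = Path(workspace) / "checkpoints"
    save_checkpoint(ckpt_dir, 500, {"loss": 2.31})
    save_checkpoint(ckpt_dir, 1000, {"loss": 1.87})
    latest = load_latest(ckpt_dir)
    print("resuming from step", latest["step"])
```

A resumed session only needs the directory path to pick up where the previous one stopped.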

High-performance training used to mean buying expensive hardware, building clusters, and maintaining them. DGX Cloud changes that. You use what you need when you need it. No capital expense and no repair cycles. This shift toward usage-based infrastructure means more teams can access high-end resources without big commitments.

Collaboration becomes easier when everything runs in the same place. Whether your team is small or distributed, everyone sees the same logs, checkpoints, and environments. That makes debugging and reviewing faster. DGX Cloud also integrates with common workflow tools, so there's no need to build a custom stack just to track progress.

Security and governance are often overlooked during model training, but they’re part of the DGX Cloud package. You can control access, review usage, and connect to secure storage if needed. This helps when your data is sensitive or when you’re working in a regulated space.

From a framework point of view, the platform is open. You're not locked into one ecosystem. Models trained locally can be scaled up on DGX Cloud with minimal change. Whether you're using plain PyTorch, tools like Hugging Face Optimum, or full-stack MLOps solutions, everything is designed to plug in with little rework.
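One way scripts achieve that "minimal change" is by reading their place in a distributed job from the environment instead of hard-coding it. The sketch below assumes the `RANK` and `WORLD_SIZE` variables that launchers such as PyTorch's `torchrun` set, and falls back to a single process when they are absent. How jobs are actually launched on DGX Cloud is not specified here, and the helper names are illustrative.

```python
import os


def distributed_context(env=os.environ) -> tuple:
    """Return (rank, world_size), defaulting to a single local process."""
    rank = int(env.get("RANK", 0))
    world_size = int(env.get("WORLD_SIZE", 1))
    return rank, world_size


def shard(items: list, rank: int, world_size: int) -> list:
    """Give each process an interleaved, non-overlapping slice of the data."""
    return items[rank::world_size]


rank, world_size = distributed_context()
samples = list(range(10))  # stand-in for dataset indices
print(f"process {rank} of {world_size} handles {shard(samples, rank, world_size)}")
```

Run on a laptop with no launcher, the script processes every sample; started under a multi-process launcher, each process takes its own slice without any code changes.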

The containers available through NGC are tuned for the H100s, which gives an added performance boost without needing deep hardware knowledge. You bring the model, they provide the muscle.

There are times when every hour counts—submissions, proofs-of-concept, or last-minute changes. DGX Cloud helps by staying consistent and fast. You don’t wait in line, restart failed jobs, or tweak memory limits to make things fit.

If you’re training vision models with large backbones or working on LLMs with instruction tuning, the speed from H100s affects your schedule. It’s not just about faster epochs; it's about better throughput and fewer dropped runs. You can train longer or test more ideas within the same time and budget.

For retrieval-augmented generation or fine-tuning variants, that kind of speed brings clearer results, quicker decisions, and more productive cycles. Researchers building datasets, labs reviewing outputs, and companies testing LLM logic all benefit from less idle time.

The value becomes clear during iterative training. If you're running hundreds of short experiments or swapping datasets daily, a fast, stable platform avoids downtime. You don’t waste time waiting or rewriting code to fit limits.

This is one reason teams training large models turn to platforms like DGX Cloud. Fast hardware combined with solid infrastructure leaves room to experiment without getting blocked.

If you're focused on training deep learning models efficiently, training on H100 GPUs in NVIDIA DGX Cloud removes much of the friction. You get fast hardware, a pre-tuned software stack, and support for both small experiments and large-scale runs. No hardware setup and no GPU wait times. Whether you're solo or in a team, it streamlines AI development and lets you move faster without compromising on results.

Advertisement

How a machine learning algorithm uses wearable technology data to predict mood changes, offering early insights into emotional well-being and mental health trends

Learn the top 5 AI change management strategies and practical checklists to guide your enterprise transformation in 2025.

Accelerate AI with AWS GenAI tools offering scalable image creation and model training using Bedrock and SageMaker features

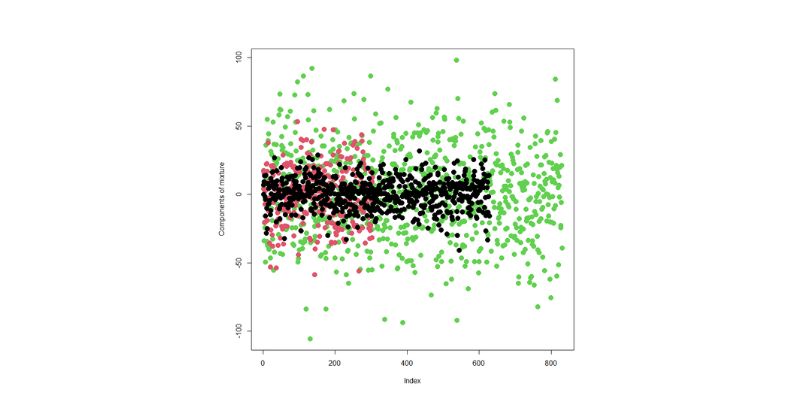

Learn how integrating feature selection into model estimation improves accuracy, reduces noise, and boosts efficiency in ML

How IBM and L’Oréal are leveraging generative AI for cosmetics to develop safer, sustainable, and personalized beauty solutions that meet modern consumer needs

Learn how to install, configure, and run Apache Flume to efficiently collect and transfer streaming log data from multiple sources to destinations like HDFS

Writer unveils a new AI platform empowering businesses to build and deploy intelligent, task-based agents.

How does an AI assistant move from novelty to necessity? OpenAI’s latest ChatGPT update integrates directly with Microsoft 365 and Google Workspace—reshaping how real work happens across teams

Nvidia is set to manufacture AI supercomputers in the US for the first time, while Deloitte deepens agentic AI adoption through partnerships with Google Cloud and ServiceNow

How serverless GPU inference is transforming the way Hugging Face users deploy AI models. Learn how on-demand, GPU-powered APIs simplify scaling and cut down infrastructure costs

An AI health care company is transforming diagnostics by applying generative AI in radiology, achieving a $525M valuation while improving accuracy and supporting clinicians

How Edge AI is reshaping how devices make real-time decisions by processing data locally. Learn how this shift improves privacy, speed, and reliability across industries