
Image classification is one of the most common tasks in computer vision. The CIFAR-10 dataset has been a standard benchmark because it contains 60,000 color images spread across ten clear categories like airplane, automobile, bird, and cat. Each image is small, just 32×32 pixels, yet diverse enough to make the challenge meaningful.

A Convolutional Neural Network (CNN) is highly effective for classifying these images. CNNs learn to recognize visual patterns such as edges, textures, and shapes directly from pixel data, an approach loosely inspired by how biological vision works. This article walks through building, training, and testing a CNN to classify CIFAR-10 images.

The CIFAR-10 dataset includes 50,000 training images and 10,000 test images, equally distributed among ten categories, so each category has 5,000 training images and 1,000 test images. Each is a 32×32 color image with three channels: red, green, and blue. The small image size makes the dataset quick to experiment with, yet it is challenging enough to evaluate how well an image classifier performs.

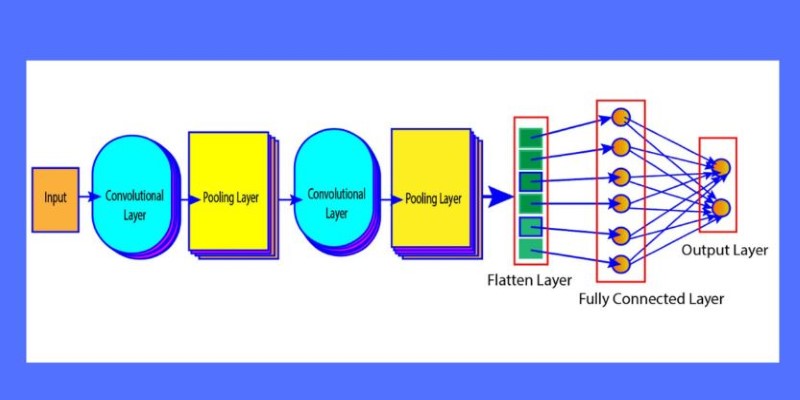

CNNs work well for image tasks because they capture spatial hierarchies of patterns, starting with edges and textures, then combining them into more complex shapes. A typical CNN consists of convolutional layers, pooling layers, and fully connected layers. Convolutional layers slide small filters across the image to detect features. Pooling layers reduce the dimensions of these feature maps while keeping the most significant information. Finally, fully connected layers take this extracted information and produce predictions. CNNs are particularly suited to handle the compact, low-resolution images in CIFAR-10 because they can still extract meaningful features from limited pixel data.
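To make the convolution and pooling steps concrete, here is a minimal pure-Python sketch. The toy 6×6 image and the hand-picked vertical-edge filter are illustrative assumptions; a trained CNN learns its filter values, and real frameworks run the same operation far more efficiently.

```python
def conv2d_valid(image, kernel):
    """Slide a kernel over a 2D image with stride 1 and no padding.

    As in most deep learning libraries, the kernel is not flipped
    (strictly speaking this is cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


def relu(feature_map):
    """Replace negative responses with zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; leftover rows and columns are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy image (assumption): dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hand-picked vertical-edge filter (assumption, not a learned filter).
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

features = relu(conv2d_valid(image, kernel))  # 4x4 map, strongest at the edge
pooled = max_pool2d(features)                 # 2x2 summary of that map

print(features)
print(pooled)
```

The feature map responds only where the filter straddles the dark-to-bright boundary, and pooling halves each spatial dimension while keeping that response.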

To classify CIFAR-10 images with a CNN, start by loading the dataset. Libraries such as TensorFlow and PyTorch provide built-in access to CIFAR-10, which saves setup time. Normalizing the pixel values to fall between 0 and 1 by dividing by 255 keeps the inputs on a small, consistent scale, which tends to make gradient-based training more stable. Splitting part of the training set into a validation set allows you to measure how the model generalizes during training.
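The normalization and validation split can be sketched without any framework. The example below uses randomly generated images as a stand-in for CIFAR-10, and the helper names `normalize` and `train_val_split`, along with the 10% validation fraction, are assumptions chosen for illustration.

```python
import random


def normalize(image):
    """Scale 8-bit pixel values (0-255) into the range [0, 1]."""
    return [[[channel / 255.0 for channel in pixel] for pixel in row]
            for row in image]


def train_val_split(samples, val_fraction=0.1, seed=0):
    """Shuffle a copy of the samples, then hold out a fraction for validation."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


# Synthetic stand-in for CIFAR-10 (assumption): 100 random 32x32 RGB images
# with integer labels from 0 to 9.
rng = random.Random(42)
dataset = [
    ([[[rng.randint(0, 255) for _ in range(3)] for _ in range(32)]
      for _ in range(32)],
     rng.randrange(10))
    for _ in range(100)
]

normalized = [(normalize(img), label) for img, label in dataset]
train_set, val_set = train_val_split(normalized, val_fraction=0.1)

print(len(train_set), len(val_set))
```

Shuffling before the split matters when the source data is ordered by class; a fixed seed keeps the split reproducible between runs.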

The next step is designing the CNN architecture. A good starting point is a simple yet effective layout. Begin with a convolutional layer using 32 filters of size 3×3 with ReLU activation, followed by a pooling layer to downsample the output. Adding another pair of convolutional and pooling layers helps capture more complex features. After these layers, flatten the output and connect it to one or more fully connected layers, ending with a softmax layer to output probabilities for the ten classes.
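It helps to trace how the tensor shape and parameter count change through this layout. The sketch below does that arithmetic in plain Python. Only the first layer (32 filters of size 3×3) comes from the description above; the unpadded convolutions, 2×2 pooling, 64 filters in the second convolution, and a 64-unit dense layer are assumptions picked to complete the example.

```python
def conv_output(h, w, c_in, filters, k=3):
    """Shape and parameter count of a stride-1, unpadded k x k convolution."""
    params = (k * k * c_in + 1) * filters  # weights plus one bias per filter
    return (h - k + 1, w - k + 1, filters), params


def pool_output(h, w, c, size=2):
    """Shape after non-overlapping max pooling (pooling has no parameters)."""
    return (h // size, w // size, c)


def dense_params(n_in, n_out):
    """Weights plus one bias per output unit."""
    return (n_in + 1) * n_out


shape = (32, 32, 3)  # one CIFAR-10 image: height, width, channels
summary = []         # (layer name, output shape, parameter count)

shape, params = conv_output(*shape, filters=32)  # from the text
summary.append(("conv 3x3, 32 filters + ReLU", shape, params))
shape = pool_output(*shape)
summary.append(("max pool 2x2", shape, 0))

shape, params = conv_output(*shape, filters=64)  # 64 filters: assumption
summary.append(("conv 3x3, 64 filters + ReLU", shape, params))
shape = pool_output(*shape)
summary.append(("max pool 2x2", shape, 0))

flat = shape[0] * shape[1] * shape[2]
summary.append(("flatten", (flat,), 0))
summary.append(("dense 64 + ReLU", (64,), dense_params(flat, 64)))  # assumption
summary.append(("dense 10 + softmax", (10,), dense_params(64, 10)))

total_params = sum(p for _, _, p in summary)

for name, out_shape, p in summary:
    print(f"{name:<30} {str(out_shape):<16} {p:>8}")
print("total parameters:", total_params)
```

Under these assumptions most of the parameters sit in the first dense layer, which is one reason extra pooling or fewer dense units can shrink a model considerably.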

You can improve this architecture using techniques like dropout, which randomly disables a fraction of neurons on each training step to reduce overfitting. Batch normalization is also helpful for stabilizing and speeding up training. Once the architecture is set, compile the model using a loss function suited for multi-class classification, like categorical crossentropy (or its sparse variant when labels are integer class indices rather than one-hot vectors), and an optimizer like Adam, which adapts the step size for each parameter using running estimates of the gradient's mean and variance.
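Two of these pieces, the softmax plus categorical crossentropy loss and dropout, are small enough to write out by hand. The following is a minimal sketch, assuming made-up scores for ten classes and the common "inverted" form of dropout that rescales the surviving activations; frameworks provide tested versions of both.

```python
import math
import random


def softmax(logits):
    """Convert raw scores to probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_crossentropy(probs, one_hot, eps=1e-12):
    """Negative log-probability that the model assigns to the true class."""
    return -sum(t * math.log(p + eps) for p, t in zip(probs, one_hot))


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate`, rescale the rest."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


# Made-up scores for ten classes (assumption); the true class is index 0.
logits = [2.0, 0.5, -1.0] + [0.0] * 7
target = [1] + [0] * 9

probs = softmax(logits)
loss = categorical_crossentropy(probs, target)

# Dropout is active only during training; at inference it passes values through.
hidden = [1.0] * 8
dropped = dropout(hidden, rate=0.5, rng=random.Random(0))
unchanged = dropout(hidden, rate=0.5, rng=random.Random(0), training=False)

print(round(loss, 4), dropped)
```

Rescaling by `1 / keep` during training keeps the expected activation the same, so no adjustment is needed when dropout is switched off for prediction.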

Training the model involves feeding it batches of images for several epochs. During each epoch, the model updates its weights to minimize the loss on the training data. After each epoch, check performance on the validation set to monitor progress. Once training finishes, test the model on the reserved test set to see how well it performs in practice.
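The epoch and batch structure is the same whatever the model. As a sketch, the loop below trains a one-variable linear model with mini-batch gradient descent in place of a CNN; the toy data, the model, the learning rate, and the batch size are all assumptions chosen so the example runs in plain Python.

```python
import random


def mse(w, b, data):
    """Mean squared error of the line y = w * x + b on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(train_data, val_data, epochs=50, batch_size=8, lr=0.1, seed=0):
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    history = []  # (training loss, validation loss) after each epoch
    for epoch in range(epochs):
        order = list(train_data)
        rng.shuffle(order)  # new batch composition every epoch
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            # Gradients of the mean squared error over this batch.
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
        # Validation data is only evaluated, never used for updates.
        history.append((mse(w, b, train_data), mse(w, b, val_data)))
    return w, b, history


# Toy data (assumption): points on the line y = 2x + 1.
data_rng = random.Random(1)
points = [(x, 2 * x + 1) for x in (data_rng.random() for _ in range(100))]
train_data, val_data = points[:80], points[80:]

w, b, history = train(train_data, val_data)
print("first epoch:", history[0])
print("last epoch: ", history[-1])
```

Watching both columns of `history` is the point: training loss that keeps falling while validation loss rises is the usual sign of overfitting.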

Even a simple CNN achieves decent accuracy on CIFAR-10, but there are ways to improve results. Data augmentation is one of the most effective approaches. With only 50,000 training images, a network with many parameters can overfit. To reduce this risk, artificially expand the dataset by applying random changes to the images during training, such as small rotations, horizontal flips, shifts, and zooms. This gives the model more variety and helps it generalize better.
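Two of these transformations, flips and shifts, can be sketched in a few lines. The example assumes a single-channel image stored as nested lists and a maximum shift of two pixels; both are illustrative choices, and the augmentation utilities in TensorFlow or PyTorch are the practical option.

```python
import random


def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def shift(image, dx, dy, fill=0):
    """Translate by (dx, dy) pixels, padding uncovered pixels with `fill`."""
    h, w = len(image), len(image[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            src_y, src_x = y - dy, x - dx
            if 0 <= src_y < h and 0 <= src_x < w:
                out[y][x] = image[src_y][src_x]
    return out


def augment(image, rng, max_shift=2):
    """Apply a random flip (50% chance) followed by a random small shift."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    dx = rng.randint(-max_shift, max_shift)
    dy = rng.randint(-max_shift, max_shift)
    return shift(image, dx, dy)


# Toy 4x4 single-channel image (assumption) so the effect is easy to read.
image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]

flipped = horizontal_flip(image)
shifted = shift(image, dx=1, dy=0)
augmented = augment(image, random.Random(0))

print(flipped[0], shifted[0])
```

Augmentation is applied only to training images, with a fresh random draw each time an image is seen; validation and test images stay untouched.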

Another way to improve accuracy is to adjust the depth and width of the CNN. Adding more convolutional layers or increasing the number of filters lets the network learn more detailed patterns. However, deeper models require more computation and can overfit if not managed properly. Well-known architectures like VGG or ResNet, which are deeper and more advanced, often outperform simpler designs on CIFAR-10 if you have the resources to train them.

Learning rate schedules are another improvement method. Instead of keeping the learning rate fixed, gradually decreasing it as training continues can help the model converge on better weights. Examining a confusion matrix after testing is useful for understanding which categories the model struggles with most. This feedback can guide further tuning of the architecture or training process.
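A learning rate schedule is just a function from the epoch number to a learning rate. Below is a sketch of two common forms, step decay and exponential decay; the starting rate of 0.001, the halving every ten epochs, and the 0.95 decay factor are assumed values for illustration, not recommendations.

```python
def step_decay(initial_lr, epoch, drop=0.5, epochs_per_drop=10):
    """Multiply the rate by `drop` once every `epochs_per_drop` epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)


def exponential_decay(initial_lr, epoch, decay_rate=0.95):
    """Shrink the rate by a constant factor every epoch."""
    return initial_lr * decay_rate ** epoch


# Assumed starting rate of 0.001 over 30 epochs.
step_schedule = [step_decay(0.001, epoch) for epoch in range(30)]
exp_schedule = [exponential_decay(0.001, epoch) for epoch in range(30)]

for epoch in (0, 9, 10, 20, 29):
    print(epoch, step_schedule[epoch], round(exp_schedule[epoch], 6))
```

Step decay holds the rate constant between drops, while exponential decay lowers it smoothly; which works better depends on the model and is usually settled on the validation set.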

After training and tuning, test the CNN on the CIFAR-10 test set of 10,000 images. The result is an unbiased estimate only if the test set played no part in training or tuning decisions. Accuracy is the most common metric, but precision, recall, and F1-score provide more detailed insight, especially if some categories are harder to classify. Basic CNNs usually reach around 70–80% accuracy, while deeper architectures can improve on this significantly.
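These metrics can all be derived from a confusion matrix, where row `t` and column `p` count the images of true class `t` predicted as class `p`. The sketch below uses ten made-up predictions over three classes as an assumption to keep the numbers readable; the same functions apply to ten classes.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """matrix[t][p] counts samples of true class t predicted as class p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def accuracy(matrix):
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def per_class_metrics(matrix):
    """Precision, recall, and F1 for each class, treating it as the positive class."""
    n = len(matrix)
    metrics = []
    for c in range(n):
        tp = matrix[c][c]
        fp = sum(matrix[r][c] for r in range(n)) - tp  # predicted c, actually other
        fn = sum(matrix[c]) - tp                       # actually c, predicted other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics.append({"precision": precision, "recall": recall, "f1": f1})
    return metrics


# Made-up labels and predictions for three classes (assumption).
y_true = [0, 0, 0, 1, 1, 1, 2, 2, 2, 2]
y_pred = [0, 1, 0, 1, 1, 0, 2, 2, 2, 1]

matrix = confusion_matrix(y_true, y_pred, num_classes=3)
metrics = per_class_metrics(matrix)

for row in matrix:
    print(row)
print("accuracy:", accuracy(matrix))
for c, m in enumerate(metrics):
    print(c, {k: round(v, 3) for k, v in m.items()})
```

Off-diagonal entries show which pairs of classes get confused, which is often more actionable than a single accuracy figure.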

Once tested, save the trained model so it can be used to classify new images. Standard formats like HDF5 or ONNX make it easy to load the model later for predictions. Deploying the model can be done locally or through a web or cloud service, depending on your application.

Monitoring the deployed model over time is a good practice. Real-world data may differ from the training data, which can lead to reduced accuracy. Retraining with more diverse or updated examples helps maintain performance.

Classifying images from the CIFAR-10 dataset with a CNN is a practical way to understand and apply image recognition methods. The dataset offers a balance of simplicity and challenge, making it suitable for learning and experimentation. CNNs handle the task well by identifying patterns and combining them into meaningful predictions. Building a clear model, training it carefully, and using techniques to improve generalization all contribute to better results. Testing on new data shows how reliable the model is outside the lab. With thoughtful design and practice, classifying CIFAR-10 images can teach you a lot about how machines learn to see.

Advertisement

Get full control over Python outputs with this clear guide to mastering f-strings in Python. Learn formatting tricks, expressions, alignment, and more—all made simple

Learn how to compare two regression models with statistical significance for accuracy, reliability, and better decision-making

How AI is changing the future of work, who controls its growth, and the hidden role venture capital plays in shaping its impact across industries

Looking for the best way to merge two lists in Python? This guide walks through ten practical methods with simple examples. Whether you're scripting or building something big, learn how to combine lists in Python without extra complexity

What's fueling the wave of tech layoffs in 2025, from overhiring during the pandemic to the rise of AI job disruption and shifting investor demands

Curious how LLMs learn to write and understand code? From setting a goal to cleaning datasets and training with intent, here’s how coding models actually come together

What's changing inside your car? A new AI platform is making in-car assistants smarter, faster, and more human-like—here's how it works

Explore the different Python exit commands including quit(), exit(), sys.exit(), and os._exit(), and learn when to use each method to terminate your program effectively

Can AI really help a Formula One team build faster, smarter cars? With real-time data crunching, simulation, and design automation, teams are transforming racing—long before the track lights go green

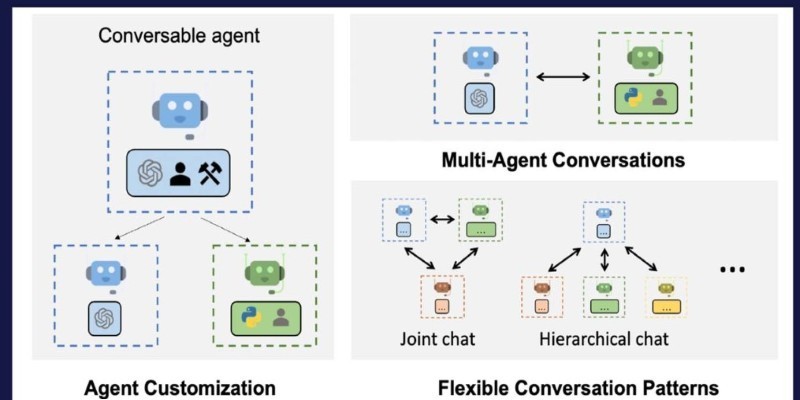

How Building Multi-Agent Framework with AutoGen enables efficient collaboration between AI agents, making complex tasks more manageable and modular

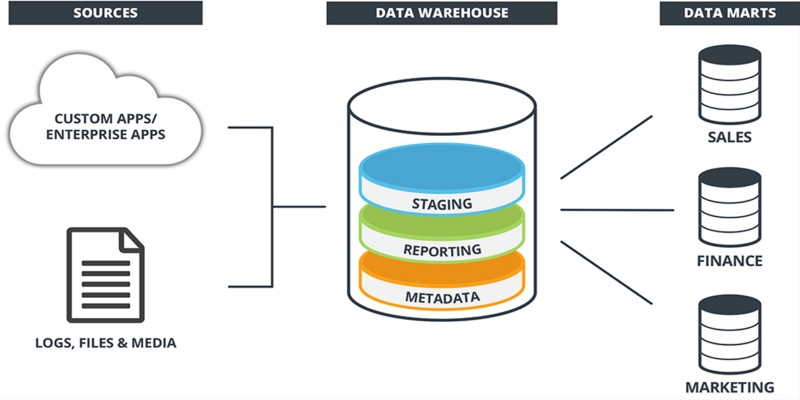

Learn what a data warehouse is, its key components like ETL and schema designs, and how it helps businesses organize large volumes of data for fast, reliable analysis and decision-making

Sam Altman returns as OpenAI CEO amid calls for ethical reforms, stronger governance, restored trust in leadership, and more