Artificial intelligence has grown so complex that traditional computing often struggles to keep pace. Quantum computing is offering a way forward. IonQ, a leader in trapped-ion quantum technology, is pushing this frontier by developing quantum-enhanced applications, particularly in AI. By blending quantum hardware with machine learning techniques, IonQ shows how AI systems can process data more efficiently, solve optimization tasks faster, and detect patterns that classical machines often miss. This integration of quantum and AI promises to expand what these technologies can accomplish together and bring fresh solutions to long-standing computational challenges.

IonQ’s technology is built on trapped ions acting as quantum bits, or qubits. Trapped-ion qubits are more stable and precise than the qubits in many other quantum designs. That reliability is critical when applying quantum computing to AI, where computations involve enormous search spaces and intricate optimization. These are areas where classical supercomputers can struggle or require prohibitive time and energy.

Quantum computers use superposition and entanglement to represent many candidate solutions in a single quantum state, and quantum algorithms rely on interference to raise the probability of measuring the useful ones. IonQ’s improved hardware, offering more qubits and better connectivity, makes it possible to run algorithms with practical results. Techniques such as quantum support vector machines, quantum principal component analysis, and quantum generative models have already been demonstrated on IonQ machines, showing early advantages for tasks like classification and clustering on high-dimensional datasets.
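To make the idea behind a quantum support vector machine more concrete, here is a minimal sketch of a quantum kernel, simulated classically in plain Python. It is an illustration under stated assumptions, not IonQ's implementation: the feature map (one RY rotation per feature, one qubit per feature) and the function names `feature_state`, `quantum_kernel`, and `gram_matrix` are hypothetical choices made for this example. A product-state map like this one is easy to simulate classically, so it shows the mechanics only, not any quantum advantage.

```python
import math

def feature_state(x):
    """Encode each feature as an RY rotation angle on its own qubit.

    Returns the amplitudes of the resulting product state, starting from |0...0>.
    (Hypothetical toy feature map chosen for illustration.)
    """
    state = [1.0]
    for angle in x:
        # RY(angle)|0> = cos(angle/2)|0> + sin(angle/2)|1>
        qubit = [math.cos(angle / 2), math.sin(angle / 2)]
        # Tensor product of the state so far with the new qubit
        state = [a * b for a in state for b in qubit]
    return state

def quantum_kernel(x, y):
    """Kernel value = squared overlap |<phi(x)|phi(y)>|^2 of the two encoded states."""
    overlap = sum(a * b for a, b in zip(feature_state(x), feature_state(y)))
    return overlap ** 2

def gram_matrix(points):
    """Pairwise kernel matrix, the input a classical SVM solver would consume."""
    return [[quantum_kernel(p, q) for q in points] for p in points]
```

On real hardware the overlap would be estimated from repeated measurements rather than computed exactly. The resulting matrix would then be handed to an ordinary classical SVM solver that accepts a precomputed kernel, which is where the "support vector machine" part happens.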

A significant part of IonQ’s contribution is making this technology widely accessible through cloud platforms. Developers and researchers can experiment with quantum-enhanced machine learning without needing to maintain the hardware. This lowers barriers to incorporating quantum computing into AI workflows, allowing for more rapid experimentation and development of applications.

The combined use of quantum computing and AI is already showing promise across several industries. In finance, quantum machine learning helps optimize investment portfolios by analyzing large market datasets more effectively. Healthcare is testing quantum-enhanced models to accelerate drug discovery and improve diagnostic accuracy by identifying patterns in complex biological data that classical tools may overlook.

In logistics and transportation, IonQ’s systems have been used to design more efficient routing and scheduling, handling combinatorial problems faster than traditional algorithms. This has the potential to reduce costs and improve service reliability. Energy companies are exploring quantum-enhanced applications for managing electricity grids and renewable energy flows, with the aim of forecasting more accurately and balancing supply with demand.

Natural language processing is another area beginning to benefit. Early research into quantum-enhanced NLP shows it can improve how language models recognize and generate text, cutting down training times and boosting accuracy as datasets grow in size and complexity. These results suggest that as quantum hardware improves, it will continue to open up new kinds of AI-driven applications, including those in climate research, autonomous systems, and security.

What stands out about IonQ’s progress is that quantum computing does not replace classical systems but works alongside them. Hybrid approaches, in which parts of a computation are handled by classical processors and others by quantum circuits, are proving the most effective path for now.
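The division of labor in a hybrid approach can be sketched with a toy variational loop. The example below is a minimal sketch under stated assumptions, not IonQ's workflow: the "quantum" part is a one-qubit circuit simulated exactly in plain Python, and the names `expectation_z`, `parameter_shift_grad`, and `minimize` are hypothetical. The circuit evaluates a cost, and a classical gradient-descent loop updates the circuit parameter using the parameter-shift rule, which obtains the gradient from two extra circuit evaluations.

```python
import math

def expectation_z(theta):
    """'Quantum' step: prepare RY(theta)|0> and return the expectation value of Z.

    Simulated exactly here; on hardware this would be estimated from measurement counts.
    """
    amp0 = math.cos(theta / 2)  # amplitude of |0>
    amp1 = math.sin(theta / 2)  # amplitude of |1>
    return amp0 ** 2 - amp1 ** 2

def parameter_shift_grad(theta):
    """Gradient of the expectation value via the parameter-shift rule."""
    shift = math.pi / 2
    return 0.5 * (expectation_z(theta + shift) - expectation_z(theta - shift))

def minimize(theta=0.4, learning_rate=0.4, steps=100):
    """Classical step: plain gradient descent on the circuit parameter."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_grad(theta)
    return theta, expectation_z(theta)
```

Calling `minimize()` drives the parameter toward the angle that flips the qubit to |1>, where the expectation value of Z is lowest. Larger hybrid algorithms follow the same pattern with more qubits, more parameters, and a more capable classical optimizer.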

The progress so far has not come without difficulties. Scaling the number of qubits while maintaining coherence and minimizing errors remains a hard engineering problem. AI tasks demand consistent, trustworthy outputs, so reducing noise and developing error correction techniques remain key goals.

IonQ continues to improve gate fidelity and apply smarter error-mitigation software to address these issues. Trapped-ion qubits already hold an advantage in terms of stability, but maintaining quality as systems grow remains a careful balancing act.

Another challenge is adapting existing AI algorithms for quantum hardware. Many conventional models need to be rewritten or redesigned to take advantage of quantum properties. Hybrid systems have become a practical answer here, allowing developers to gradually integrate quantum techniques without abandoning established methods.

IonQ is also working to educate developers and build a wider community familiar with this technology. Training researchers to develop and deploy quantum-enhanced AI applications is a crucial step toward making these systems more usable and impactful in various fields.

IonQ’s progress suggests that quantum-enhanced applications are no longer limited to theory. Early demonstrations have already shown that real-world AI problems can benefit from quantum acceleration. As the technology matures, more businesses and researchers are expected to adopt hybrid approaches to tackle data-intensive tasks and optimization problems more effectively.

The accessibility of IonQ’s machines through the cloud means that quantum-enhanced tools are within reach for companies of all sizes, not just well-funded research labs. As more industries experiment with the technology, new applications are likely to emerge, including ones we can’t yet fully predict.

By focusing on hybrid systems, improving hardware, and creating user-friendly platforms, IonQ is showing how quantum-enhanced AI can be practical and impactful even before fully fault-tolerant quantum computers arrive. The benefits may start small, but they are building momentum and demonstrating that quantum computing can improve how AI learns and makes decisions.

The future promises more advanced applications across medicine, finance, logistics, and beyond, showing that quantum and AI together can solve problems that would overwhelm classical methods alone. IonQ’s work makes it clear that this is not a distant dream, but a technology that is beginning to shape what comes next.

IonQ is showing that quantum computing can already make a difference in tackling AI’s biggest challenges. Its trapped-ion systems bring stability and accuracy to quantum-enhanced applications, making them practical for solving data-heavy and optimization-focused tasks. By combining accessible cloud-based platforms with ongoing hardware improvements, IonQ is helping researchers and businesses integrate quantum techniques into AI today. As both the hardware and algorithms continue to evolve, IonQ is setting a foundation for quantum and AI to work together more closely, creating smarter, more efficient ways to analyze and act on information. The possibilities ahead are wide and promising.

Advertisement

How a machine learning algorithm uses wearable technology data to predict mood changes, offering early insights into emotional well-being and mental health trends

Achieve lightning-fast SetFit Inference on Intel Xeon processors with Hugging Face Optimum Intel. Discover how to reduce latency, optimize performance, and streamline deployment without compromising model accuracy

How benchmarking text generation inference helps evaluate speed, output quality, and model inference performance across real-world applications and workloads

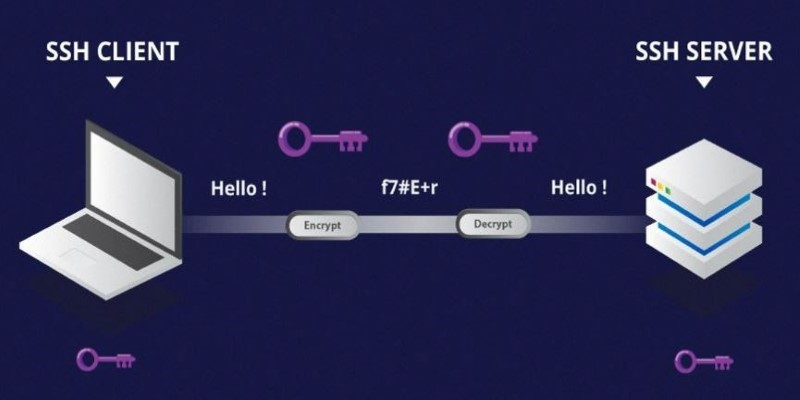

Learn the difference between SSH and Telnet in cyber security. This article explains how these two protocols work, their security implications, and why SSH is preferred today

Can small AI agents understand what they see? Discover how adding vision transforms SmolAgents from scripted tools into adaptable systems that respond to real-world environments

AI saved Google from facing an antitrust breakup, but the trade-offs raise questions. Explore how AI reshaped Google’s future—and its regulatory escape

Watsonx AI bots help IBM Consulting deliver faster, scalable, and ethical generative AI solutions across global client projects

Accelerate AI with AWS GenAI tools offering scalable image creation and model training using Bedrock and SageMaker features

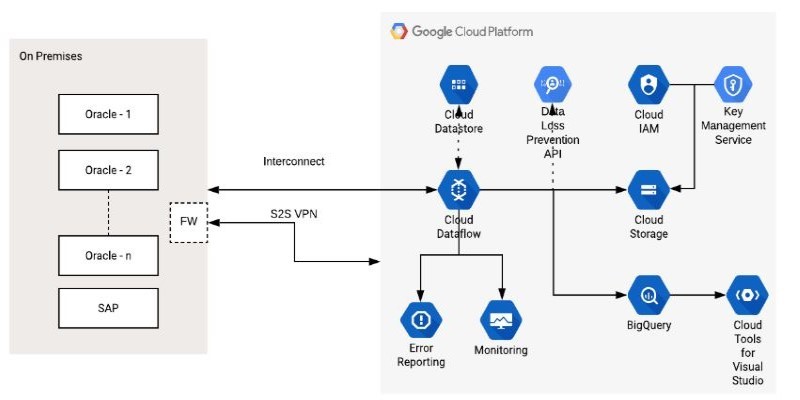

How the Google Cloud Dataflow Model helps you build unified, scalable data pipelines for streaming and batch processing. Learn its features, benefits, and connection with Apache Beam

How AI middle managers tools can reduce administrative load, support better decision-making, and simplify communication. Learn how AI reshapes the role without replacing the human touch

Discover the best Artificial Intelligence movies that explore emotion, identity, and the blurred line between humans and machines. From “Her” to “The Matrix,” these classics show how AI in cinema has shaped the way we think about technology and humanity

Know how AI transforms Cybersecurity with fast threat detection, reduced errors, and the risks of high costs and overdependence