Nvidia NeMo Guardrails provides a smart solution to growing concerns about the safety of AI chatbots. Safety, trust, and bias concerns follow closely as AI capabilities expand. AI bots must provide accurate, polite, and objective information to users. Nvidia's NeMo Guardrails framework helps control AI behavior by setting safety limits. It monitors conversations and prevents bots from giving harmful or false responses.

Filtering dangerous material and enforcing usage guidelines help people trust AI-powered products. Tools like NeMo Guardrails make it possible to address trust issues with AI bots effectively. Responsible AI use now relies on tools like NeMo Guardrails as a foundational necessity.

AI chatbots are increasingly used in customer service, education, and healthcare. This widespread adoption makes safe and reliable AI interactions critically important. Many bots respond based on patterns in their training data, which may contain bias or misinformation. That raises public concerns about the reliability of AI. Toxic statements or inaccurate responses can destroy user confidence. Developers are actively seeking tools that can prevent such issues. Nvidia NeMo Guardrails was designed to address these concerns. It establishes protective guidelines that make user interactions safer.

Businesses that deploy AI bots want to avoid brand damage, user frustration, and lawsuits. Ensuring ethical AI behavior is now a shared responsibility. NeMo Guardrails and other tools that steer AI behavior provide a clear path forward. These solutions enable smarter, safer, and more effective communication. Companies today understand that trust is not optional; it is fundamental to long-term success with AI.

Nvidia NeMo Guardrails is an open-source toolkit for controlling AI chatbot behavior. It establishes conversational limits that keep responses within ethical and safe bounds. It helps developers specify what an AI system may not do, which means blocking offensive material, dangerous advice, or false statements. Guardrails also keep AI bots focused on their intended purpose. Nvidia developed it as part of the broader NeMo framework, which focuses on conversational AI.

The tool supports topic guidelines covering legality, appropriateness, and factual correctness. Users can customize it according to their requirements. It works with popular large language models and cloud platforms. Real-time filtering in Guardrails helps identify dangerous content. It also keeps bots from pursuing off-topic or illegal subjects. These AI chatbot safety precautions help ensure that consumers get accurate, safe responses. That lays a firmer basis for confidence in, and acceptance of, AI technologies.

Dependability is paramount when consumers interact with AI bots for information, assistance, or advice. By controlling what the bot can say, Nvidia NeMo Guardrails increases AI dependability. It filters out wrong or irrelevant responses. Bots follow a path set by pre-defined rules, which makes them more dependable and predictable. Guardrails use pattern-matching filters to find unwanted material. When triggered, those filters stop the bot from delivering the harmful message.
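The pattern-matching idea described above can be illustrated with a minimal sketch in plain Python. This is not the NeMo Guardrails API; the names `BLOCKED_PATTERNS`, `FilterResult`, and `filter_response`, and the patterns themselves, are hypothetical and chosen only for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical block list; a real deployment would maintain its own patterns.
BLOCKED_PATTERNS = [
    re.compile(r"\bhow to (make|build) (a )?(bomb|weapon)\b", re.IGNORECASE),
    re.compile(r"\bguaranteed (cure|returns)\b", re.IGNORECASE),
]

REFUSAL = "Sorry, I can't help with that."


@dataclass
class FilterResult:
    allowed: bool                  # True if the reply may be shown to the user
    text: str                      # the reply to show (original or refusal)
    matched: Optional[str] = None  # the pattern that triggered, if any


def filter_response(candidate: str) -> FilterResult:
    """Return the candidate reply unchanged, or a refusal if a pattern matches."""
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(candidate):
            return FilterResult(False, REFUSAL, pattern.pattern)
    return FilterResult(True, candidate)
```

Calling `filter_response` on each model reply before it is displayed means a triggered pattern replaces the reply with a fixed refusal, which is the "stop the message" behavior the paragraph describes. Simple patterns like these miss paraphrases, so they are best seen as one layer among several.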

Developers can tune the system to fit their industry norms or audience. The result is a more controlled, steady communication experience. Reducing randomness in AI responses gives consumers confidence. NeMo Guardrails also helps keep the AI from making unfounded assertions. A trustworthy bot cannot guess; it must stick to known facts. The right guidelines help AI assistants be more consistent. Building trust in AI bots hinges on their being consistently accurate and polite.

Many worries about AI bots center on harmful material, toxic speech, or bias. Through content restrictions, Nvidia NeMo Guardrails helps prevent these issues. It sets up filters to catch and block offensive or sensitive language. When training data contains prejudices, bias can seep into AI answers. Guardrails help reduce these hazards in bot interactions. Developers can specify safe areas for dialogue. Guardrails stop the conversation if a user steers the bot into dangerous territory. This protects consumers and companies from bad encounters.

Furthermore, the tool lessens the possibility of inadvertent discrimination or false information. Following consistent response criteria helps AI bots become safer. Every sector has a different degree of sensitivity, and Guardrails can be adapted to match. Protecting different user groups depends on customization. Reducing harm and bias builds user confidence and satisfaction. Safety precautions for AI chatbots work best when solutions like NeMo Guardrails run behind the scenes constantly.

Every business uses AI bots for different purposes. Nvidia NeMo Guardrails makes rules and filters fully customizable. Companies can create specific dos and don'ts for AI interactions, which helps ensure adherence to industry standards and corporate policy. Legal counsel, medical opinions, or financial guidance can all be blocked using guardrails. Companies in sensitive sectors can add extra layers of protection. Guardrails also help keep bots within the brand's tone and voice.
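As a rough illustration of such business-specific dos and don'ts, the sketch below models a policy as a mapping from blocked topics to trigger keywords. It is plain Python rather than NeMo Guardrails configuration; `TopicPolicy`, `retail_policy`, and the keyword lists are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class TopicPolicy:
    """Hypothetical per-business policy: blocked topics mapped to trigger keywords."""
    blocked_topics: Dict[str, List[str]] = field(default_factory=dict)
    refusal_template: str = (
        "I can't give {topic} advice. Please consult a qualified professional."
    )

    def check(self, user_message: str) -> Optional[str]:
        """Return a refusal if the message touches a blocked topic, else None."""
        lowered = user_message.lower()
        for topic, keywords in self.blocked_topics.items():
            if any(keyword in lowered for keyword in keywords):
                return self.refusal_template.format(topic=topic)
        return None


# Example: a retailer that does not want its bot giving medical or financial advice.
retail_policy = TopicPolicy(
    blocked_topics={
        "medical": ["diagnos", "dosage", "prescription"],
        "financial": ["invest", "stock tip", "loan advice"],
    }
)
```

Keeping the policy as data rather than code lets each business swap in its own topics and refusal wording without touching the checking logic. Keyword matching is deliberately crude here; it only stands in for whatever topic detection a real deployment uses.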

Clear content limits help businesses lower the risk of public backlash. The tool integrates easily with well-known AI systems. Guardrails also provide audit logs, enabling teams to improve rule performance over time. Customer satisfaction depends on the consistency of bot communications. Trust rises when bots respond politely and accurately every time. Guardrails and other AI chatbot safety precautions help promote long-term user engagement. Custom guardrails also enable companies to create a strong digital brand experience.
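The article does not describe the audit log format, so the following is only a sketch of how logged decisions might feed rule tuning. Everything here (`audit_log`, `record_decision`, `rule_hit_counts`, and the entry fields) is an assumption for illustration, not something provided by NeMo Guardrails.

```python
from collections import Counter
from datetime import datetime, timezone
from typing import Dict, List

# In-memory log for illustration; a real system would use durable storage.
audit_log: List[Dict[str, object]] = []


def record_decision(rule: str, action: str, user_message: str) -> Dict[str, object]:
    """Append one guardrail decision to the log and return the entry."""
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "rule": rule,        # hypothetical rule name that was evaluated
        "action": action,    # "allowed" or "blocked"
        "message_length": len(user_message),  # avoid storing raw user text
    }
    audit_log.append(entry)
    return entry


def rule_hit_counts(entries: List[Dict[str, object]]) -> Counter:
    """Count how often each rule blocked a message."""
    return Counter(e["rule"] for e in entries if e["action"] == "blocked")
```

Reviewing which rules fire most often, and sampling what they blocked, is one way a team could spot rules that are too strict or too loose.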

Most development teams find it easy to add Nvidia NeMo Guardrails to their AI systems. The tool is a Python package and works with LangChain and cloud-hosted model APIs. First, developers define conversation flows and mark restricted topics. They then test the bot under a range of conditions. Guardrails can cover both what users send in and what the bot sends back, giving end-to-end protection. The framework is designed to run in real time so that users do not notice significant delays. To speed up setup, Nvidia also offers example code and documentation.
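The general shape of such an integration can be sketched as a wrapper that checks the user's message, calls the model, and then checks the reply. This is a simplified stand-in written in plain Python, not the NeMo Guardrails API; the rail functions, `guarded_chat`, and `stub_model` are hypothetical names used for illustration.

```python
from typing import Callable, List

REFUSAL = "Sorry, I can't help with that request."

# A rail is a function that returns True when the text is acceptable.
Rail = Callable[[str], bool]


def no_restricted_topic(text: str) -> bool:
    """Hypothetical input rail: reject messages that mention restricted subjects."""
    restricted = ("legal advice", "medical advice")
    return not any(term in text.lower() for term in restricted)


def no_unsupported_guarantee(text: str) -> bool:
    """Hypothetical output rail: reject replies that promise guaranteed outcomes."""
    return "guaranteed" not in text.lower()


def guarded_chat(user_message: str, model: Callable[[str], str],
                 input_rails: List[Rail], output_rails: List[Rail]) -> str:
    """Run input rails, call the model, then run output rails on its reply."""
    if not all(rail(user_message) for rail in input_rails):
        return REFUSAL  # blocked before the model is ever called
    reply = model(user_message)
    if not all(rail(reply) for rail in output_rails):
        return REFUSAL  # model reply blocked before reaching the user
    return reply


def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs without network access."""
    if "refund" in prompt.lower():
        return "Refunds are guaranteed within minutes."
    return f"Here is some help with: {prompt}"
```

Testing the bot "under a range of conditions" then amounts to calling `guarded_chat` with representative messages, including ones that should be refused, and confirming that each gets the expected treatment. Swapping `stub_model` for a real model call leaves the rails unchanged.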

Integration entails mapping user objectives, possible hazards, and chatbot limitations. Teams should routinely update guardrail settings based on new information or user feedback. Keeping AI behavior consistent with brand guidelines is vital. From banking to e-commerce, guardrails give teams the flexibility to handle many use cases. Integrating Guardrails early helps teams prevent costly mistakes later. Trust develops when AI tools are secure and goal-oriented from the beginning.

Nvidia NeMo Guardrails strengthens trust between users and AI by enabling safer chatbot interactions. It filters bias, helps prevent harmful responses, and improves reliability. AI chatbot safety has taken center stage in today's digital landscape. Companies and developers now rely on solutions like Guardrails to enforce ethical standards. Safe and accurate AI leads to greater user confidence and long-term success. By building trust in AI bots, NeMo Guardrails is helping shape the direction of ethical AI.

Advertisement

Discover the exact AI tools and strategies to build a faceless YouTube channel that earns $10K/month.

Learn how to compare two regression models with statistical significance for accuracy, reliability, and better decision-making

How does Docmatix reshape document understanding for machines? See why this real-world dataset with diverse layouts, OCR, and multilingual data is now essential for building DocVQA systems

Know how AI transforms Cybersecurity with fast threat detection, reduced errors, and the risks of high costs and overdependence

How the Model-Connection Platform (MCP) helps organizations connect LLMs to internal data efficiently and securely. Learn how MCP improves access, accuracy, and productivity without changing your existing systems

How benchmarking text generation inference helps evaluate speed, output quality, and model inference performance across real-world applications and workloads

How IBM expands AI features for the 2025 Masters Tournament, delivering smarter highlights, personalized fan interaction, and improved accessibility for a more engaging experience

How using open-source AI models can give your startup more control, lower costs, and a faster path to innovation—without relying on expensive black-box systems

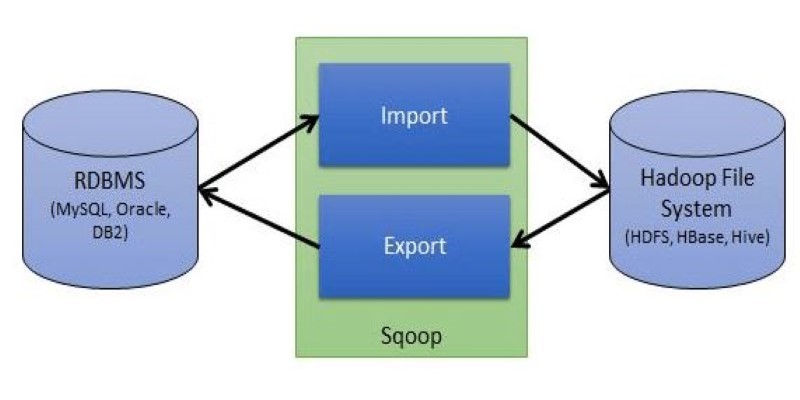

Explore Apache Sqoop, its features, architecture, and operations. Learn how this tool simplifies data transfer between Hadoop and relational databases with speed and reliability

How can vision-language models learn to respond more like people want? Discover how TRL uses human preferences, reward models, and PPO to align VLM outputs with what actually feels helpful

Struggling to connect tables in SQL queries? Learn how the ON clause works with JOINs to accurately match and relate your data

How MPT-7B and MPT-30B from MosaicML are pushing the boundaries of open-source LLM technology. Learn about their architecture, use cases, and why these models are setting a new standard for accessible AI