AI is transforming cybersecurity by reshaping digital defense strategies across industries. Security teams now use artificial intelligence for rapid incident response and efficient threat detection. These systems analyze vast data streams to identify patterns that humans might miss. AI-based security solutions help forecast potential breaches, reduce manual effort, and boost productivity.

However, these benefits come with notable challenges. From ethical concerns to high implementation costs, using AI in security systems demands careful evaluation. This article outlines AI's primary advantages and disadvantages in cybersecurity to help decision-makers assess both sides. Whether they are improving threat intelligence or navigating deployment challenges, organizations must weigh AI's role carefully to make informed, long-term decisions.

Below are some of the advantages of AI in cybersecurity:

AI can detect threats faster than traditional tools. It analyzes real-time traffic and behavioral patterns to detect anomalies, and it flags irregularities such as phishing attempts, brute-force attacks, or malware activity as soon as they appear. These notifications let teams act quickly, which reduces damage: fast identification limits access to sensitive data and lessens the effects of a breach. AI systems are also continually updated with new threat intelligence, so they keep pace with fast-changing attacker tools, tactics, and techniques. This adaptability keeps them current and proactive. Advanced AI monitoring can even surface activity that points to zero-day exploits, for which no signature yet exists. Response times improve as well. Automated containment, such as isolating endpoints or closing ports, becomes faster, and security operations grow more nimble. AI enhances detection and response capabilities without compromising performance or requiring constant manual checks.
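To make the idea of anomaly detection on live traffic concrete, here is a minimal sketch using a rolling z-score, which is a simple statistical stand-in rather than a trained model. The function name `detect_spikes`, the window size, the threshold, and the sample counts are all illustrative assumptions, not taken from any particular product.

```python
from collections import deque
from statistics import mean, pstdev


def detect_spikes(counts, window=5, threshold=3.0):
    """Return indices whose value sits more than `threshold` standard
    deviations above the mean of the preceding `window` normal values."""
    history = deque(maxlen=window)  # rolling baseline of recent normal values
    flagged = []
    for i, value in enumerate(counts):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history) or 1.0  # fall back to 1.0 on perfectly flat traffic
            if (value - mu) / sigma > threshold:
                flagged.append(i)
                continue  # keep the spike out of the baseline
        history.append(value)
    return flagged


# Hypothetical failed-login counts per minute; index 7 simulates a brute-force burst.
failed_logins = [2, 3, 2, 4, 3, 2, 3, 60, 3, 2]
print(detect_spikes(failed_logins))  # [7]
```

Production systems typically replace the z-score with learned models and many more features, but the shape is the same: maintain a baseline, compare each new observation against it, and raise a flag when the deviation is large.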

By filtering out false positives, AI-based security solutions reduce alert fatigue. Traditional systems often generate a flood of routine alerts that overwhelms security teams, and personnel have to review that noise manually. AI instead uses behavioral baselines and contextual analysis to detect unusual activity. More intelligent filtering ensures that actual threats aren't buried under pointless alarms, so teams can concentrate on real hazards instead of wasting time on false flags. Machine learning models analyze trends across systems and improve accuracy over time. These models factor in time-based anomalies, user behavior, and access frequency. This approach lets security experts concentrate on what is important: procedures run more smoothly, and resources are used more efficiently. In cybersecurity, artificial intelligence does more than detect faster; it also prioritizes more intelligently. Fewer false positives translate into faster resolution and greater confidence in the system. In high-pressure cybersecurity environments, this reliability makes AI an asset rather than a liability.
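The baseline-and-context filtering described above can be sketched roughly as follows. This is a toy illustration under stated assumptions: the `Alert` fields, the per-user history, the scoring rule (a sum of absolute z-scores), and the cutoff of 4.0 are all hypothetical, and a real deployment would use far richer features.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Alert:
    user: str
    login_hour: int          # 0-23, hour of the triggering login
    resources_accessed: int  # number of resources touched in the session


def build_baseline(history):
    """history: list of (login_hour, resources_accessed) tuples for one user."""
    hours = [h for h, _ in history]
    volumes = [v for _, v in history]
    return {
        "hour_mean": mean(hours), "hour_std": pstdev(hours) or 1.0,
        "vol_mean": mean(volumes), "vol_std": pstdev(volumes) or 1.0,
    }


def risk_score(alert, baseline):
    """Sum of absolute z-scores; larger means further from the user's norm.
    Hours are treated as linear here, ignoring wrap-around at midnight."""
    hour_z = abs(alert.login_hour - baseline["hour_mean"]) / baseline["hour_std"]
    vol_z = abs(alert.resources_accessed - baseline["vol_mean"]) / baseline["vol_std"]
    return hour_z + vol_z


def triage(alerts, baselines, cutoff=4.0):
    """Escalate only alerts that deviate strongly from the user's baseline."""
    return [a for a in alerts if risk_score(a, baselines[a.user]) > cutoff]


# Hypothetical history: this user logs in around 9:00 and touches about 11 resources.
baselines = {"alice": build_baseline([(9, 10), (10, 12), (9, 11), (8, 9), (10, 13)])}
alerts = [Alert("alice", 9, 12), Alert("alice", 2, 140)]
escalated = triage(alerts, baselines)
print(escalated)  # only the 02:00 login touching 140 resources remains
```

The routine 09:00 login is suppressed because it sits close to the user's own history, while the 02:00 session is escalated. The cutoff is the tuning knob: lowering it catches more threats at the price of more noise.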

AI offers round-the-clock defense. Unlike human teams, which need downtime, artificial intelligence systems never stop running. This non-stop surveillance ensures continuous awareness of devices, systems, and networks. Every odd behavior is reported immediately, even at two in the morning. Another important strength is scalability. AI-based security systems process massive amounts of data without pausing. IoT, mobile, and cloud technologies generate continuous streams of signals, and artificial intelligence can handle them all. The same approach scales whether an organization has 50 or 50,000 endpoints. Organizations gain consistency as well. Human fatigue and oversight stop being a factor, because every file, login, or packet is examined in the same way. AI systems also integrate well with SIEM tools, firewalls, and threat feeds, which builds a consistent, responsive protection layer. In cybersecurity, artificial intelligence extends coverage without a matching increase in personnel or expenses. As a result, organizations achieve stronger security across growing digital infrastructures.

Below are some of the disadvantages of AI in cybersecurity:

Developing and maintaining AI-based security systems is expensive. Building AI-driven threat detection requires advanced hardware, software, and skilled professionals, and many small businesses cannot afford these expenses. The initial setup calls for gathering enormous amounts of training data. Keeping the systems current requires ongoing access to fresh data sources and threat feeds, which adds to the continuous maintenance workload. Technical complexity presents an additional challenge. An improperly configured AI system can either miss threats or raise alarms too frequently. Human control remains vital, even with automation. Teams need professionals with AI literacy who can interpret system outputs, and IT departments often lack those skills. Integration with legacy systems brings further difficulties. Not every organization is suited to AI-enabled cybersecurity solutions, and poor planning can leave the investment delivering less than expected. For many, the high cost and technical obstacles offset the possible benefits of artificial intelligence for threat detection.

AI models are targets themselves. Attackers fool machine learning systems with adversarial methods: they manipulate input data to mislead the model into making bad decisions. Malware might be disguised, for instance, to appear innocuous and avoid detection. Once misled, an AI system may ignore warning signs or act in error. Attackers might even use artificial intelligence to study a company's defenses and craft custom attacks. These are advanced and dangerous kinds of cyberattack. Because artificial intelligence learns from data, feeding it poisoned or biased inputs during training can have long-lasting effects. The system might eventually become useless or reinforce erroneous threat models. Constant oversight of artificial intelligence in cybersecurity defense helps to prevent these manipulations. Otherwise, the tools used to guard systems could become security liabilities.
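The effect of poisoned training data can be shown with a deliberately simple detector. The sketch below assumes a toy model that learns a cutoff (mean plus three standard deviations) from sessions labelled benign. The byte counts, the function names, and the injected samples are all hypothetical, and real models and real attacks are considerably more subtle.

```python
from statistics import mean, pstdev


def fit_threshold(benign_samples, k=3.0):
    """Learn a cutoff as mean + k standard deviations of data labelled benign."""
    return mean(benign_samples) + k * pstdev(benign_samples)


def is_flagged(value, threshold):
    """A session is flagged when its outbound volume exceeds the learned cutoff."""
    return value > threshold


# Hypothetical outbound kilobytes per session, all genuinely benign.
clean = [100, 110, 95, 105, 90, 100, 108, 92]
# Attacker-injected sessions that were mislabelled as benign before retraining.
poison = [900, 950, 1000]
# The transfer the attacker actually wants to hide.
exfil = 800

clean_threshold = fit_threshold(clean)
poisoned_threshold = fit_threshold(clean + poison)

print(is_flagged(exfil, clean_threshold))     # True: caught by the clean model
print(is_flagged(exfil, poisoned_threshold))  # False: slips past the poisoned model
```

Three mislabelled samples are enough to push the cutoff above the attacker's transfer size. This is why validating training data and monitoring retrained models against known-bad examples matter as much as the model itself.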

Overreliance on artificial intelligence poses significant risks. If decision-makers depend entirely on AI, they may neglect traditional security measures and human oversight. Without active human involvement, critical errors can go unnoticed for extended periods, increasing the potential for serious breaches. AI cannot fully grasp context the way humans do. It might flag or overlook behavior without regard for intent or circumstances. Critical thought and ethical judgment still have to come from people. Should the system fail, recovery will depend on how quickly human teams act. Black-box models are also less transparent. Security teams might not fully understand how the AI reaches its decisions, which makes audits and long-term system improvement difficult. AI-based security solutions should support, not replace, human judgment. Without that balance, organizations risk being unprepared when artificial intelligence underperforms or fails. Although artificial intelligence is strong at threat identification, it needs human intuition to be dependable and safe.

In cybersecurity, artificial intelligence provides quick, scalable, intelligent screening that strengthens defense plans. Using AI for threat detection identifies attacks early, which saves time, improves incident response, and helps to uncover vulnerabilities. However, AI-based systems require significant investment, skilled teams, and vigilant oversight to prevent misuse. Their dependability can be compromised by overdependence or adversarial manipulation. Organizations should collaborate with AI rather than rely on it exclusively. The best outcomes emerge from pairing human oversight with AI capabilities. Careful application ensures that security remains smart, flexible, and strong in an ever-shifting digital threat environment.

Advertisement

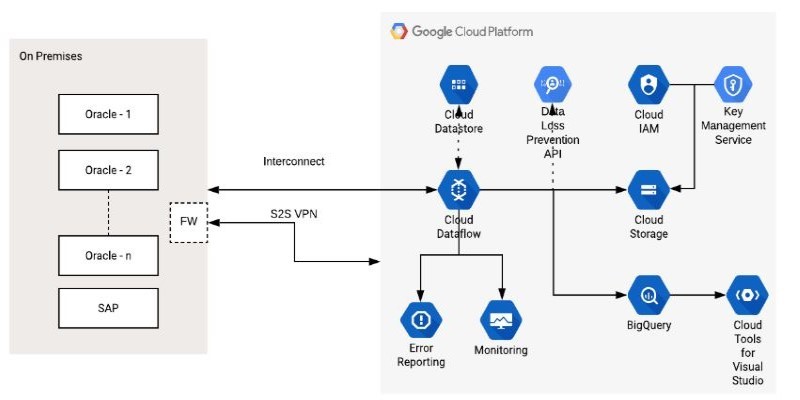

How the Google Cloud Dataflow Model helps you build unified, scalable data pipelines for streaming and batch processing. Learn its features, benefits, and connection with Apache Beam

Writer unveils a new AI platform empowering businesses to build and deploy intelligent, task-based agents.

Watsonx AI bots help IBM Consulting deliver faster, scalable, and ethical generative AI solutions across global client projects

AI-first devices are reshaping how people interact with technology, moving beyond screens and apps to natural, intelligent experiences. Discover how these innovations could one day rival the iPhone by blending convenience, emotion, and AI-driven understanding into everyday life

Nvidia is set to manufacture AI supercomputers in the US for the first time, while Deloitte deepens agentic AI adoption through partnerships with Google Cloud and ServiceNow

Discover the exact AI tools and strategies to build a faceless YouTube channel that earns $10K/month.

Is the future of U.S. manufacturing shifting back home? Siemens thinks so. With a $190M hub in Fort Worth, the company is betting big on AI, automation, and domestic production

Discover the latest machine learning salary trends shaping 2025. Explore how experience, location, and industry impact earnings, and learn why AI careers continue to offer strong growth and global opportunities for skilled professionals

IBM showcased its agentic AI at RSAC 2025, introducing a new approach to autonomous security operations. Learn how this technology enables faster response and smarter defense

Ready to make computers see like humans? Learn how to get started with OpenCV—install it, process images, apply filters, and build a real foundation in computer vision with just Python

Can AI really help a Formula One team build faster, smarter cars? With real-time data crunching, simulation, and design automation, teams are transforming racing—long before the track lights go green

How IBM and L’Oréal are leveraging generative AI for cosmetics to develop safer, sustainable, and personalized beauty solutions that meet modern consumer needs