Voice assistants inside cars have come a long way since the early days of clunky command recognition. But for most drivers, they still feel limited: too robotic, too rigid, too frustrating. That's changing. A new AI company has launched a platform that reimagines how these systems operate from the ground up. This isn't about adding more features. It's about creating better interactions, where your in-car assistant doesn't just answer, but understands.

The platform's goal is to bring natural conversation and real-time context to voice-enabled driving, making it feel less like talking to a gadget and more like speaking with a helpful co-pilot.

Most in-car assistants today are built on predefined sets of commands. You say the right phrase, and the assistant responds—if you're lucky. But this new platform relies on generative AI to interpret language more fluidly. Instead of expecting exact phrasing, it tries to grasp intent. This means drivers can speak naturally: "Can you find a quiet coffee place nearby?" gets a smarter, more filtered answer than the typical GPS search. The system also picks up on patterns over time—what the driver likes, when they're leaving for work, and how they prefer their music or temperature settings—and starts tailoring responses accordingly.

The key innovation is its layered architecture. On one level, the system handles direct instructions. On another, it uses large language models to process nuance. And behind it all is an edge AI system that operates offline when needed, keeping latency low and ensuring voice controls continue to function in areas with weak connectivity. That's critical for reliability, especially on highways or remote roads.
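To make the layering concrete, here is a minimal Python sketch of how a request might be routed. It is an illustration under stated assumptions, not the platform's actual code: the command table, the `route` function, and the `cloud_llm` and `edge_model` callables are all hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical fixed-phrase table for the direct-instruction layer.
DIRECT_COMMANDS = {
    "volume up": "media.volume_up",
    "volume down": "media.volume_down",
    "next track": "media.next_track",
}


@dataclass
class Reply:
    layer: str   # which layer produced the answer
    action: str  # the action or text the assistant settled on


def route(
    utterance: str,
    online: bool,
    cloud_llm: Callable[[str], str],
    edge_model: Callable[[str], str],
) -> Reply:
    """Try the cheapest layer first and fall back to the on-device model."""
    text = utterance.strip().lower()

    # Layer 1: exact, predefined commands need no model at all.
    if text in DIRECT_COMMANDS:
        return Reply("direct", DIRECT_COMMANDS[text])

    # Layer 2: a cloud-hosted language model, only when a connection exists.
    if online:
        try:
            return Reply("cloud_llm", cloud_llm(text))
        except ConnectionError:
            pass  # signal dropped mid-request; fall through to the edge layer

    # Layer 3: the local (edge) model keeps voice control working offline.
    return Reply("edge", edge_model(text))
```

In this sketch the offline path is a fallback rather than a separate mode, so a dropped connection in the middle of a request degrades the answer instead of failing it.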

Context is often where current assistants fall flat. They forget what you said two minutes ago, or they can’t connect your music request with your mood or driving conditions. This new platform adds persistent context. It keeps track of the conversation—where you’ve been, what you’ve asked—and adapts its answers based on that thread. If you ask about parking after searching for restaurants, it understands you’re probably arriving soon and offers suggestions near the destination.
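The parking example can be sketched in a few lines of Python. This is a simplified illustration, assuming a rolling window of recent turns; the `Turn` and `ConversationContext` classes and the `resolve_parking_query` helper are hypothetical and are not taken from the platform.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Optional


@dataclass
class Turn:
    intent: str                  # e.g. "find_restaurant", "play_music"
    place: Optional[str] = None  # a destination mentioned in this turn, if any


@dataclass
class ConversationContext:
    max_turns: int = 10
    turns: Deque[Turn] = field(default_factory=deque)

    def remember(self, turn: Turn) -> None:
        """Store a turn and drop the oldest ones beyond the window."""
        self.turns.append(turn)
        while len(self.turns) > self.max_turns:
            self.turns.popleft()

    def last_place(self) -> Optional[str]:
        """Return the most recently mentioned place still in the window."""
        for turn in reversed(self.turns):
            if turn.place is not None:
                return turn.place
        return None


def resolve_parking_query(ctx: ConversationContext) -> str:
    """Anchor a bare 'find parking' request to the earlier destination."""
    place = ctx.last_place()
    if place is None:
        return "parking near current location"
    return f"parking near {place}"
```

A request for parking made after a restaurant search resolves to that restaurant, while the same request with no remembered destination falls back to the car's current location.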

It also taps into the vehicle's sensors to gather information. Is it raining? The assistant can suggest indoor spots or warn about slippery roads. Is the fuel low? It can recommend a gas station along your current route, not just the nearest one. This sensor integration isn't just for convenience. It's part of how the platform builds relevance into its responses. And by merging visual input from the dashboard or mobile screen with voice cues, it supports multimodal interaction. A driver can say “Show me alternate routes,” and get visual suggestions instantly on the screen, no tapping required.
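The difference between "nearest" and "along your route" is easy to show in code. The Python sketch below is only an assumption about how such a choice could be made: the `Station` fields, the `pick_station` function, and the 2 km detour threshold are all invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Station:
    name: str
    distance_km: float  # distance along the route to the turn-off point
    detour_km: float    # extra driving compared with staying on the route


def pick_station(
    stations: List[Station],
    range_km: float,
    max_detour_km: float = 2.0,  # hypothetical cutoff for "on the route"
) -> Optional[Station]:
    """Prefer a reachable station with a small detour over the closest one."""
    # Only consider stations the car can actually reach on remaining fuel.
    reachable = [s for s in stations if s.distance_km + s.detour_km <= range_km]

    # Among those, prefer stations that barely leave the planned route.
    on_route = [s for s in reachable if s.detour_km <= max_detour_km]

    candidates = on_route or reachable
    if not candidates:
        return None
    return min(candidates, key=lambda s: (s.detour_km, s.distance_km))
```

With plenty of range left, a station a few kilometers ahead on the route wins over a closer one that requires a long detour. When fuel is nearly gone, the function falls back to whatever is reachable.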

The growth of AI-powered in-car assistants raises privacy concerns. Voice data, location, driving habits—these are sensitive pieces of information. This platform addresses that directly. Much of the processing happens on the vehicle itself, using edge computing. That means voice recordings and behavior data stay local unless explicitly shared. Drivers can choose whether to sync their preferences across vehicles or keep everything isolated. That choice matters, especially as vehicles become more connected and car manufacturers partner with cloud platforms.
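One way to picture "local unless explicitly shared" is an allow-list that starts empty. The following Python sketch assumes a simple dictionary profile and two opt-in switches; the key names, `PrivacySettings`, and `select_for_upload` are hypothetical and do not describe the platform's real data model.

```python
from dataclasses import dataclass
from typing import Any, Dict

# Hypothetical grouping of profile keys by what the driver may opt in to share.
PREFERENCE_KEYS = {"music_genre", "cabin_temp_c"}
RECORDING_KEYS = {"voice_clips"}


@dataclass(frozen=True)
class PrivacySettings:
    sync_preferences: bool = False        # off by default: nothing leaves the car
    share_voice_recordings: bool = False  # off by default


def select_for_upload(
    profile: Dict[str, Any], settings: PrivacySettings
) -> Dict[str, Any]:
    """Return only the fields the driver has explicitly agreed to share."""
    allowed = set()
    if settings.sync_preferences:
        allowed |= PREFERENCE_KEYS
    if settings.share_voice_recordings:
        allowed |= RECORDING_KEYS
    # Any key outside the allow-list stays on the vehicle.
    return {key: value for key, value in profile.items() if key in allowed}
```

Because the defaults are all `False`, a driver who never touches the settings uploads nothing, and fields outside both groups are never sent regardless of the switches.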

Offline functionality is another strong point. The assistant doesn't just shut down when the car's out of signal range. Core features, such as navigation, media control, and routine queries, remain functional thanks to pre-trained models running locally. This is where the platform stands out—by blending cloud access with independent capability. It gives drivers flexibility without sacrificing responsiveness.

Several automakers and mobility startups are already integrating the platform into upcoming models. Most are using it not as a full voice operating system, but as an enhancement layer on top of their existing software. That means it works with legacy infotainment systems as long as they support API-level access. The AI company behind the platform is also working with navigation providers and streaming services to streamline how information is passed between apps and the assistant.

Beyond personal vehicles, there's interest from ride-hailing services and fleet operators. For them, the assistant could act as a voice layer for passengers—offering route updates, music control, or even answering questions about the trip—all without a human driver needing to intervene. This shift toward broader, more natural AI interactions inside vehicles mirrors the trend seen in homes and phones, where assistants are expected to adapt to the user, not the other way around.

The platform's roadmap includes multilingual support, emotional tone recognition, and even potential integrations with driver wellness tools. Imagine an assistant that notices you're tired, based on slower reaction times or repeated yawns, and suggests a rest stop. That's the direction this technology is heading, and while not every feature is live yet, the infrastructure is now in place.

The launch of this platform isn’t just about better voice commands. It’s about rethinking how humans and machines interact inside cars. It pushes in-car AI from a gimmick toward something more intuitive, something that learns and adjusts as you drive. As the line between vehicle software and driver behavior continues to blur, having a system that can keep up in real time becomes more than a feature; it becomes part of the driving experience.

Whether it's suggesting smarter routes, playing the right kind of music, or simply understanding what you meant without making you repeat yourself, this new approach signals a quiet but significant shift in how we interact with the technology around us. By giving in-car AI the ability to understand nuance, respond with relevance, and function without full reliance on the cloud, the platform sets a new bar. It doesn't just talk back; it listens better. And for drivers tired of clunky commands and robotic replies, that’s a welcome shift.

Advertisement

What's fueling the wave of tech layoffs in 2025, from overhiring during the pandemic to the rise of AI job disruption and shifting investor demands

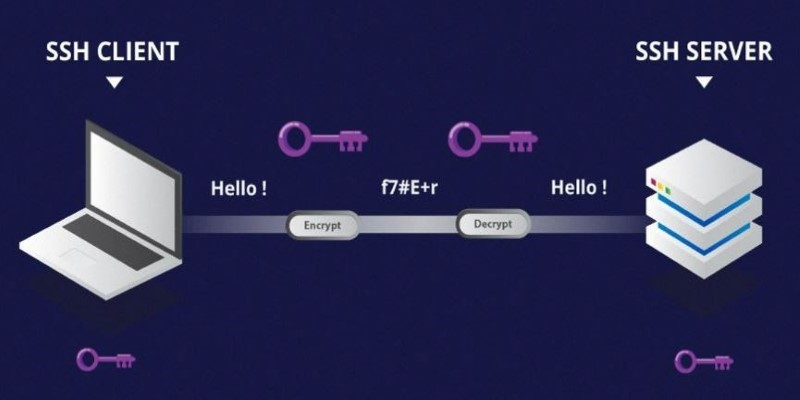

Learn the difference between SSH and Telnet in cyber security. This article explains how these two protocols work, their security implications, and why SSH is preferred today

How IBM and L’Oréal are leveraging generative AI for cosmetics to develop safer, sustainable, and personalized beauty solutions that meet modern consumer needs

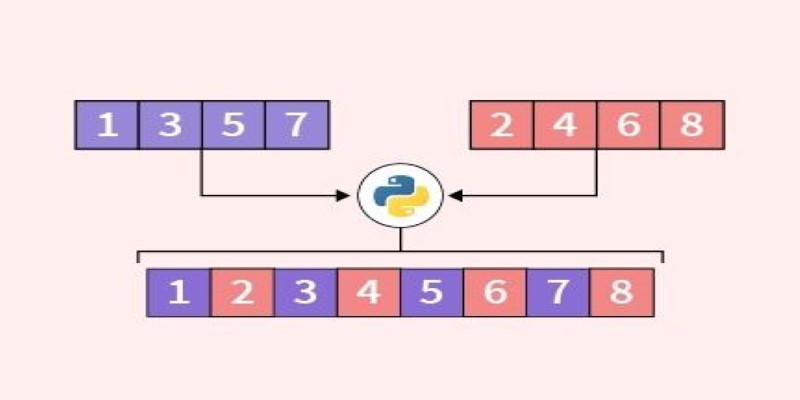

Looking for the best way to merge two lists in Python? This guide walks through ten practical methods with simple examples. Whether you're scripting or building something big, learn how to combine lists in Python without extra complexity

How IonQ advances AI capabilities with quantum-enhanced applications, combining stable trapped-ion technology and machine learning to solve complex real-world problems efficiently

Discover the best Artificial Intelligence movies that explore emotion, identity, and the blurred line between humans and machines. From “Her” to “The Matrix,” these classics show how AI in cinema has shaped the way we think about technology and humanity

Get full control over Python outputs with this clear guide to mastering f-strings in Python. Learn formatting tricks, expressions, alignment, and more—all made simple

How does Docmatix reshape document understanding for machines? See why this real-world dataset with diverse layouts, OCR, and multilingual data is now essential for building DocVQA systems

Can a small language model actually be useful? Discover how SmolLM runs fast, works offline, and keeps responses sharp—making it the go-to choice for developers who want simplicity and speed without losing quality

An AI startup has raised $1.6 million in seed funding to expand its practical automation tools for businesses. Learn how this AI startup plans to make artificial intelligence simpler and more accessible

How serverless GPU inference is transforming the way Hugging Face users deploy AI models. Learn how on-demand, GPU-powered APIs simplify scaling and cut down infrastructure costs

How MPT-7B and MPT-30B from MosaicML are pushing the boundaries of open-source LLM technology. Learn about their architecture, use cases, and why these models are setting a new standard for accessible AI