At CES 2025, Hyundai and Nvidia announced a collaboration that puts artificial intelligence at the center of future mobility. Rather than focusing only on faster vehicles or flashier dashboards, the partnership aims to redefine how transportation interacts with people and their surroundings. Using Nvidia's AI computing technology, Hyundai plans to create smarter, safer, and more intuitive systems that can adapt to real-world needs. This joint initiative signals a shift from vehicles as passive machines to vehicles as intelligent partners in daily life, offering not just convenience but deeper integration with how people live and move.

Hyundai’s Future Mobility Program, showcased at CES 2025, represents more than an upgrade to car technology. It reimagines mobility itself by weaving AI into the decision-making and interaction layers of vehicles. With Nvidia’s advanced processors and AI platforms, Hyundai is building systems capable of learning from data, predicting driver preferences, and responding to complex traffic and environmental conditions in real time.

This approach moves beyond simple automation. For example, vehicles can adapt their driving style to passengers' moods, the time of day, or specific routes. Hyundai showed prototypes that could adjust climate and lighting and even suggest stops based on the AI's understanding of the occupants. The Future Mobility Program also explores AI in shared transportation and delivery fleets, where efficiency and real-time adaptability are critical.

For Nvidia, this partnership extends its leadership in AI computing into the automotive space. Its DRIVE platform powers many of the functions Hyundai envisions, including sensor fusion, perception, and natural language processing. Hyundai's designers and engineers are embedding these capabilities in premium vehicles and plan to bring them to mainstream models over the next few years.

A significant part of Hyundai and Nvidia's shared vision focuses on safety that feels natural and supportive rather than intrusive. AI-enhanced systems monitor both the environment outside and the condition of people inside. Cars can recognize when a driver is distracted, drowsy, or even unwell and take steps such as suggesting a break or slowing down safely. Advanced external sensors combined with Nvidia's AI help vehicles react faster to pedestrians, cyclists, and unpredictable events.

Beyond safety, the human-centered design shows up in how the AI interacts through voice, gestures, and even facial expressions. Instead of rigid commands, the car understands conversational language and subtle cues, which can lower stress and make the experience feel more personal. Hyundai emphasized that AI in mobility should not alienate users with overly technical behavior but rather feel like a companion that learns and improves over time.

Hyundai also highlighted accessibility as part of this effort. AI-driven assistance can help people with mobility challenges by customizing vehicle settings or enabling remote control features. For families, the system can adjust to different drivers, recognize children in the back seat, and proactively offer reminders or alerts.

By choosing CES 2025 as the venue for this announcement, Hyundai and Nvidia sent a clear message about the direction of consumer technology. CES has grown beyond gadgets and screens, becoming a showcase for how technology reshapes everyday life. Visitors at the event experienced live demonstrations of AI-powered vehicles navigating simulated urban settings and interacting with passengers naturally.

These were not just theoretical concepts. Hyundai and Nvidia presented real prototypes and outlined a roadmap for deploying the technology in the next generation of vehicles starting in 2026. This timeline suggests that these AI capabilities will soon move from exhibition halls onto city streets. Analysts at the show noted that the partnership positions Hyundai as a leader among automakers embracing AI at meaningful scale rather than treating it as a marketing afterthought.

For Nvidia, CES 2025 underscored the company's commitment to applying AI well beyond data centers and consumer electronics. Nvidia showcased how its AI computing engines could process vast amounts of sensor data, manage real-time decision-making, and scale to millions of vehicles on the road. The collaboration with Hyundai presents AI in mobility not just as a feature but as a foundation for the future.

While the Hyundai-Nvidia partnership offers an exciting glimpse into the future, it doesn’t come without challenges. Integrating sophisticated AI into vehicles demands rigorous testing, regulatory approvals, and robust cybersecurity measures. Concerns about data privacy and how much personal information vehicles collect and process will need to be addressed clearly to gain consumer trust.

Hyundai executives acknowledged that building public confidence is just as important as technical innovation. They plan to roll out features gradually, starting with enhancements to existing driver-assistance systems before introducing fully adaptive AI experiences. Nvidia’s expertise in secure, efficient AI processing will be critical in this phased approach.

The Future Mobility Program also raises broader questions about the role of cars in society. As vehicles become more autonomous and interconnected, city infrastructure and traffic management systems will need to evolve alongside them. Hyundai indicated it is already working with city planners and technology providers to ensure that vehicles and urban environments can communicate seamlessly.

Longer term, this collaboration opens possibilities beyond personal cars. Hyundai and Nvidia are exploring AI solutions for public transportation, cargo fleets, and even air mobility. These extensions could redefine how goods and people move through both urban and rural spaces, reducing congestion and improving sustainability.

The Hyundai-Nvidia partnership unveiled at CES 2025 represents a meaningful step toward redefining mobility with artificial intelligence. Rather than treating AI as an add-on, Hyundai is making it the core of a new approach to transportation: one that adapts to people, enhances safety, and works seamlessly in complex environments. Nvidia's expertise supplies the computing power needed to make this vision practical. As these ideas move from concept to the road, they promise to reshape how people experience travel, making it smarter and more responsive to human needs. The program reflects a shared belief that AI can make vehicles more than a means of transportation, turning them into thoughtful companions on the journey.

Advertisement

Nvidia is set to manufacture AI supercomputers in the US for the first time, while Deloitte deepens agentic AI adoption through partnerships with Google Cloud and ServiceNow

Struggling to connect tables in SQL queries? Learn how the ON clause works with JOINs to accurately match and relate your data

How LLMs and BERT handle language tasks like sentiment analysis, content generation, and question answering. Learn where each model fits in modern language model applications

Snowflake's acquisition of Neeva boosts enterprise AI with secure generative AI platforms and advanced data interaction tools

Watsonx AI bots help IBM Consulting deliver faster, scalable, and ethical generative AI solutions across global client projects

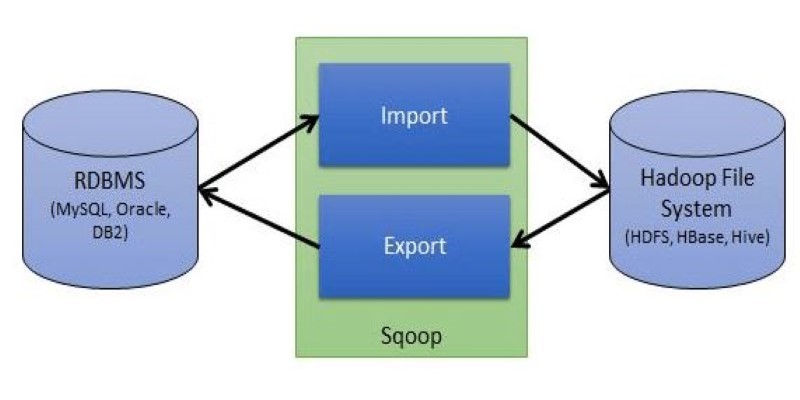

Explore Apache Sqoop, its features, architecture, and operations. Learn how this tool simplifies data transfer between Hadoop and relational databases with speed and reliability

Discover a clear SQL and PL/SQL comparison to understand how these two database languages differ and complement each other. Learn when to use each effectively

How AI is changing the future of work, who controls its growth, and the hidden role venture capital plays in shaping its impact across industries

How the Model-Connection Platform (MCP) helps organizations connect LLMs to internal data efficiently and securely. Learn how MCP improves access, accuracy, and productivity without changing your existing systems

IBM showcased its agentic AI at RSAC 2025, introducing a new approach to autonomous security operations. Learn how this technology enables faster response and smarter defense

Learn how to install, configure, and run Apache Flume to efficiently collect and transfer streaming log data from multiple sources to destinations like HDFS

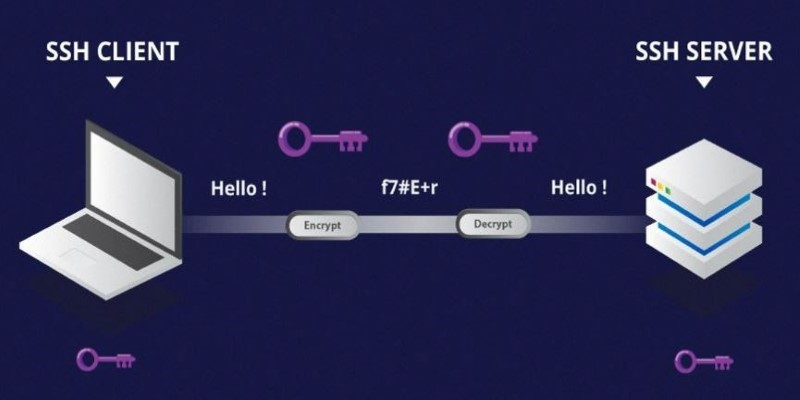

Learn the difference between SSH and Telnet in cyber security. This article explains how these two protocols work, their security implications, and why SSH is preferred today