One of the most crucial aspects of modern programming is runtime efficiency. Developers want software that performs reliably under pressure. Among many languages, C and Rust often dominate comparisons for raw processing power. For decades, C has powered demanding workloads, embedded systems, and operating systems. Rust is a newer language that rivals C's speed while adding modern features and memory safety.

Developers must examine runtime performance under real workloads to make informed choices. This comparison examines workload types, optimization strategies, and benchmarks. Runtime results reveal how each language handles heavy computation. These insights guide developers in selecting the best fit for future projects. This article examines the differences between C and Rust in execution and highlights trade-offs that extend beyond raw speed.

Execution time measures how long code takes to run from start to finish. Even milliseconds matter in competitive settings. Faster runtimes tend to bring better scalability, higher efficiency, and smoother user experiences. Engineers often compare execution times to identify bottlenecks. A fair comparison requires consistent compiler settings, hardware, and inputs. Algorithms, memory usage, and data size all affect the results.

Repeating each test and averaging the results produces more stable, accurate measurements. Faster execution has a direct impact on results in industries like robotics, gaming, and finance. Developers use timing data to optimize applications, minimize delays, and improve performance. How C and Rust perform under various workloads is a major deciding factor. Execution speed is one of many metrics that influence software quality and project success.
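The repeated-measurement idea can be sketched in a few lines of Rust. This is a minimal illustration, not a full benchmarking harness: `sum_of_squares`, `time_runs`, and `mean` are hypothetical names introduced here, and the workload is a placeholder. Only `std::time::Instant` and `std::hint::black_box` come from the standard library.

```rust
use std::hint::black_box;
use std::time::{Duration, Instant};

/// Hypothetical workload used only for illustration: sums the squares of 0..n.
fn sum_of_squares(n: u64) -> u64 {
    (0..n).fold(0u64, |acc, x| acc.wrapping_add(x.wrapping_mul(x)))
}

/// Runs `f` `runs` times and returns the elapsed time of each run.
fn time_runs<F: FnMut()>(runs: usize, mut f: F) -> Vec<Duration> {
    let mut samples = Vec::with_capacity(runs);
    for _ in 0..runs {
        let start = Instant::now();
        f();
        samples.push(start.elapsed());
    }
    samples
}

/// Arithmetic mean of the samples (assumes at least one sample).
fn mean(samples: &[Duration]) -> Duration {
    let total: Duration = samples.iter().sum();
    total / samples.len() as u32
}

fn main() {
    let samples = time_runs(10, || {
        // black_box keeps the optimizer from discarding the unused result.
        black_box(sum_of_squares(black_box(1_000_000)));
    });
    println!("mean over {} runs: {:?}", samples.len(), mean(&samples));
}
```

For a fair comparison, the same inputs and an optimized build (for example `cargo run --release`) would be used on both sides. A dedicated benchmarking tool would also handle warm-up and outliers, which this sketch ignores.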

C is still renowned for its raw speed and efficiency. C programs compile into compact binaries with little runtime overhead. The language's direct memory management lets developers tune execution closely. C frequently leads benchmarks for basic algorithms, such as recursion and sorting. Its low-level access also allows developers to customize code for the target hardware, which can speed up results.

For optimal performance, C is still used by many kernels, databases, and operating systems. Proficient programmers reduce execution time by leveraging pointers and arrays. Writing subpar C code, however, can result in errors or inefficiencies that impede performance. Optimization decisions and developer experience play a major role in performance gains. In some workloads where raw speed is the most important factor, C still outperforms other languages despite its age. After decades of real-world application, its reputation is still linked to consistent execution.

Rust was designed to eliminate memory safety problems while matching C-level speed. It compiles to optimized machine code, which keeps runtime results competitive. In place of manual memory handling, Rust enforces ownership rules at compile time to prevent errors such as use-after-free and invalid access. According to benchmarks, Rust comes close to C in most workloads without observable slowdowns. Thanks to zero-cost abstractions, developers can use high-level features without compromising runtime efficiency.
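To make the idea of zero-cost abstractions concrete, the sketch below writes the same computation twice: once as an index-based loop in the style of C, and once as an iterator chain. Both function names are hypothetical. The example only shows that the two forms compute the same result; whether they compile to equally fast machine code depends on the compiler version and optimization level, so it is worth measuring rather than assuming.

```rust
/// Index-based loop, written the way a C programmer might.
fn sum_even_squares_loop(values: &[u32]) -> u64 {
    let mut total = 0u64;
    let mut i = 0;
    while i < values.len() {
        let v = values[i] as u64;
        if v % 2 == 0 {
            total += v * v;
        }
        i += 1;
    }
    total
}

/// The same computation expressed as an iterator chain.
fn sum_even_squares_iter(values: &[u32]) -> u64 {
    values
        .iter()
        .map(|&v| v as u64)
        .filter(|v| v % 2 == 0)
        .map(|v| v * v)
        .sum()
}

fn main() {
    let data: Vec<u32> = (1..=10).collect();
    // Both versions must agree on the result.
    assert_eq!(sum_even_squares_loop(&data), sum_even_squares_iter(&data));
    println!("sum of even squares: {}", sum_even_squares_iter(&data));
}
```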

One of Rust's greatest advantages is its support for concurrency, which enables safe parallel execution on modern hardware. Developers can write dependable multi-threaded code without the data races that undermine correctness and performance. The generated binaries frequently run as efficiently as C, even though compilation may take longer. Rust's execution strength comes from combining performance with safety guarantees. Industries looking for speed and dependability in critical systems are drawn to this dual benefit. Rust demonstrates performance gains through modern design while avoiding common pitfalls of older languages.
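As a small illustration of safe parallel execution, the following sketch splits a slice into chunks and sums each chunk on its own thread using `std::thread::scope` from the standard library. The function name `parallel_sum` and the choice of four workers are assumptions made for this example. Each thread only reads its own chunk, and the compiler rejects code that would let threads mutate shared data without synchronization.

```rust
use std::thread;

/// Sums `data` by splitting it into chunks and summing each chunk on its own thread.
fn parallel_sum(data: &[u64], workers: usize) -> u64 {
    let workers = workers.max(1);
    // Ceiling division so every element lands in a chunk; at least 1 so `chunks` is valid.
    let chunk_len = ((data.len() + workers - 1) / workers).max(1);
    thread::scope(|scope| {
        let handles: Vec<_> = data
            .chunks(chunk_len)
            .map(|chunk| scope.spawn(move || chunk.iter().sum::<u64>()))
            .collect();
        handles
            .into_iter()
            .map(|handle| handle.join().expect("worker thread panicked"))
            .sum::<u64>()
    })
}

fn main() {
    let data: Vec<u64> = (1..=1_000).collect();
    println!("parallel sum: {}", parallel_sum(&data, 4));
}
```

For inputs this small, thread startup costs will likely outweigh any gain. The point of the sketch is the structure, not a speedup.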

Direct comparisons highlight the similarities and differences between the two languages. C frequently performs marginally faster in simple algorithmic tests, such as computing Fibonacci sequences. For larger tasks, such as file input or matrix multiplication, Rust performs comparably, without significant differences. Some workloads favor Rust, particularly concurrent ones, while others show C ahead by a few microseconds. Rust still takes longer to compile, but its runtime performance is consistently competitive.
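For readers who want to try such comparisons themselves, here is a sketch of two kernels of the kind mentioned above, written in Rust. The function names, the flat row-major matrix layout, and the loop order are choices made for this illustration and are not taken from any specific benchmark suite. An equivalent C version would use the same loops, which is part of why the two languages tend to land close together on such tests.

```rust
/// Iterative Fibonacci with F(0) = 0 and F(1) = 1.
/// The result is only meaningful up to F(93); larger values wrap around in a u64.
fn fib(n: u32) -> u64 {
    let (mut a, mut b) = (0u64, 1u64);
    for _ in 0..n {
        let next = a.wrapping_add(b);
        a = b;
        b = next;
    }
    a
}

/// Naive multiplication of two n x n matrices stored row-major in flat slices.
fn matmul(a: &[f64], b: &[f64], n: usize) -> Vec<f64> {
    assert_eq!(a.len(), n * n);
    assert_eq!(b.len(), n * n);
    let mut c = vec![0.0; n * n];
    for i in 0..n {
        for k in 0..n {
            let aik = a[i * n + k];
            // Innermost loop walks rows of `b` and `c` contiguously.
            for j in 0..n {
                c[i * n + j] += aik * b[k * n + j];
            }
        }
    }
    c
}

fn main() {
    println!("fib(50) = {}", fib(50));
    let a = [1.0, 2.0, 3.0, 4.0];
    let b = [5.0, 6.0, 7.0, 8.0];
    println!("a * b = {:?}", matmul(&a, &b, 2));
}
```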

Despite minor performance trade-offs, security-focused developers frequently favor Rust. Teams requiring maximum predictability, especially in embedded systems, often choose C. Benchmarks confirm that neither language dominates every category. Workload type and coding style have a greater influence on performance gaps than the language itself. Because both compile to native code and consistently deliver comparable speeds, both are suitable for large-scale and critical projects.

Performance depends more on optimization than on the language itself. C programmers rely on pointer arithmetic, memory alignment, and algorithm refinements to boost speed. Compiler flags such as -O3 help binaries run more efficiently. Developers also improve runtime by designing efficient loops and reducing memory allocations. Rust developers optimize their code in similar ways. Because the borrow checker verifies ownership and borrowing at compile time, those checks add no runtime cost. For further gains, profiling tools help identify bottlenecks in both languages.
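Reducing memory allocations is one optimization that carries over directly between the two languages. The sketch below, with hypothetical function names and a made-up `record-` label format, contrasts allocating a fresh string on every iteration with reusing a single buffer. How much time this saves depends on the allocator and the workload, so treat it as a pattern to profile rather than a guaranteed win.

```rust
use std::fmt::Write;

/// Allocates a fresh String for every record.
fn total_len_naive(ids: &[u32]) -> usize {
    let mut total = 0;
    for id in ids {
        let line = format!("record-{id}"); // new heap allocation each iteration
        total += line.len();
    }
    total
}

/// Reuses one buffer; `clear` empties the String but keeps its capacity.
fn total_len_reusing_buffer(ids: &[u32]) -> usize {
    let mut line = String::with_capacity(32);
    let mut total = 0;
    for id in ids {
        line.clear();
        write!(line, "record-{id}").expect("writing to a String cannot fail");
        total += line.len();
    }
    total
}

fn main() {
    let ids: Vec<u32> = (0..1_000).collect();
    // Both versions produce the same answer; only the allocation pattern differs.
    assert_eq!(total_len_naive(&ids), total_len_reusing_buffer(&ids));
    println!("total formatted length: {}", total_len_reusing_buffer(&ids));
}
```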

Concurrency adds another layer, and here Rust streamlines parallel execution without the typical pitfalls. Threading in C must be handled carefully to prevent data races and other undefined behavior. Algorithmic efficiency frequently outweighs language differences, which underscores the importance of design. Developers who regularly profile, optimize, and test their code unlock further performance gains. Both languages reward optimization discipline with faster, more reliable results.
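The point about algorithmic efficiency can be illustrated with a duplicate check, chosen here purely as an example. The quadratic version compares every pair of elements, while the hashed version makes a single pass using `std::collections::HashSet`. Both function names are hypothetical. On large inputs the difference between these two approaches would typically dwarf any gap between C and Rust implementations of the same algorithm.

```rust
use std::collections::HashSet;

/// Compares every pair of elements: O(n^2) comparisons in the worst case.
fn has_duplicate_quadratic(values: &[i64]) -> bool {
    for i in 0..values.len() {
        for j in (i + 1)..values.len() {
            if values[i] == values[j] {
                return true;
            }
        }
    }
    false
}

/// Single pass with a hash set: O(n) expected time, at the cost of extra memory.
fn has_duplicate_hashed(values: &[i64]) -> bool {
    let mut seen = HashSet::with_capacity(values.len());
    // `insert` returns false when the value was already present.
    values.iter().any(|v| !seen.insert(*v))
}

fn main() {
    let values = [3, 1, 4, 1, 5, 9];
    println!("quadratic: {}", has_duplicate_quadratic(&values));
    println!("hashed:    {}", has_duplicate_hashed(&values));
}
```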

It takes more than just examining raw speed data to decide between C and Rust. In domains where complete control is necessary, such as embedded systems and kernels, C remains the dominant language. For projects requiring long-term maintainability, concurrency, and safety, Rust excels. Developers weigh trade-offs between Rust's safer memory management and C's marginally faster runtimes. C might be a better choice for short-term projects that require speed.

Rust often proves to be more sustainable for long-term software development. It supports projects that demand dependability. Using well-known tools, teams with extensive C knowledge may produce results more quickly. Rust's protective features may be more advantageous for teams starting from scratch. Neither language is always better in every situation. Workload, safety requirements, and development objectives all influence which option is the better choice. Execution speed is important, but real-world use cases ultimately determine the choice.

In practical workloads, both C and Rust deliver impressive runtime efficiency. C often runs faster in raw benchmarks, particularly with simpler tasks. Rust, however, combines near-equal performance with memory safety and strong support for concurrency. C remains the most predictable choice for applications demanding maximum speed. Developers should consider the type of workload, safety needs, and team expertise before making a decision. Execution time alone cannot dictate the choice. Each language offers distinct strengths, and aligning these with project requirements gives the best chance of a good outcome. By striking a balance between speed and safety, developers can achieve reliable, high-performance results across various industries.

Advertisement

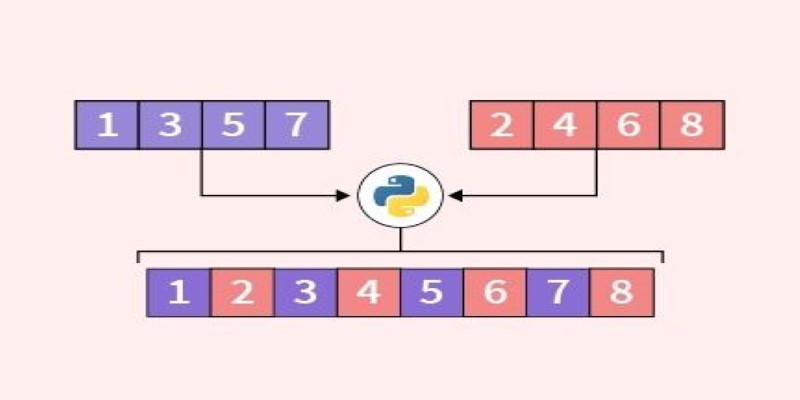

Looking for the best way to merge two lists in Python? This guide walks through ten practical methods with simple examples. Whether you're scripting or building something big, learn how to combine lists in Python without extra complexity

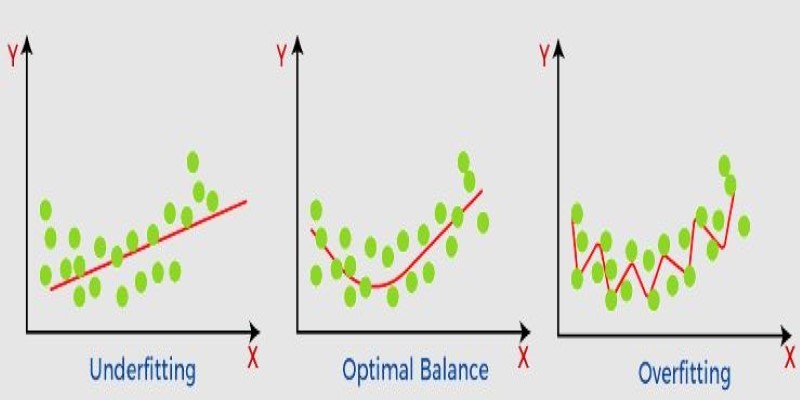

What non-generalization and generalization mean in machine learning models, why they happen, and how to improve model generalization for reliable predictions

How AI middle managers tools can reduce administrative load, support better decision-making, and simplify communication. Learn how AI reshapes the role without replacing the human touch

AI-first devices are reshaping how people interact with technology, moving beyond screens and apps to natural, intelligent experiences. Discover how these innovations could one day rival the iPhone by blending convenience, emotion, and AI-driven understanding into everyday life

Discover a clear SQL and PL/SQL comparison to understand how these two database languages differ and complement each other. Learn when to use each effectively

Achieve lightning-fast SetFit Inference on Intel Xeon processors with Hugging Face Optimum Intel. Discover how to reduce latency, optimize performance, and streamline deployment without compromising model accuracy

Nvidia is set to manufacture AI supercomputers in the US for the first time, while Deloitte deepens agentic AI adoption through partnerships with Google Cloud and ServiceNow

Learn the top 5 AI change management strategies and practical checklists to guide your enterprise transformation in 2025.

How AI is changing the future of work, who controls its growth, and the hidden role venture capital plays in shaping its impact across industries

Speed up your deep learning projects with NVIDIA DGX Cloud. Easily train models with H100 GPUs on NVIDIA DGX Cloud for faster, scalable AI development

Nvidia NeMo Guardrails enhances AI chatbot safety by blocking bias, enforcing rules, and building user trust through control

Discover the exact AI tools and strategies to build a faceless YouTube channel that earns $10K/month.