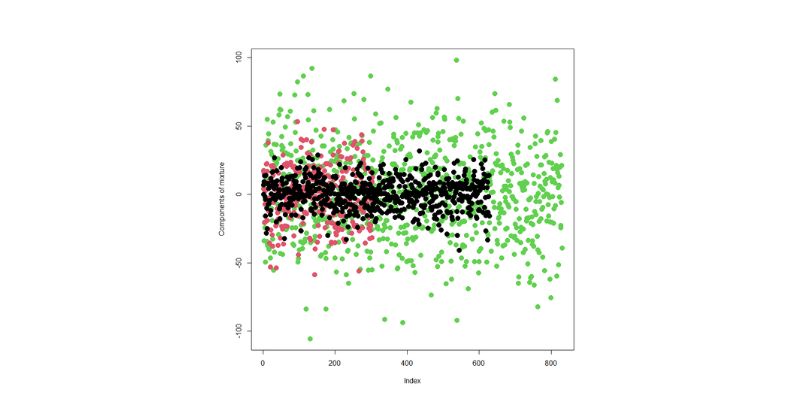

Regression models help us make predictions and explain the relationships between variables. In data science, several candidate models are often developed for the same dataset, each relying on different variables, transformations, or assumptions. Selecting an appropriate model is crucial, as a poor choice can lead to erroneous findings.

Statistical significance helps determine whether observed differences reflect genuine relationships or are merely due to chance. Without significance testing, a model might seem robust yet produce predictions that cannot be trusted. Students, researchers, and analysts therefore need reliable comparison techniques. Formal tests, information criteria, and careful interpretation together help identify the stronger model. This guide walks step by step through evaluating regression models using simple methods.

When two regression models are compared, statistical significance indicates whether one explains the data better than the other by more than chance alone would produce. A small p-value is evidence of a real difference. By convention, a p-value below 0.05 is often treated as significant, although that threshold is a convention rather than a rule. In that case, the improvement is unlikely to be random noise. Significance is also assessed for the individual regression coefficients.

A statistically significant coefficient means the data provide evidence that the predictor is related to the outcome; it does not by itself mean the estimate is accurate. Conversely, a high p-value means the data cannot distinguish the coefficient from zero. Always keep in mind that significance does not equate to practical importance. A p-value measures how likely a result at least as extreme as the observed one would be if there were no true effect. When choosing between competing regression models in practice, knowing this helps prevent mistakes.
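To make the coefficient-level significance described above concrete, here is a minimal sketch that fits an ordinary least squares model with NumPy and computes a two-sided t-test p-value for each coefficient with SciPy. The function name `ols_coefficient_tests` and the synthetic data are assumptions made for illustration; they do not come from the article.

```python
import numpy as np
from scipy import stats


def ols_coefficient_tests(X, y):
    """Fit OLS with an intercept; return estimates, standard errors,
    t statistics, and two-sided p-values for each coefficient."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    if X.ndim == 1:
        X = X[:, None]
    n = len(y)
    design = np.column_stack([np.ones(n), X])  # prepend intercept column
    k = design.shape[1]
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ beta
    dof = n - k  # residual degrees of freedom
    sigma2 = residuals @ residuals / dof  # unbiased residual variance
    cov = sigma2 * np.linalg.inv(design.T @ design)
    se = np.sqrt(np.diag(cov))
    t_stats = beta / se
    p_values = 2 * stats.t.sf(np.abs(t_stats), dof)
    return beta, se, t_stats, p_values


# Synthetic data (assumed for illustration): y depends on x1 but not on x2.
rng = np.random.default_rng(0)
n = 200
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
y = 1.0 + 2.0 * x1 + rng.normal(size=n)

beta, se, t_stats, p_values = ols_coefficient_tests(np.column_stack([x1, x2]), y)
for name, b, p in zip(["intercept", "x1", "x2"], beta, p_values):
    print(f"{name}: estimate={b:.3f}, p-value={p:.4f}")
```

In this setup the p-value for `x1` should be very small, while the p-value for `x2` will usually, though not always, be large, since `x2` has no true effect on `y`.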

Model comparison rests on hypothesis testing. Typically, the null hypothesis states that the two models fit equally well, for example that the extra predictors in the larger model all have zero coefficients. The alternative hypothesis states that one model fits better. These hypotheses can be tested with a t-test or an F-test. The F-test compares the overall fit of nested models using the variance each one explains. If the result is significant, the larger model fits better. T-tests examine the individual coefficients within a model.

Significant results suggest stronger predictor variables. When assessing models, these tests reduce uncertainty, so the decision rests on evidence rather than gut feeling. Hypothesis testing thus offers a methodical approach to identifying the more robust regression model, and dependable results lead to better conclusions and well-informed choices.
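The F-test comparison described above can be sketched as a partial F-test between two nested least squares models. This is an illustrative sketch under stated assumptions: the helper names `rss` and `nested_f_test`, and the synthetic data, are hypothetical, and the test assumes the smaller design matrix's columns are a subset of the larger one's.

```python
import numpy as np
from scipy import stats


def rss(design, y):
    """Residual sum of squares from an OLS fit of y on the design matrix."""
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    return float(resid @ resid)


def nested_f_test(design_small, design_large, y):
    """Partial F-test: does the larger nested model reduce the residual
    sum of squares by more than chance alone would explain?"""
    n = len(y)
    df_num = design_large.shape[1] - design_small.shape[1]  # extra parameters
    df_den = n - design_large.shape[1]  # residual df of the larger model
    rss_small = rss(design_small, y)
    rss_large = rss(design_large, y)
    f_stat = ((rss_small - rss_large) / df_num) / (rss_large / df_den)
    p_value = stats.f.sf(f_stat, df_num, df_den)
    return f_stat, p_value


# Synthetic data (assumed for illustration): both x1 and x2 affect y.
rng = np.random.default_rng(42)
n = 150
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
y = 1.0 + 2.0 * x1 + 1.5 * x2 + rng.normal(size=n)

ones = np.ones(n)
design_small = np.column_stack([ones, x1])      # intercept + x1
design_large = np.column_stack([ones, x1, x2])  # intercept + x1 + x2

f_stat, p_value = nested_f_test(design_small, design_large, y)
print(f"F = {f_stat:.2f}, p-value = {p_value:.3g}")
```

A small p-value here would suggest that adding `x2` improves the fit beyond what chance would produce, which is the situation the synthetic data were built to show.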

R-squared quantifies the extent to which predictors account for the variance in the dependent variable. Higher R-squared values typically indicate a better fit. R-squared, however, never decreases when predictors are added, so it can be misleadingly high. Adjusted R-squared corrects for this by penalizing predictors that do not improve the fit enough to justify their inclusion. It rewards simplicity and penalizes complexity, so it gives a fairer picture when comparing two models of different sizes.

A higher adjusted R-squared usually indicates stronger explanatory power relative to model size. It strikes a balance between precision and parsimony. Analysts should interpret R-squared in conjunction with other metrics rather than relying on it alone. Both values show how well models capture relationships and serve as a guide for comparison. Taking these precautions reduces the risk of overfitting and helps identify the model best supported by the data. A thorough assessment of this kind makes findings more reliable for study and use.
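As a small worked example of the adjustment described above, the sketch below implements the standard formulas for R-squared and adjusted R-squared in plain Python. The R-squared values, sample size, and predictor counts in the example are hypothetical numbers chosen only to show how a larger model can have a higher R-squared but a lower adjusted R-squared.

```python
def r_squared(y, y_hat):
    """R^2 = 1 - SS_res / SS_tot for observed y and fitted values y_hat."""
    mean_y = sum(y) / len(y)
    ss_res = sum((obs - fit) ** 2 for obs, fit in zip(y, y_hat))
    ss_tot = sum((obs - mean_y) ** 2 for obs in y)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n, p):
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / (n - p - 1),
    where n is the sample size and p the number of predictors
    (not counting the intercept)."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


# Hypothetical comparison: n = 50 observations.
small = adjusted_r_squared(0.80, n=50, p=3)  # 3 predictors, R^2 = 0.80
large = adjusted_r_squared(0.81, n=50, p=8)  # 8 predictors, R^2 = 0.81

print(f"small model adjusted R^2: {small:.4f}")
print(f"large model adjusted R^2: {large:.4f}")
```

With these assumed numbers, the five extra predictors raise R-squared by only 0.01, and the adjusted value for the larger model comes out lower than for the smaller one.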

The likelihood ratio test applies when models are nested, meaning that one model is a simpler special case of the other. It examines whether including additional predictors yields a significant improvement in fit. The test compares the log-likelihood values of the two models. A significant result indicates that the more complex model fits better.

If the result is not significant, the simpler model is preferred, since it avoids extraneous variables that do not improve predictions. The likelihood ratio test is frequently used in settings such as logistic regression. It shows when added complexity improves the fit enough to be worth including. This makes choosing between nested models more straightforward, and basing the decision on a statistical test gives researchers greater confidence. When the test is applied correctly, the comparison is transparent and reproducible.
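The likelihood ratio test described above reduces to a short calculation once the two log-likelihoods are known. The sketch below assumes the usual large-sample result that twice the difference in log-likelihoods follows a chi-squared distribution with degrees of freedom equal to the number of extra parameters. The function name `likelihood_ratio_test` and the log-likelihood values are hypothetical.

```python
from scipy import stats


def likelihood_ratio_test(loglik_simple, loglik_complex, extra_params):
    """Likelihood ratio test for nested models.

    loglik_simple:  maximized log-likelihood of the simpler model
    loglik_complex: maximized log-likelihood of the more complex model
    extra_params:   number of additional parameters in the complex model
    """
    lr_stat = 2.0 * (loglik_complex - loglik_simple)
    p_value = stats.chi2.sf(lr_stat, df=extra_params)
    return lr_stat, p_value


# Hypothetical log-likelihoods, e.g. from two nested logistic regressions.
lr_stat, lr_p_value = likelihood_ratio_test(
    loglik_simple=-120.5, loglik_complex=-115.2, extra_params=2
)
print(f"LR statistic = {lr_stat:.2f}, p-value = {lr_p_value:.4f}")
```

With these assumed values the p-value falls below 0.05, so the two extra predictors would be judged a significant improvement. Both log-likelihoods must come from models fit to the same observations for the comparison to be valid.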

Regression models can also be compared using information criteria. The two most common are the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC). Both weigh goodness of fit against complexity. Lower AIC or BIC values indicate a better model, provided the models are fit to the same data. They discourage overfitting by penalizing models with superfluous predictors. BIC penalizes extra parameters more heavily than AIC in all but very small samples, so AIC more often favors the more complex model.

Examining both criteria when comparing models helps to strike a balance in decision-making. For transparency, analysts frequently report both AIC and BIC. These criteria are particularly helpful for non-nested models, where the F-test and likelihood ratio test do not apply. They supplement R-squared values and hypothesis testing. Using information criteria ensures that both simplicity and accuracy are taken into account, which helps in selecting the most statistically sound regression model.
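The trade-off between the two criteria can be illustrated with the standard definitions, AIC = 2k - 2 ln(L) and BIC = k ln(n) - 2 ln(L), where k is the number of estimated parameters, n the sample size, and ln(L) the maximized log-likelihood. The log-likelihoods, parameter counts, and sample size below are hypothetical values chosen so that the two criteria disagree.

```python
import math


def aic(loglik, k):
    """Akaike Information Criterion: 2k - 2 * log-likelihood."""
    return 2 * k - 2 * loglik


def bic(loglik, k, n):
    """Bayesian Information Criterion: k * ln(n) - 2 * log-likelihood."""
    return k * math.log(n) - 2 * loglik


# Hypothetical models fit to the same n = 200 observations.
n_obs = 200
model_a = {"loglik": -250.0, "k": 3}  # simpler model
model_b = {"loglik": -245.0, "k": 6}  # more complex model, better raw fit

aic_a, aic_b = aic(**model_a), aic(**model_b)
bic_a, bic_b = bic(n=n_obs, **model_a), bic(n=n_obs, **model_b)

print(f"AIC: A = {aic_a:.1f}, B = {aic_b:.1f}")
print(f"BIC: A = {bic_a:.1f}, B = {bic_b:.1f}")
```

In this assumed case AIC is lower for model B while BIC is lower for model A, which is the kind of disagreement that makes reporting both criteria useful.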

Statistical results are useful only when carefully interpreted. Even a highly significant model needs to be applicable in practice. Analysts need to relate the numbers to real-world use. A model might be statistically superior yet provide little practical benefit. When making decisions, always consider whether the improvement is large enough to matter. Statistical significance does not always mean practical usefulness.

Alongside tests, consider predictive power and effect size. Inform stakeholders of the results in plain language. Emphasize the advantages of one model without using too many technical terms. Transparency promotes evidence-based decision-making and fosters trust and confidence. Thorough interpretation ensures the chosen model is both useful and statistically valid. When comparing regression models, this harmony between application and numbers is crucial.

Comparing regression models with statistical significance takes methodical procedures and careful interpretation. Hypothesis tests, R-squared values, likelihood ratio tests, and information criteria each contribute. Analysts must balance validity checks against the real-world relevance of their findings. The best choice combines accurate predictions, significant relationships, and clear interpretation. These techniques enable analysts and researchers to make decisions with assurance, and a proper comparison helps ensure that results are meaningful rather than random. In every research or analysis task, statistical reasoning supports sound decisions, clarity, and accuracy.

Advertisement

Learn how integrating feature selection into model estimation improves accuracy, reduces noise, and boosts efficiency in ML

Discover the exact AI tools and strategies to build a faceless YouTube channel that earns $10K/month.

How serverless GPU inference is transforming the way Hugging Face users deploy AI models. Learn how on-demand, GPU-powered APIs simplify scaling and cut down infrastructure costs

How using open-source AI models can give your startup more control, lower costs, and a faster path to innovation—without relying on expensive black-box systems

Sam Altman returns as OpenAI CEO amid calls for ethical reforms, stronger governance, restored trust in leadership, and more

Get full control over Python outputs with this clear guide to mastering f-strings in Python. Learn formatting tricks, expressions, alignment, and more—all made simple

Learn how the healthcare, marketing, finance, and logistics industries apply generative AI to achieve their business goals

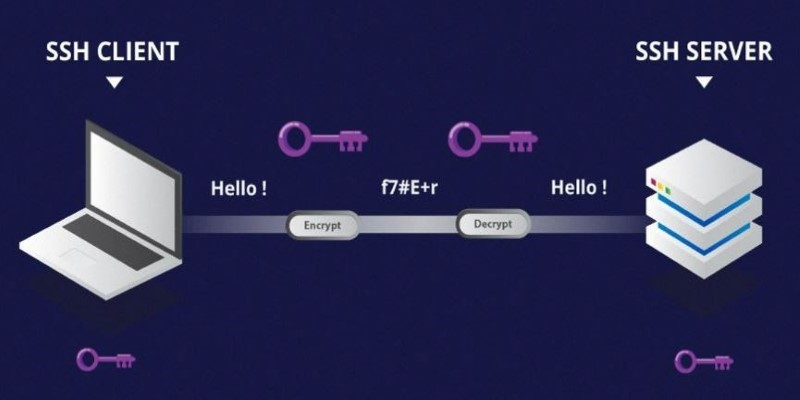

Learn the difference between SSH and Telnet in cyber security. This article explains how these two protocols work, their security implications, and why SSH is preferred today

Nvidia is set to manufacture AI supercomputers in the US for the first time, while Deloitte deepens agentic AI adoption through partnerships with Google Cloud and ServiceNow

Can small AI agents understand what they see? Discover how adding vision transforms SmolAgents from scripted tools into adaptable systems that respond to real-world environments

How MPT-7B and MPT-30B from MosaicML are pushing the boundaries of open-source LLM technology. Learn about their architecture, use cases, and why these models are setting a new standard for accessible AI

How does Docmatix reshape document understanding for machines? See why this real-world dataset with diverse layouts, OCR, and multilingual data is now essential for building DocVQA systems