
Sam Altman has returned as OpenAI's CEO following a dramatic leadership shakeup. His unexpected departure sparked widespread concern across the tech industry. Yet the board's decision to reinstate him did not resolve all underlying tensions. Key issues—like ethical AI, decision-making, and governance—remain unresolved. Though not a complete solution, many view Altman's return as a step forward.

Concerns about transparency and board accountability persist. As OpenAI rapidly advances its AI technology, stronger checks and balances are urgently needed. Stakeholders are calling for clearer communication and greater oversight. With Altman back in place, OpenAI must work to rebuild public trust. Balancing innovation with responsibility is now more important than ever. These events highlight the urgent need for ethical leadership in AI.

Recent leadership turmoil at OpenAI exposed deeper issues in organizational governance. Sam Altman's sudden dismissal shocked both investors and employees. The board's failure to explain its decision caused widespread confusion and frustration. Internal communication lacked transparency and sparked panic across departments. Many employees criticized the board's secretive handling of the situation, which fueled further mistrust. Several senior leaders, among others, resigned in rapid succession.

The unrest peaked when more than 700 employees signed a letter demanding Altman's return, highlighting loyalty to him while also revealing serious governance flaws. OpenAI now faces pressure to improve how decisions are made and communicated. Avoiding similar crises will require stronger internal procedures and clearly defined responsibilities. Stakeholders are urging reforms that promote accountability, openness, and inclusive decision-making. While Altman's return eased some of the unrest, it did not resolve structural weaknesses. Long-term stability depends on consistent governance practices and transparent communication.

The sudden leadership change significantly impacted OpenAI's employees and investors. Many staff members were shocked and deeply concerned about the company's future direction. Unclear communication led to confusion and a noticeable drop in morale. Amid rising concern over the board's actions, employees rallied behind Sam Altman. More than 700 employees even threatened to resign unless he was reinstated. Their unified stance placed strong pressure on the board to reverse its decision. This remarkable show of loyalty highlighted not only Altman's influence but also the staff's dissatisfaction with governance.

Investors also played a crucial role in shaping the outcome. Major partners, including Microsoft, expressed unease over the instability of leadership. The situation emphasized how much OpenAI's stability relies on consistent and trusted leadership. The company must rebuild internal and external trust to maintain innovation. Without a clear vision and stable governance, employee confidence and investor backing will remain fragile.

Sam Altman's return to OpenAI highlights the rapid progress of artificial intelligence, but it also reinforces the growing concerns around ethics and safety. As OpenAI expands at an unprecedented pace, questions about responsible innovation and transparency continue to emerge. Many experts are increasingly alarmed by the speed of AI deployment and call for stronger ethical guidelines. Critics argue that OpenAI's shifting leadership reflects deeper uncertainties about its mission and direction. Some point out that the organization's dual mandate—pursuing profit and research—creates potential conflicts of interest.

Altman must immediately reaffirm the company's commitment to open and safe artificial intelligence. Balancing ethical concerns with business success will be challenging. Board decisions have to support responsibility as well as innovation. The ethical issues include data privacy, model bias, and long-term threats to society. Though OpenAI has promised improved oversight, trust still has to be earned. Outside partners and researchers are watching carefully. OpenAI's next actions will shape public opinion. One of the toughest challenges for Altman's renewed leadership is aligning corporate aims with public safety. A firmer ethical foundation is now more crucial than ever.

Reinstating Sam Altman did not automatically restore public confidence in OpenAI. Many still question the reasons behind his sudden dismissal. Without full transparency, ongoing speculation continues to damage the company's reputation. As a leader in responsible AI innovation, OpenAI faces high expectations from the broader industry. Public trust is essential to sustaining long-term research and collaboration. To move forward, OpenAI must demonstrate that its internal changes have led to greater stability. Clear and consistent communication has become more critical than ever. Stakeholders now expect regular updates, transparency, and accountability.

Media coverage of the leadership change has intensified scrutiny. Restoring the company's reputation calls for both sustained action and time. Cooperation with corporations like Microsoft depends on consistent leadership. OpenAI has to reassure the sector of its long-term vision. Reforms are required in culture as much as in structure. These include open governance, ethical leadership, and clear decision-making. Only then can OpenAI rebuild lost credibility and lead with intention. Altman's leadership is now under more scrutiny than ever.

Now that Altman is back, OpenAI has to rethink its objectives and direction. The company is in a vital phase of growth. Business decisions and research need strategic clarity to direct them. Innovation remains a major priority, but it has to serve the public interest. Leadership must balance speed with accountability. Teams have to feel aligned and supported internally. Altman has to foster ethical discourse and feedback within the organization. Transparency and responsibility should be central to OpenAI's roadmap.

Fairness, model interpretability, and AI safety should be research priorities. Clear values should guide all future growth. Outside cooperation also requires strong foundations. Long-term success depends on public confidence and strong internal systems. Investors seek observable improvement in compliance and safety. Employees want purpose and job stability. The next stage is not only about leadership; it is about rebuilding direction and confidence. Given OpenAI's worldwide influence, strategic planning becomes especially important under Altman's renewed leadership.

Sam Altman's return has brought stability, but underlying challenges remain. OpenAI must work to rebuild trust both internally and externally. Its long-term success hinges on strong governance and a firm commitment to ethical standards. Balancing innovation with accountability will put Altman's leadership to the test. The company must implement better communication strategies and strengthen its organizational structure. Restoring investor and public confidence will require consistent, long-term effort. Stakeholders now want accountability, not just progress. The future of OpenAI hinges on stable leadership and ethical expansion. Altman's comeback starts a new chapter, but OpenAI's problems are far from over.

Advertisement

At CES 2025, Hyundai and Nvidia unveiled their AI Future Mobility Program, aiming to transform transportation with smarter, safer, and more adaptive vehicle technologies powered by advanced AI computing

Wondering whether a data lake or data warehouse fits your needs? This guide explains the differences, benefits, and best use cases to help you pick the right data storage solution

Discover execution speed differences between C and Rust. Learn benchmarks, optimizations, and practical trade-offs for developers

Speed up your deep learning projects with NVIDIA DGX Cloud. Easily train models with H100 GPUs on NVIDIA DGX Cloud for faster, scalable AI development

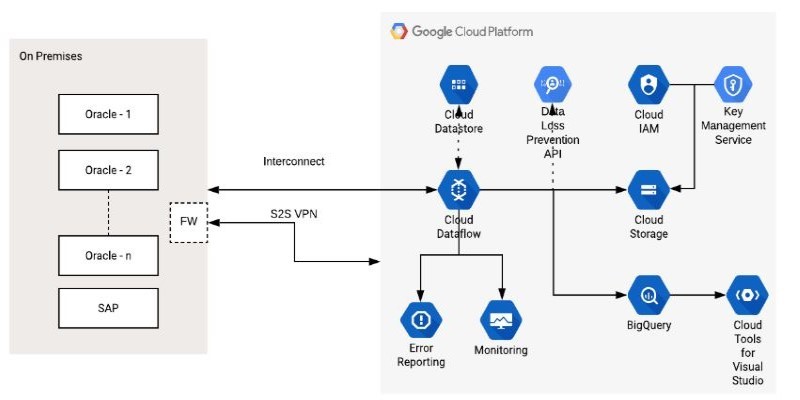

How the Google Cloud Dataflow Model helps you build unified, scalable data pipelines for streaming and batch processing. Learn its features, benefits, and connection with Apache Beam

Google risks losing Samsung to Bing if it fails to enhance AI-powered mobile search and deliver smarter, better, faster results

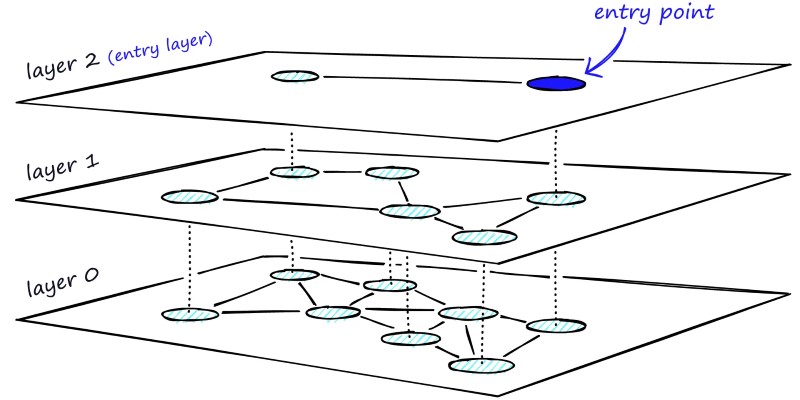

Learn how HNSW enables fast and accurate approximate nearest neighbor search using a layered graph structure. Ideal for recommendation systems, vector search, and high-dimensional datasets

Can a small language model actually be useful? Discover how SmolLM runs fast, works offline, and keeps responses sharp—making it the go-to choice for developers who want simplicity and speed without losing quality

How AI middle managers tools can reduce administrative load, support better decision-making, and simplify communication. Learn how AI reshapes the role without replacing the human touch

Learn how to compare two regression models with statistical significance for accuracy, reliability, and better decision-making

AI-first devices are reshaping how people interact with technology, moving beyond screens and apps to natural, intelligent experiences. Discover how these innovations could one day rival the iPhone by blending convenience, emotion, and AI-driven understanding into everyday life

How MPT-7B and MPT-30B from MosaicML are pushing the boundaries of open-source LLM technology. Learn about their architecture, use cases, and why these models are setting a new standard for accessible AI