Advertisement

Feature selection is a crucial step in developing effective machine learning models. Raw datasets often include irrelevant, redundant, or noisy variables, which can reduce accuracy, increase complexity, and slow down training. By incorporating selection into the estimation process, only the most useful predictors are retained. It leads to stronger forecasts, more straightforward interpretation, and more efficient performance.

Integration aligns both tasks, rather than treating them as separate stages. Models can then adapt, refine, and optimize their structure naturally. For businesses and researchers, the benefits are clear—lower costs, faster insights, and more dependable outcomes. This guide examines the methods, challenges, and real-world applications of integrating feature selection with model estimation to achieve improved results.

The process of identifying the most significant variables in a dataset is known as feature selection. Eliminating redundant, misleading, or irrelevant information is the primary objective. By doing this, predictive accuracy is significantly increased, and model overfitting is reduced. Because it aligns the training process with the actual signals in the data, feature selection becomes crucial in estimation.

Models may find it difficult to generalize without it. Time, memory, and resources are saved when selection and estimation are combined. It facilitates the simplification of evaluation and preprocessing. For example, when only the most important predictors are left, a logistic regression model performs better. Limiting features also make it easier for researchers to explain model results. All things considered, feature selection ensures that estimation represents reality rather than noise.

Integrating feature selection into model estimation offers several key advantages for both practitioners and organizations. By focusing on the most relevant variables, models achieve stronger predictive accuracy. With fewer features, training and evaluation cycles run faster, which reduces computational costs. Simpler structures also make models easier to interpret and more transparent, addressing stakeholder concerns about clarity.

Integration further lowers the risk of multicollinearity, a common issue in regression-based systems. Filtering out misleading signals strengthens resilience and leads to more dependable performance. When estimation and selection are handled together, development cycles become shorter, enabling quicker decision-making. Academic research also benefits from reproducible results that reinforce credibility. Overall, this combined approach allows high performance while maintaining models that are practical, reliable, and cost-effective.

Feature selection can be directly incorporated into estimation workflows using various techniques. The coefficients of less significant predictors are penalized by regularization methods such as Lasso and Elastic Net. They consequently automatically reduce unimportant features to zero. By dividing on the most informative variables, decision tree algorithms like Random Forests carry out built-in selection.

During training, dimensionality reduction is also managed by Support Vector Machines with embedded kernels. During estimation, Bayesian methods prune features that are not relevant by assigning them prior probabilities. Evolutionary algorithms refine subsets by applying principles of optimization. Wrapper models, which iteratively assess features during training, are another technique. For well-rounded outcomes, hybrid approaches combine wrappers, embedded methods, and filters. Every technique has unique advantages. Aligning selection tactics with the model type and business goals is crucial.

Integration offers numerous advantages, but it also presents particular challenges. Overfitting can occur when selection becomes too aggressive. Removing too many predictors risks losing valuable signals hidden in the data. Massive datasets also increase computational costs, especially when wrapper-based methods are applied. High-dimensional approaches, such as deep learning, make interpretability more difficult.

Another challenge is inconsistency, as different models may select other features from the same dataset. It complicates deployment across multiple systems. Bias can occur if selection favors data imbalances over genuine signals. Choosing between automated techniques and domain expertise also creates tension. Finally, successful integration requires careful tuning of hyperparameters. Without proper adjustments, results may decline rather than improve.

In many different industries, feature selection is incorporated into estimation. A few, but very important, biomarkers are used in healthcare models to predict disease. To accurately assess risk, credit scoring in the financial industry relies on a select group of economic indicators. Integrated selection allows marketing teams to examine campaign performance using fewer metrics. Predictive maintenance models in manufacturing use machine data streams to find key signals.

Energy companies use feature selection to improve supply and demand forecasting. Educational platforms utilize it to analyze student performance, with an emphasis on key learning behaviors. By identifying the most essential travel patterns, transportation models simplify the system. Estimation with specific features enhances intrusion detection and fraud detection systems in cybersecurity. These applications demonstrate the strength of integration. Efficiency and performance are rising in tandem across sectors.

Structured procedures are necessary for the successful integration of feature selection into the estimation process. The first step is to preprocess the data to remove evident noise. Automated techniques should be combined with domain knowledge to extract nuanced insights. Cross-validation is crucial for avoiding overfitting and accurately assessing performance. It is also essential to monitor computational efficiency by tracking resource use and time consumption.

When applying models that use regularization or penalties, hyperparameters must be carefully adjusted. To maintain transparency, each step of the selection process should be documented. A balance between simplicity and accuracy ensures interpretability. Before deployment, selected features should be validated on separate datasets. As data evolves, the chosen features need to be reviewed regularly. Finally, integration strategies should align with business goals. Following these practices ensures consistent results and makes feature selection within estimation precise, transparent, and practical.

Incorporating feature selection into model estimation transforms machine learning workflows. It clarifies results, reduces unnecessary variables, and enhances predictive accuracy. This integration also strengthens resilience against noise while lowering computational costs. Real-world applications already show clear value in healthcare, finance, and other industries. Researchers and organizations benefit from combining selection with estimation in a single, streamlined process. Performance and efficiency combine to strengthen the reliability of data-driven systems. With integrated strategies, professionals maintain models that are flexible, cost-effective, and relevant to real-world scenarios. The approach delivers stronger outcomes for predictive modeling and more reliable insights for business decision-making.

Advertisement

What's changing inside your car? A new AI platform is making in-car assistants smarter, faster, and more human-like—here's how it works

Discover the best AI art generator tools available today. Learn how you can create AI art from text prompts using powerful, easy-to-use platforms suited for beginners and pros alike

Can small AI agents understand what they see? Discover how adding vision transforms SmolAgents from scripted tools into adaptable systems that respond to real-world environments

Learn how the healthcare, marketing, finance, and logistics industries apply generative AI to achieve their business goals

Can AI really help a Formula One team build faster, smarter cars? With real-time data crunching, simulation, and design automation, teams are transforming racing—long before the track lights go green

How Edge AI is reshaping how devices make real-time decisions by processing data locally. Learn how this shift improves privacy, speed, and reliability across industries

How benchmarking text generation inference helps evaluate speed, output quality, and model inference performance across real-world applications and workloads

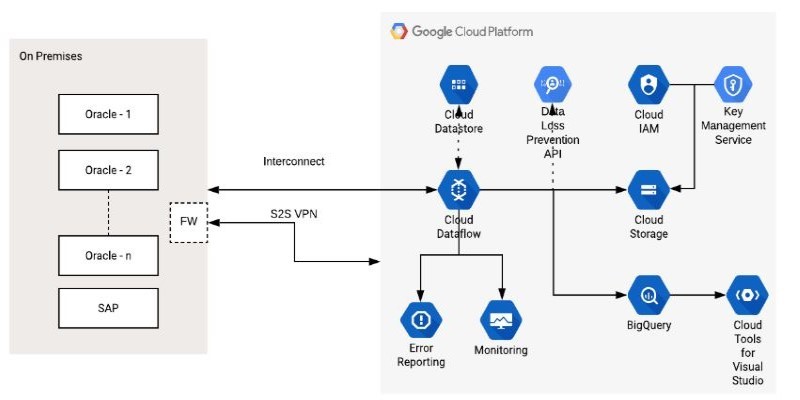

How the Google Cloud Dataflow Model helps you build unified, scalable data pipelines for streaming and batch processing. Learn its features, benefits, and connection with Apache Beam

How serverless GPU inference is transforming the way Hugging Face users deploy AI models. Learn how on-demand, GPU-powered APIs simplify scaling and cut down infrastructure costs

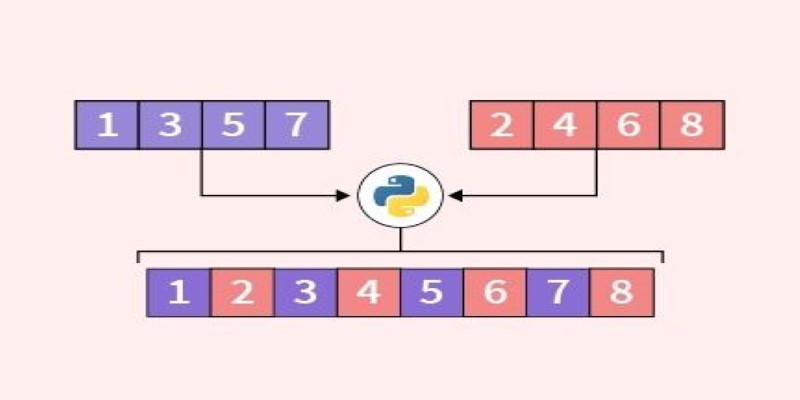

Looking for the best way to merge two lists in Python? This guide walks through ten practical methods with simple examples. Whether you're scripting or building something big, learn how to combine lists in Python without extra complexity

Discover ten easy ways of using ChatGPT to analyze and summarize complex documents with simple ChatGPT prompts.

Curious how LLMs learn to write and understand code? From setting a goal to cleaning datasets and training with intent, here’s how coding models actually come together