Artificial intelligence has long been tied to the cloud: collecting data, sending it off for analysis, then waiting for instructions. But that pattern is shifting. Edge AI is changing how machines operate by giving them the ability to process data right where it’s created. It cuts down on delays, limits how much information leaves your device, and works even without a reliable internet connection. From phones and vehicles to factories and farms, devices are starting to make decisions locally. Edge AI isn’t about doing more; it’s about doing things smarter, faster, and closer to where they matter.

Edge AI combines AI models with edge computing so that devices like phones, cameras, sensors, and machines can make decisions without relying on the cloud. Instead of sending data to faraway servers for processing, the system analyzes and acts on information directly on the device.

Take voice recognition as an example. Early voice assistants had to send audio to a server for interpretation. Newer ones can process many commands on the device itself. This shift is possible thanks to efficient, lightweight AI models that run on smaller hardware without needing huge amounts of power or memory.

The process usually begins with data from sensors or input from users. A trained AI model evaluates this data locally and makes decisions — like recognizing faces, tracking movement, or flagging equipment issues. Some devices still share selected information with the cloud for storage or further analysis, but most of the work happens on the spot.
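The flow described above (read a sensor, run a model locally, forward only selected results) can be sketched in a few lines of Python. This is a minimal illustration, not a real deployment: the `EdgeDevice` class, the `threshold` value, and the `outbox` list are all hypothetical, and a simple threshold check stands in for a trained model.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeDevice:
    # Hypothetical vibration limit; a real device would load a trained model.
    threshold: float = 80.0
    # Items queued for the cloud. Only selected events end up here.
    outbox: list = field(default_factory=list)

    def infer(self, reading: float) -> str:
        """Stand-in for on-device inference."""
        return "fault" if reading > self.threshold else "ok"

    def handle(self, reading: float) -> str:
        """Decide locally, and queue only notable events for upload."""
        label = self.infer(reading)
        if label == "fault":
            self.outbox.append({"reading": reading, "label": label})
        return label


device = EdgeDevice()
labels = [device.handle(r) for r in [42.0, 55.5, 91.2, 60.1]]
print(labels)              # every reading gets a local decision
print(len(device.outbox))  # only the flagged reading is queued for the cloud
```

Every reading is classified on the device, but only the one flagged as a fault is queued for upload, which mirrors the "most of the work happens on the spot" pattern.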

This local processing depends on specialized hardware such as Google Coral, Apple’s Neural Engine, and NVIDIA’s Jetson series. These accelerators are designed for speed and efficiency, letting machines analyze images, detect patterns, or make predictions within milliseconds. They run pre-trained models optimized for edge environments, where space and energy are limited.

Because these models must be compact and fast, developers often use simplified neural networks or frameworks like TensorFlow Lite and PyTorch Mobile. These are tailored for edge use, enabling real-time processing without overloading the device.

One of Edge AI’s biggest advantages is its low latency. Since data doesn’t have to travel to the cloud and back, devices can respond within milliseconds instead of waiting on a network round trip. In environments like autonomous vehicles, that kind of real-time processing is not just helpful; it’s necessary. A car making quick decisions about road conditions, obstacles, or traffic signals can’t afford to wait for remote instructions.
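To get a feel for why latency matters in a vehicle, it helps to work out how far a car travels while it waits for a decision. The figures below are assumptions chosen for illustration (a 100 ms cloud round trip versus 10 ms of local inference at 100 km/h), not measurements from any particular system.

```python
def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Return the metres travelled at speed_kmh while waiting delay_ms."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000


# Assumed delays, for illustration only.
cloud_m = distance_during_delay(speed_kmh=100, delay_ms=100)
edge_m = distance_during_delay(speed_kmh=100, delay_ms=10)

print(f"cloud round trip: {cloud_m:.2f} m")  # about 2.78 m
print(f"local inference:  {edge_m:.2f} m")   # about 0.28 m
```

Under these assumptions the car covers nearly three metres before a cloud response arrives, compared with roughly a quarter of a metre for a local decision.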

Privacy is another strength. Most data stays on the device rather than being sent over the internet, which reduces exposure and helps with compliance with local data regulations. For example, a smart doorbell using Edge AI can recognize familiar faces without storing those images online. Hospitals and clinics benefit too: medical devices with local AI can monitor vital signs or detect symptoms while keeping patient information secure.

Bandwidth savings also matter. When thousands of devices operate together — say, in a smart factory or city — constantly uploading data would strain the network. Edge AI avoids this by doing most of the work locally and only sending out essential updates. This makes large-scale operations more efficient and reliable.
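One common way to send "only essential updates" is to report a reading only when it has changed meaningfully since the last value that was sent. The sketch below shows that idea with a hypothetical `filter_updates` helper and a made-up `deadband` of 1.0; real systems choose the threshold per sensor.

```python
def filter_updates(readings, deadband):
    """Keep only readings that differ from the last sent value by more than deadband."""
    sent = []
    last = None
    for value in readings:
        if last is None or abs(value - last) > deadband:
            sent.append(value)
            last = value
    return sent


# Made-up temperature readings from a single sensor.
readings = [20.0, 20.1, 20.2, 21.5, 21.6, 21.4, 25.0]
sent = filter_updates(readings, deadband=1.0)

print(sent)                                   # [20.0, 21.5, 25.0]
print(f"{len(sent)} of {len(readings)} sent")  # 3 of 7 sent
```

Multiplied across thousands of devices, dropping the readings that carry no new information is where much of the bandwidth saving comes from.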

Across industries, Edge AI is already in action. In agriculture, sensors check soil moisture and crop health, giving farmers immediate feedback without needing strong internet coverage. In industrial settings, AI-enabled machines detect wear or irregularities, helping avoid breakdowns before they happen. Retail stores use it for shelf monitoring, theft detection, and customer movement tracking — all without sending video feeds to the cloud.

Even in homes, Edge AI has a growing role. Phones now use it for photo enhancement, battery optimization, and personal safety features. Smart thermostats learn household habits and adjust settings on their own. These functions happen without constant internet access or outside servers watching.

Despite its promise, Edge AI has a few hurdles to clear. A major one is hardware limitation. Edge devices are smaller and run on less power than cloud servers, so fitting high-performing AI models into them requires careful design. Developers often have to reduce the size and complexity of models while keeping them accurate — a tough balance to strike.
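Quantization is one widely used way to shrink a model: weights stored as 32-bit floats (4 bytes each) are mapped to 8-bit integers (1 byte each), at the cost of a small rounding error. The following is a simplified, pure-Python sketch of symmetric int8 quantization. The function names and sample weights are illustrative, and frameworks such as TensorFlow Lite implement more sophisticated schemes than this.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the quantized integers."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.01, 1.0]  # illustrative values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print(q)  # [50, -127, 1, 100]
print(max(abs(a - b) for a, b in zip(weights, restored)))  # worst-case rounding error
```

Each restored weight is within half a quantization step of the original. That rounding error is the accuracy trade-off the paragraph above describes, and whether it is acceptable depends on the model and the task.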

Another challenge is keeping models updated. Many edge devices operate in areas with limited or no connectivity, making over-the-air updates difficult. If a model needs improvement, pushing updates across thousands of distributed devices takes planning and secure communication systems.
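Part of that planning is making sure a device only installs a model file that arrived intact. A minimal sketch, assuming a hypothetical manifest with `version` and `sha256` fields, might check both before swapping the model. A checksum like this catches corruption but not forgery, so a production system would typically also verify a cryptographic signature.

```python
import hashlib
import hmac


def verify_update(blob: bytes, expected_sha256: str) -> bool:
    """Check that the downloaded bytes match the checksum in the manifest."""
    actual = hashlib.sha256(blob).hexdigest()
    return hmac.compare_digest(actual, expected_sha256)


def apply_update(current: dict, blob: bytes, manifest: dict) -> dict:
    """Return the new model state, or the current one if the update is rejected."""
    if manifest["version"] <= current["version"]:
        return current  # nothing newer to install
    if not verify_update(blob, manifest["sha256"]):
        return current  # corrupted or incomplete download
    return {"version": manifest["version"], "model": blob}


# Illustrative data: the manifest format and field names are assumptions.
new_blob = b"model-weights-v2"
manifest = {"version": 2, "sha256": hashlib.sha256(new_blob).hexdigest()}
current = {"version": 1, "model": b"model-weights-v1"}

updated = apply_update(current, new_blob, manifest)
rejected = apply_update(current, b"corrupted", manifest)
print(updated["version"], rejected["version"])
```

Keeping the old model whenever a check fails means a dropped connection mid-download leaves the device running its last known-good version.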

Security also remains a concern. Edge devices may store private data or perform sensitive tasks. If not well-protected, they could be hacked or tampered with. Some solutions include hardware-level encryption or secure boot methods, but these increase costs and complexity.

There’s also the matter of interoperability. Different devices, vendors, and platforms don’t always work well together. Creating a unified framework where edge and cloud systems cooperate smoothly is still a work in progress. Some efforts are underway to develop open standards, but adoption is uneven.

Even so, the technology is moving quickly. The cost of AI hardware is dropping, and tools for deploying edge models are becoming easier to use. Governments, startups, and large tech companies are all investing in edge infrastructure. As these systems mature, they’ll be able to support a wider range of use cases in more settings.

What makes Edge AI especially promising is its flexibility. It doesn’t aim to replace the cloud, but to complement it. The cloud is still great for training large models or coordinating global data. Edge devices, on the other hand, are better for fast, local decisions and reducing dependency on central servers.

Edge AI is shaping a future where devices respond faster and rely less on remote servers to function. By allowing machines to analyze data and make decisions locally, it creates more efficient, private, and responsive systems. This shift is especially valuable as internet infrastructure becomes more crowded and users demand greater privacy. Instead of passively collecting information, devices now understand and act in real time. They don’t need to constantly send data away to be useful. This new level of autonomy makes technology feel more natural and seamless. Edge AI marks a clear move toward smarter, self-reliant tools built for real-world use.

Advertisement

How AI middle managers tools can reduce administrative load, support better decision-making, and simplify communication. Learn how AI reshapes the role without replacing the human touch

How the Model-Connection Platform (MCP) helps organizations connect LLMs to internal data efficiently and securely. Learn how MCP improves access, accuracy, and productivity without changing your existing systems

AI-first devices are reshaping how people interact with technology, moving beyond screens and apps to natural, intelligent experiences. Discover how these innovations could one day rival the iPhone by blending convenience, emotion, and AI-driven understanding into everyday life

Achieve lightning-fast SetFit Inference on Intel Xeon processors with Hugging Face Optimum Intel. Discover how to reduce latency, optimize performance, and streamline deployment without compromising model accuracy

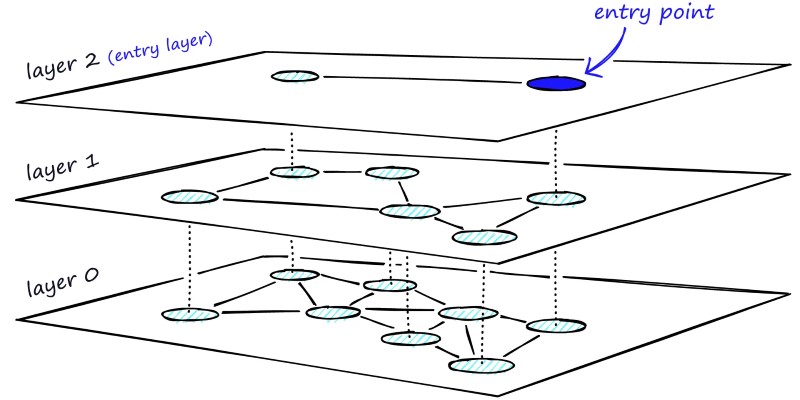

Learn how HNSW enables fast and accurate approximate nearest neighbor search using a layered graph structure. Ideal for recommendation systems, vector search, and high-dimensional datasets

How LLMs and BERT handle language tasks like sentiment analysis, content generation, and question answering. Learn where each model fits in modern language model applications

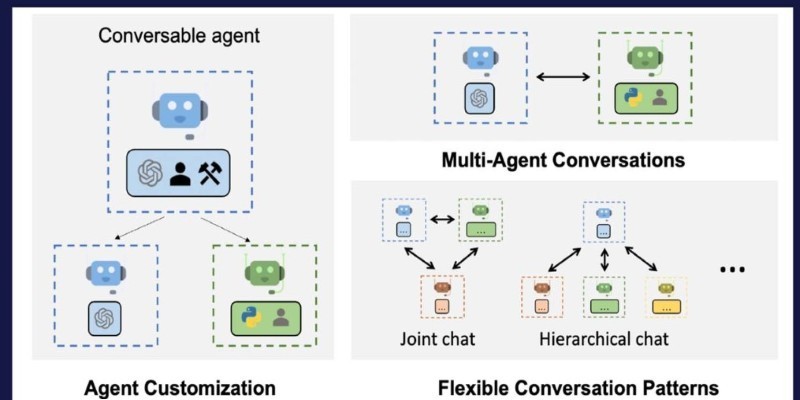

How Building Multi-Agent Framework with AutoGen enables efficient collaboration between AI agents, making complex tasks more manageable and modular

Is the future of U.S. manufacturing shifting back home? Siemens thinks so. With a $190M hub in Fort Worth, the company is betting big on AI, automation, and domestic production

Nvidia NeMo Guardrails enhances AI chatbot safety by blocking bias, enforcing rules, and building user trust through control

Struggling to connect tables in SQL queries? Learn how the ON clause works with JOINs to accurately match and relate your data

Discover the latest machine learning salary trends shaping 2025. Explore how experience, location, and industry impact earnings, and learn why AI careers continue to offer strong growth and global opportunities for skilled professionals

Learn how to compare two regression models with statistical significance for accuracy, reliability, and better decision-making