
AI is changing how work gets done, but it’s people who feel the shift most. For many workers, the arrival of AI brings up more than just curiosity; it brings uncertainty. Will their role shrink? Will they be left behind? Too often, the rollout of AI feels cold and distant, focused on tools instead of trust.

But real progress happens when companies see their employees not as obstacles to automate around, but as the core of what makes AI work. Supporting people through this change isn't optional; it's what determines whether AI strengthens the workplace or divides it.

AI projects often begin with development, not discussion. Teams work in private, testing tools and designing workflows, only to announce them later without explanation. This leaves workers guessing. Clear, early communication helps prevent that. Employees should hear directly why AI is being introduced, whether it's to reduce repetitive tasks, improve service accuracy, or support decision-making.

Trust builds through conversation, not commands. Regular updates, small group chats, and open forums go further than a single announcement. Questions deserve honest answers. Concerns should be acknowledged. Feedback needs to be heard, not just collected. When people feel informed, they feel involved. They stop fearing the unknown and start thinking about how to work with it.

Sharing small wins and early lessons during implementation also matters. It shows progress and invites input. When employees are kept up to date with real examples of how AI is helping teams or improving outcomes, the transition feels less abstract. That context reduces confusion and builds trust. Communication should stay active and two-way throughout the process.

Job loss is the headline, but it’s rarely the whole story. Most roles change rather than disappear. AI may handle routine tasks, but people remain responsible for context, judgment, and relationships. It’s not about elimination; it’s about evolution. HR and department leads should clarify what is changing, what stays the same, and what new capabilities are needed.

Often, people already have strengths that match the future direction. Their experience, problem-solving, and teamwork aren’t going away. These qualities are amplified when AI takes over the repetitive parts. What matters most is being clear. Tell people how their jobs will change and support them in adapting. When employees see a path forward, they stop bracing for loss and start planning their next move.

Companies should also invest in skills mapping to identify opportunities for growth. By highlighting where existing skills match future needs, companies can guide employees toward upskilling instead of leaving them worried about being replaced. Career development plans tied to AI adoption give people a sense of direction and possibility.

Training can’t stop at the "how-to." It needs to show people where AI fits into their daily work. If a planner is expected to use a forecasting tool, they should see how it changes their process, not just how to click through the menu. Make training role-specific and based on real situations.

Learning needs to continue well past the first session. AI tools evolve, and people need time to catch up and adjust. Build in feedback, follow-ups, and peer support. Make it part of normal work, not something extra. People also need room to try, fail, and learn. If they’re worried about making mistakes, they won’t use new tools fully. Encourage experimentation. Create a culture where learning is visible and shared.

This mindset shift is key. People aren't just users of AI; they influence how it works. They notice patterns, suggest improvements, and help the system become more useful. When people are treated as partners, not just recipients, they bring insight that no tool can replace.

The best training environments are the ones that include space for feedback. Workers often spot practical gaps in new systems before developers do. Giving them a voice in how AI gets shaped or adjusted builds ownership. It reminds everyone that AI is a tool in progress, not a finished product.

When AI enters the picture, performance metrics often need to shift. Speed might increase, but so should quality. Workers might do less manual work but spend more time interpreting results or managing outcomes. These contributions aren’t always easy to measure in old terms.

Leaders should explain what success looks like now. Is it better decisions? Smoother collaboration with AI? Fewer mistakes? These are all signs of progress, even if output numbers stay the same. Recognition matters too. When workers learn a new system, adapt quickly, or help others do the same, that deserves attention.

Positive examples spread. When employees see their efforts being valued, it creates momentum. It shows that learning and flexibility count. Over time, that shapes how the whole organization approaches change. AI doesn’t need to replace recognition; it should help create new ways to appreciate human work.

Managers should also revisit evaluation criteria. Encouraging teams to share how they've used AI to solve problems or improve efficiency shifts focus toward innovation. When success includes human judgment and smart use of AI, workers are more likely to see the tools as assets instead of threats.

AI doesn’t transform workplaces; people do. Any new system is only as effective as the support behind it. That support must begin before tools are launched and continue long after. Honest communication, thoughtful planning, relevant training, and updated goals all help workers stay confident and capable. When people are part of the process from the beginning, AI feels less like a takeover and more like a team member. Companies that treat their employees as essential partners in the journey end up with stronger results and smoother transitions. Trust, inclusion, and adaptability are what make AI adoption successful in the long run.

Advertisement

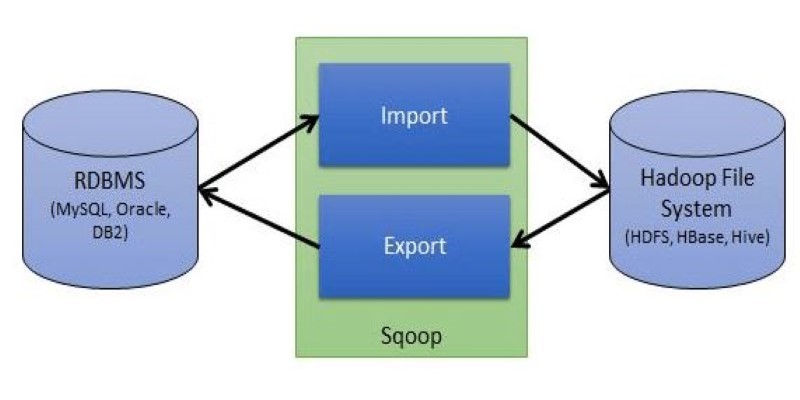

Explore Apache Sqoop, its features, architecture, and operations. Learn how this tool simplifies data transfer between Hadoop and relational databases with speed and reliability

AI saved Google from facing an antitrust breakup, but the trade-offs raise questions. Explore how AI reshaped Google’s future—and its regulatory escape

How can vision-language models learn to respond more like people want? Discover how TRL uses human preferences, reward models, and PPO to align VLM outputs with what actually feels helpful

How IBM and L’Oréal are leveraging generative AI for cosmetics to develop safer, sustainable, and personalized beauty solutions that meet modern consumer needs

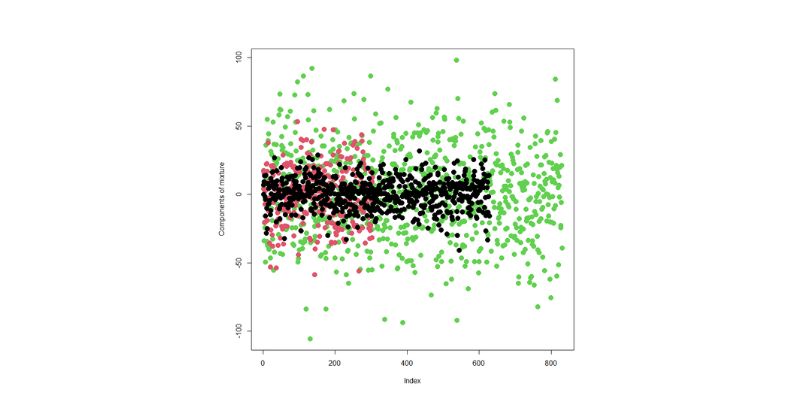

Learn how to compare two regression models with statistical significance for accuracy, reliability, and better decision-making

How Edge AI is reshaping how devices make real-time decisions by processing data locally. Learn how this shift improves privacy, speed, and reliability across industries

Can small AI agents understand what they see? Discover how adding vision transforms SmolAgents from scripted tools into adaptable systems that respond to real-world environments

What's fueling the wave of tech layoffs in 2025, from overhiring during the pandemic to the rise of AI job disruption and shifting investor demands

Learn how integrating feature selection into model estimation improves accuracy, reduces noise, and boosts efficiency in ML

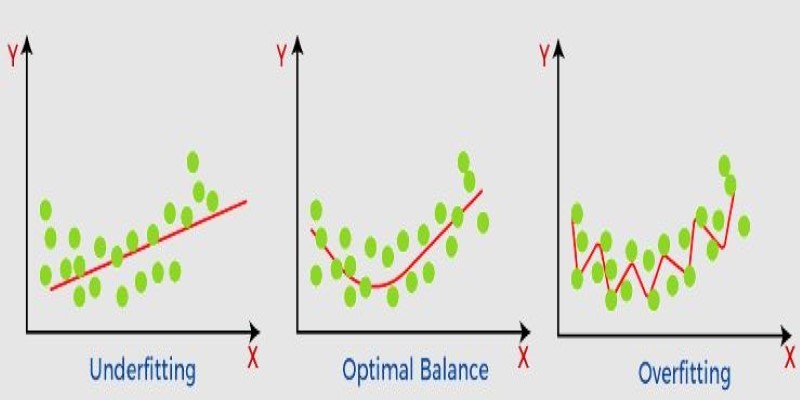

What non-generalization and generalization mean in machine learning models, why they happen, and how to improve model generalization for reliable predictions

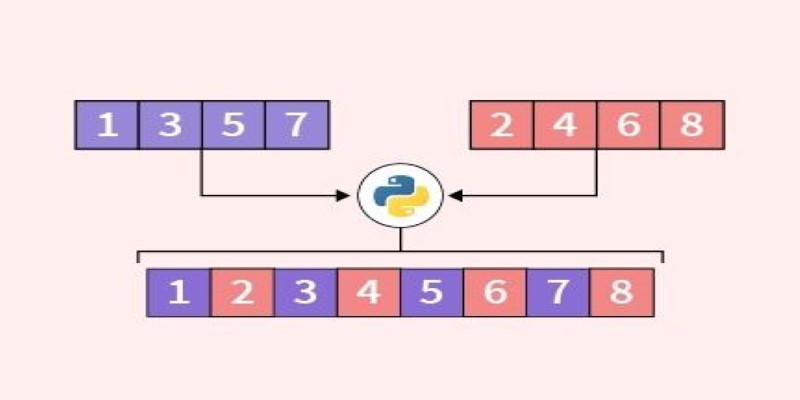

Looking for the best way to merge two lists in Python? This guide walks through ten practical methods with simple examples. Whether you're scripting or building something big, learn how to combine lists in Python without extra complexity

How to approach AI implementation in the workplace by prioritizing people. Learn how to build trust, reduce uncertainty, and support workers through clear communication, training, and role transitions