For years, using artificial intelligence meant typing in just the right command. You’d craft your prompt like a question for a genie, hoping it wouldn’t twist your words or give you something wildly off. Prompting was an art. People published guides and sold templates. But something’s shifted. Prompt engineering—the buzzword of 2022 and 2023—is no longer center stage. A new generation of AI systems is changing the way we interact with machines, and soon, nobody will be talking about prompts at all.

This isn't about better prompts or smarter templates. It's about not needing them. The future isn’t command-driven. It’s context-aware, goal-oriented, and increasingly invisible. The era of prompts is over, and what comes next will feel less like programming and more like conversation, collaboration, or even intuition.

The idea of crafting prompts to get better results was born out of a gap. AI models like GPT-3 or early chatbots needed precise cues. The wrong phrasing meant vague or broken replies. Users had to learn to “speak AI” before the AI could understand them. Now, large language models are being built with longer context, persistent memory, and a better grasp of user intent.

Instead of being told what to do, newer systems start to infer it. They remember previous sessions. They track workflows. Tools like ChatGPT with its memory feature, Google Gemini, and others are beginning to behave more like attentive assistants than blank slates.

You don’t prompt them—you talk to them. Or in some cases, you don’t talk at all. They anticipate, suggest, summarize, and complete actions without being asked. This transition removes the clunky middle layer of manual prompting and replaces it with systems that understand users based on patterns, history, and environment.

The early phase of AI interfaces was all about the command line—just text. Chatbots, text boxes, and APIs ruled. But as capabilities grew, so did the demand for more natural interaction. The future of AI interfaces is now heading toward multimodal environments where text is only one way to interact.

Voice, visuals, gestures, and embedded AI systems are becoming more dominant. You won’t always type in what you want. You’ll show it. Say it. Or let the system figure it out by observing. Apps are already moving in this direction: image generators take sketches or references, voice assistants combine commands with screen content, and productivity tools link together inputs from documents, emails, meetings, and files without user prompts at all.

In this new phase, AI becomes less of a destination and more of a layer that exists within your tools. You don’t “go to ChatGPT” or “open the AI app.” It’s just there, editing your writing, suggesting code, improving your slide deck, or fixing your spreadsheet as you work. This quiet, ambient presence marks a clear shift in how AI is accessed and used. It blends in rather than standing apart.

Prompting forced users to be precise. But the next wave of AI leans heavily on personalization. The systems don’t need you to phrase things perfectly if they already know how you think. They adapt to your tone, preferences, and pace. The future of AI interfaces lies in this personalized layer—learning not just general tasks, but your way of doing them.

Instead of trying to master generic prompts, users will start building relationships with AI systems that understand their voice, style, and typical actions. Think of an assistant that already knows you prefer short emails, detailed outlines, or certain coding patterns, and applies that automatically.

This is especially powerful when applied to professional work. Writers, analysts, developers, and designers will no longer have to prompt at all. The AI will already know their projects, their recent output, their deadlines, and their quirks. It’s not about teaching a tool every time. It’s about having a tool that already gets it.

This shift removes much of the friction that existed in the prompt era. Instead of stopping to think, “How do I ask this?” users will simply do what they were doing—and the AI will fit in seamlessly.

The next generation of AI tools will do less waiting and more doing. They’ll observe, learn, and suggest in real time. They’ll become context-aware agents that act based on a combination of inputs, not just commands.

Developments in agent-based systems, memory-aware language models, and multimodal AI all point toward an experience that mirrors working with a person. One who remembers your preferences, sees your screen, hears your voice, and adjusts as needed.

There’s a rising trend of tools that don't just answer your prompt—they take steps on your behalf. They navigate dashboards, schedule meetings, write code, fix errors, and sync data. And they do it without you typing out a single detailed instruction.

The future of AI interfaces will likely involve more proactive behavior—AI that asks you questions, notices gaps, or recommends next steps. It might notice that you're editing a presentation and offer design changes based on your past slides. It could suggest responses to messages based on your writing tone and recent conversations. It might even remind you of tasks based on the time of day or previous habits.

Importantly, this will not feel like AI “taking over.” It will feel like workflows are getting smoother. Instead of crafting prompts, users will review, tweak, or accept suggestions. The control stays with the human. But the legwork moves to the machine.

The tools will still be powered by large language models, of course. But the way we use them—and the effort required—will change. AI will go from something we interact with directly to something that supports us quietly in the background.

The era of prompts is over—not because they failed, but because they served their purpose. They helped us bridge the gap between human thought and machine understanding. But AI is now better at picking up context and anticipating needs. We’re entering a phase where AI listens, adapts, and acts with less direction. It’s no longer about typing the perfect command, but working with systems that already understand what comes next.

Discover execution speed differences between C and Rust. Learn benchmarks, optimizations, and practical trade-offs for developers

Know how AI transforms Cybersecurity with fast threat detection, reduced errors, and the risks of high costs and overdependence

Accelerate AI with AWS GenAI tools offering scalable image creation and model training using Bedrock and SageMaker features

Learn how to compare two regression models with statistical significance for accuracy, reliability, and better decision-making

AI saved Google from facing an antitrust breakup, but the trade-offs raise questions. Explore how AI reshaped Google’s future—and its regulatory escape

Learn how to install, configure, and run Apache Flume to efficiently collect and transfer streaming log data from multiple sources to destinations like HDFS

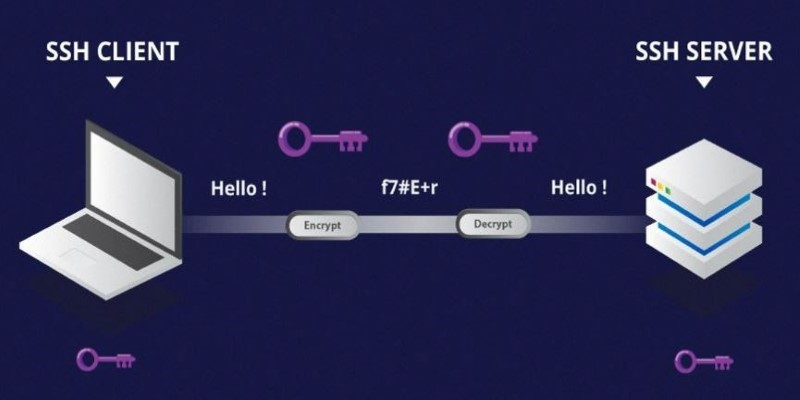

Learn the difference between SSH and Telnet in cyber security. This article explains how these two protocols work, their security implications, and why SSH is preferred today

The era of prompts is over, and AI is moving toward context-aware, intuitive systems. Discover what’s replacing prompts and how the future of AI interfaces is being redefined

How MPT-7B and MPT-30B from MosaicML are pushing the boundaries of open-source LLM technology. Learn about their architecture, use cases, and why these models are setting a new standard for accessible AI

An AI startup has raised $1.6 million in seed funding to expand its practical automation tools for businesses. Learn how this AI startup plans to make artificial intelligence simpler and more accessible

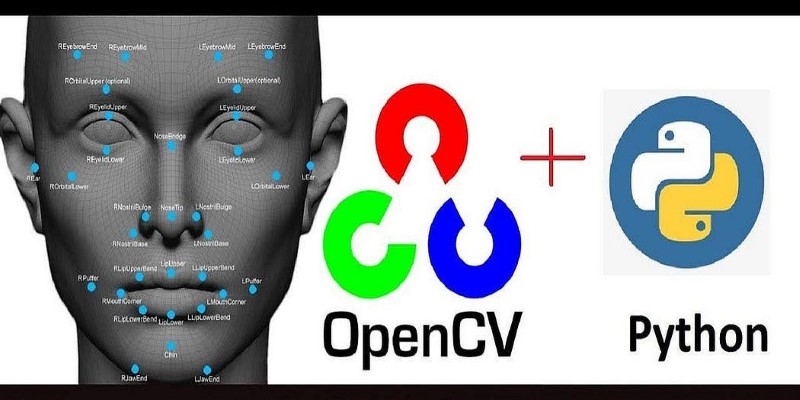

Ready to make computers see like humans? Learn how to get started with OpenCV—install it, process images, apply filters, and build a real foundation in computer vision with just Python

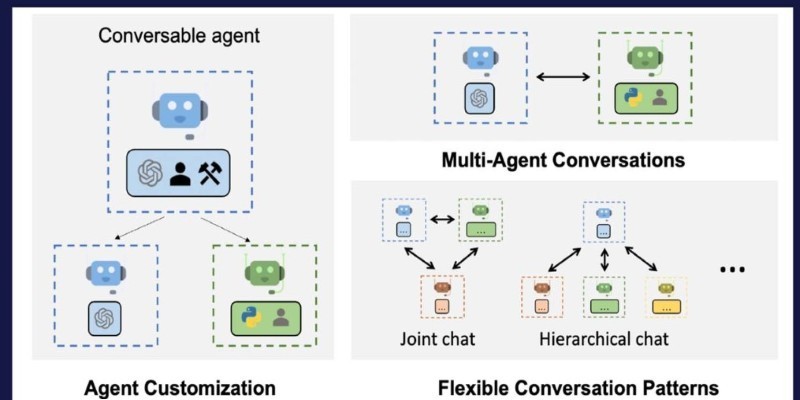

How Building Multi-Agent Framework with AutoGen enables efficient collaboration between AI agents, making complex tasks more manageable and modular